news + thoughts

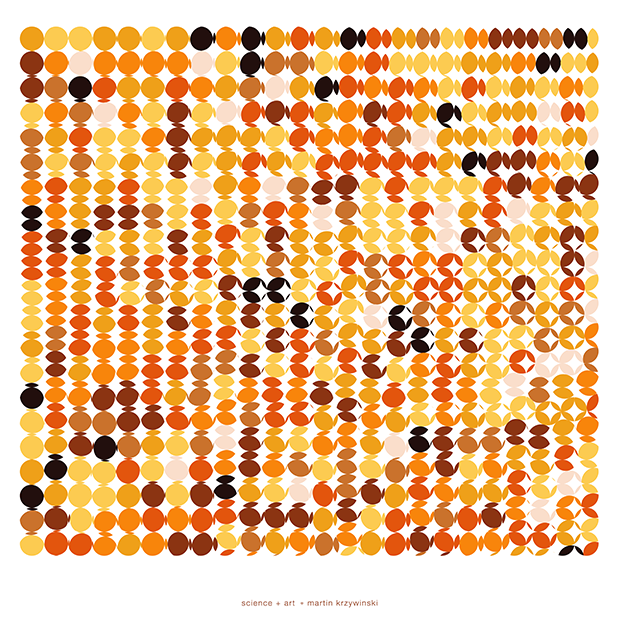

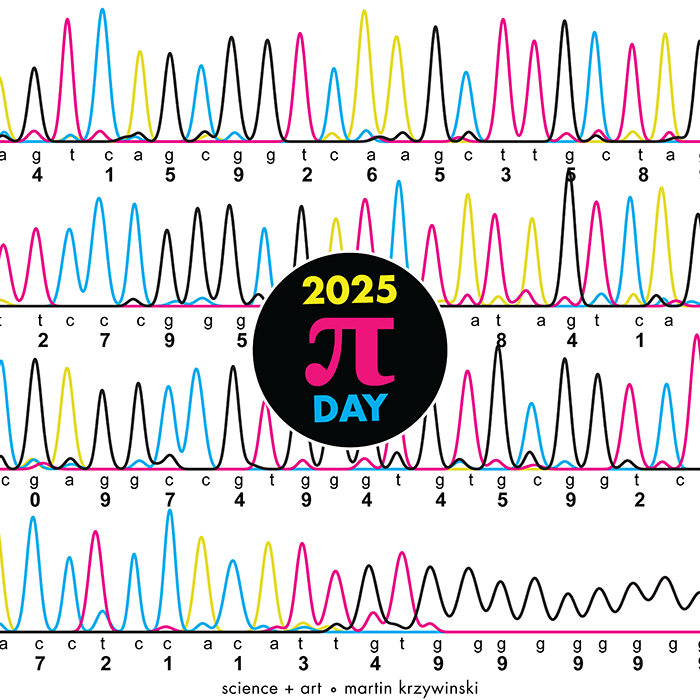

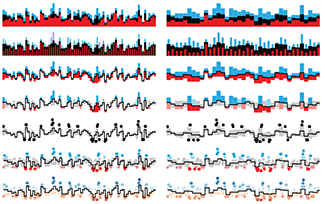

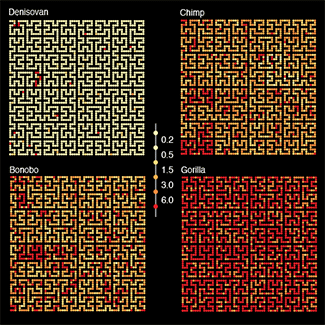

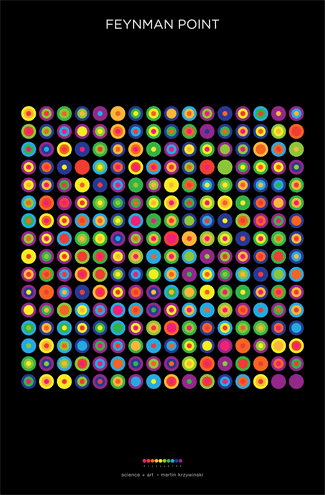

Beyond Belief Campaign BRCA Art

Fuelled by philanthropy, findings into the workings of BRCA1 and BRCA2 genes have led to groundbreaking research and lifesaving innovations to care for families facing cancer.

This set of 100 one-of-a-kind prints explores the structure of these genes. Each artwork is unique. If you put them all together, you get the full sequence of the BRCA1 and BRCA2 proteins.

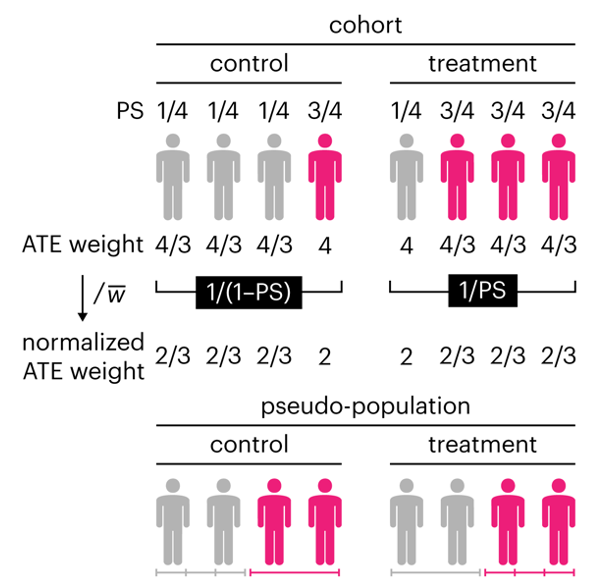

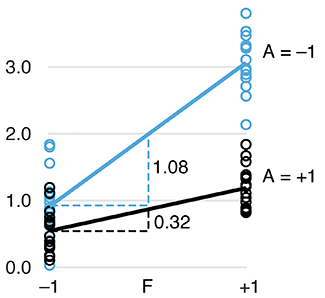

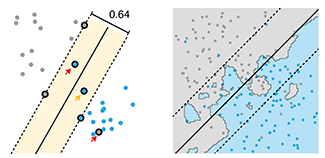

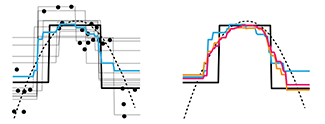

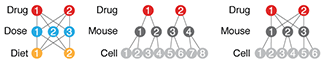

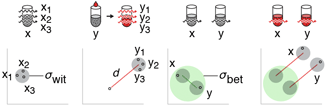

Propensity score weighting

The needs of the many outweigh the needs of the few. —Mr. Spock (Star Trek II)

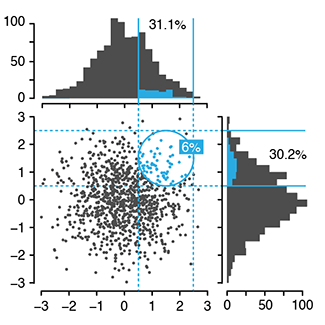

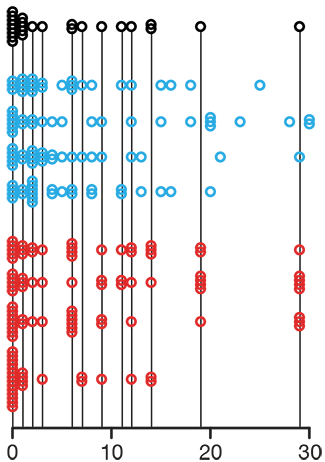

This month, we explore a related and powerful technique to address bias: propensity score weighting (PSW), which applies weights to each subject instead of matching (or discarding) them.
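As a rough illustration of weighting instead of matching, here is a minimal sketch that uses inverse probability weights, one common choice of propensity score weight (the column may discuss others). The function name `ipw_effect` and the toy inputs are hypothetical, and the propensity scores are assumed to be already estimated.

```python
def ipw_effect(treated, outcome, propensity):
    """Estimate the average treatment effect with inverse probability weights.

    treated:    list of 0/1 treatment indicators
    outcome:    list of observed outcomes
    propensity: list of P(treated = 1 | covariates), assumed already estimated
    """
    # Treated subjects get weight 1/e, controls get weight 1/(1 - e),
    # so every subject is kept and none is discarded.
    w_t = [t / e for t, e in zip(treated, propensity)]
    w_c = [(1 - t) / (1 - e) for t, e in zip(treated, propensity)]
    # Weighted means of the outcome in each group (normalized by total weight).
    mean_t = sum(w * y for w, y in zip(w_t, outcome)) / sum(w_t)
    mean_c = sum(w * y for w, y in zip(w_c, outcome)) / sum(w_c)
    return mean_t - mean_c


# Toy data: with equal propensities the estimate is a plain difference in means.
effect = ipw_effect([1, 1, 0, 0], [3, 5, 1, 2], [0.5, 0.5, 0.5, 0.5])
```

Subjects who were unlikely to receive the treatment they got carry larger weights, which is how the weighted groups come to resemble each other.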

Kurz, C.F., Krzywinski, M. & Altman, N. (2025) Points of significance: Propensity score weighting. Nat. Methods 22:1–3.

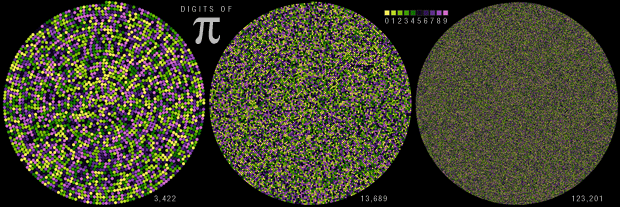

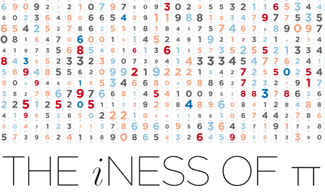

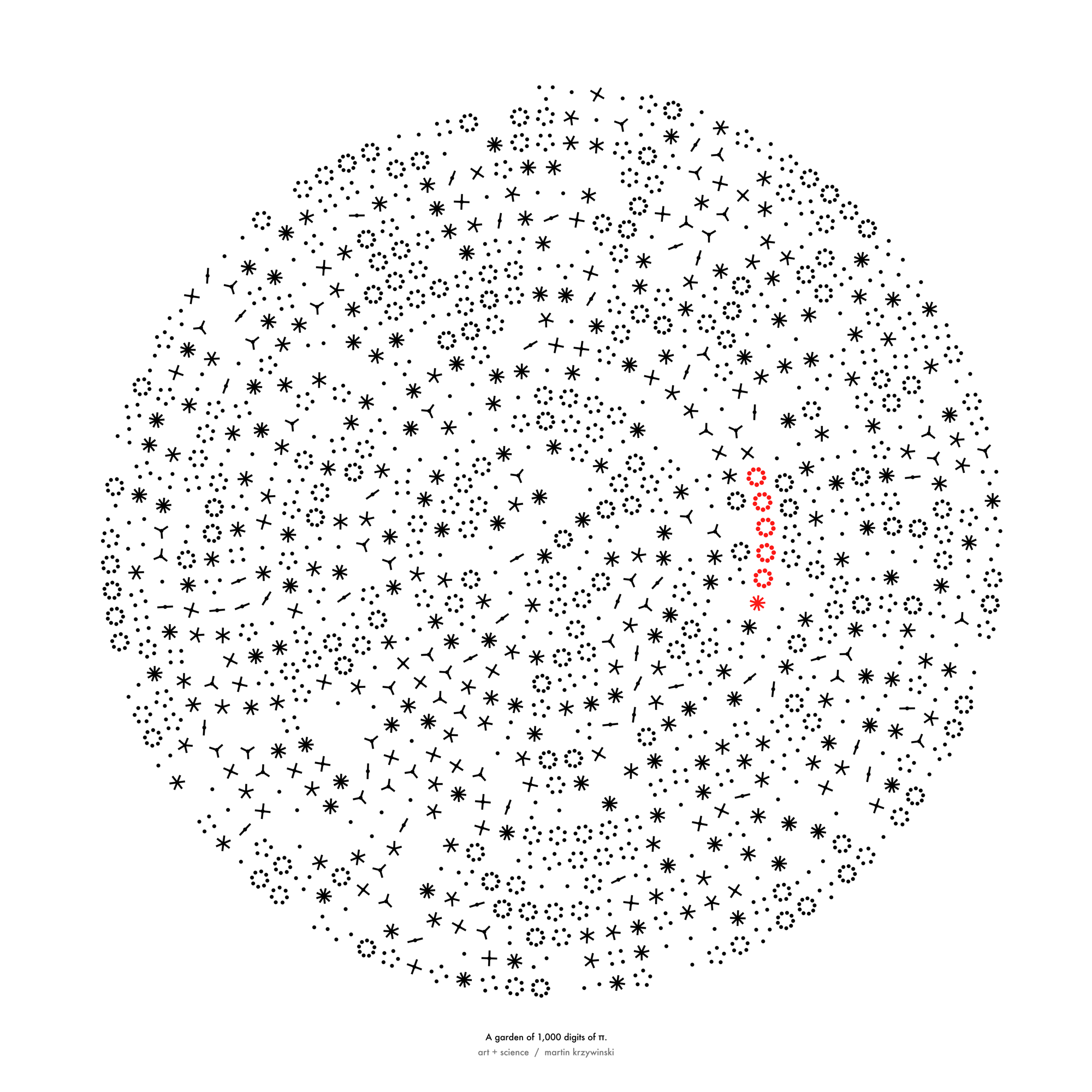

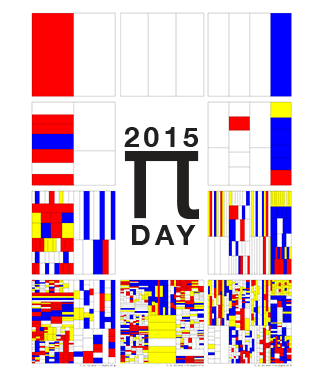

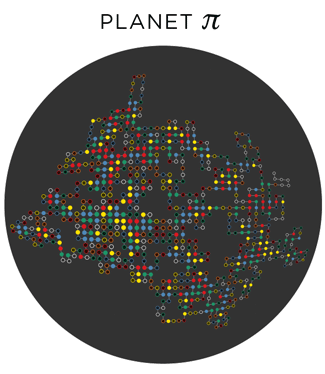

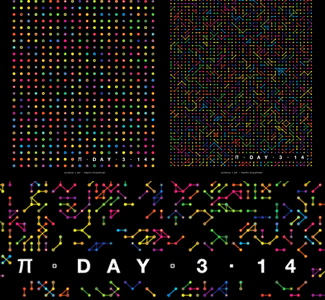

Happy 2025 π Day—

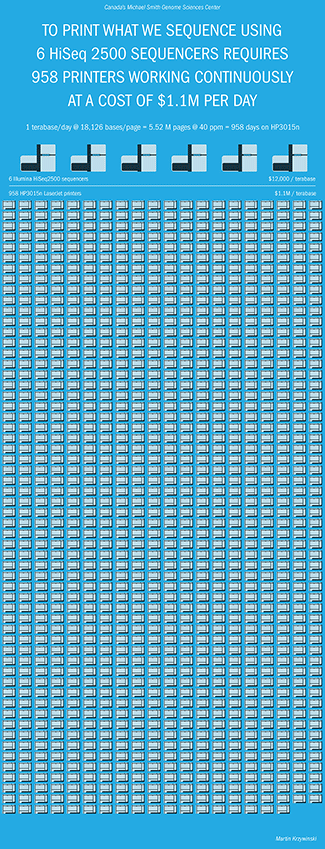

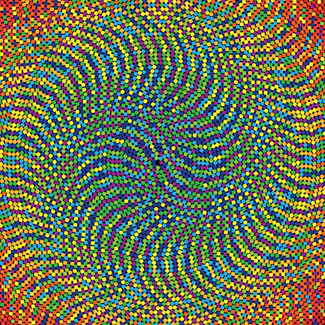

TTCAGT: a sequence of digits

Celebrate π Day (March 14th) and sequence digits like it's 1999. Let's call some peaks.

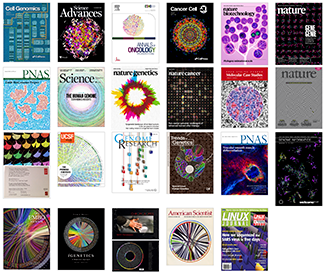

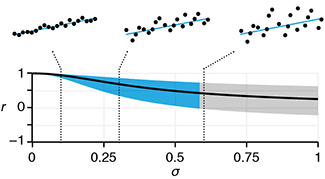

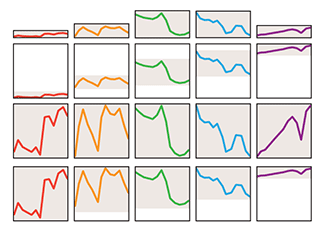

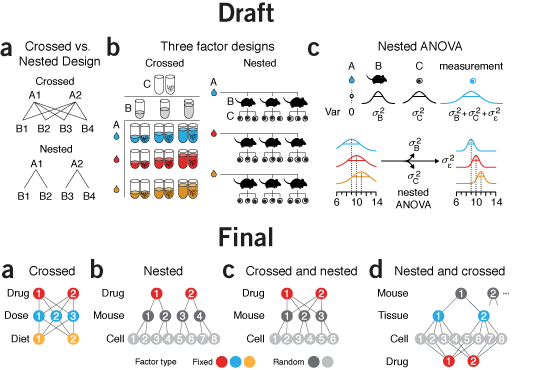

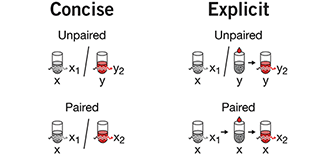

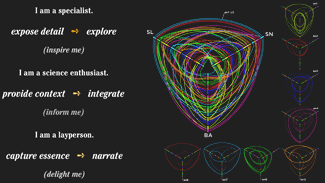

Crafting 10 Years of Statistics Explanations: Points of Significance

I don’t have good luck in the match points. —Rafael Nadal, Spanish tennis player

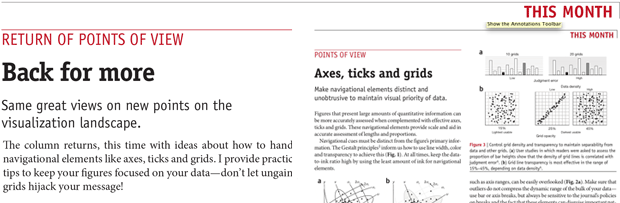

Points of Significance is an ongoing series of short articles about statistics in Nature Methods that started in 2013. Its aim is to provide clear explanations of essential concepts in statistics for a nonspecialist audience. The articles favor heuristic explanations and make extensive use of simulated examples and graphical explanations, while maintaining mathematical rigor.

Topics range from basic, but often misunderstood, such as uncertainty and P-values, to relatively advanced, but often neglected, such as the error-in-variables problem and the curse of dimensionality. More recent articles have focused on timely topics such as modeling of epidemics, machine learning, and neural networks.

In this article, we discuss the evolution of topics and details behind some of the story arcs, our approach to crafting statistical explanations and narratives, and our use of figures and numerical simulations as props for building understanding.

Altman, N. & Krzywinski, M. (2025) Crafting 10 Years of Statistics Explanations: Points of Significance. Annual Review of Statistics and Its Application 12:69–87.

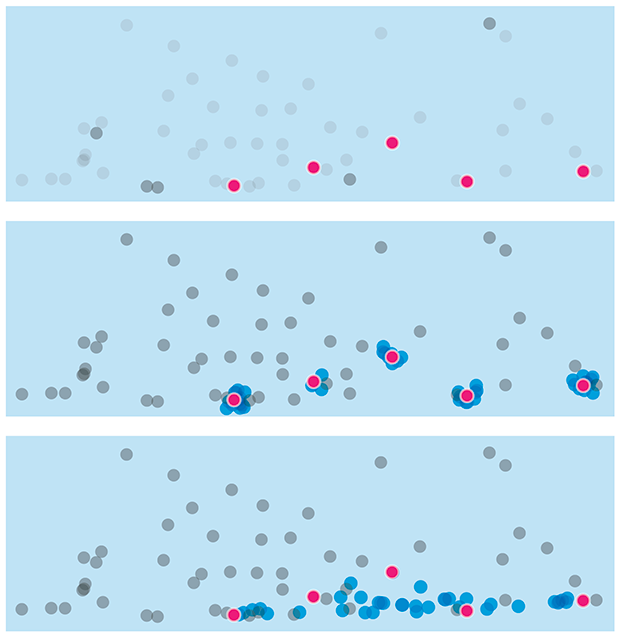

Propensity score matching

I don’t have good luck in the match points. —Rafael Nadal, Spanish tennis player

In many experimental designs, we need to keep in mind the possibility of confounding variables, which may give rise to bias in the estimate of the treatment effect.

If the control and experimental groups aren't matched (or, roughly, similar enough), this bias can arise.

Sometimes this can be dealt with by randomizing, which on average can balance this effect out. When randomization is not possible, propensity score matching is an excellent strategy to match control and experimental groups.
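To make the idea of matching concrete, here is a minimal sketch of greedy one-to-one nearest-neighbor matching on propensity scores. It is only one of several matching schemes and not necessarily the one used in the column. The function name, the caliper value and the scores are hypothetical.

```python
def match_on_propensity(treated_scores, control_scores, caliper=0.1):
    """Greedy 1:1 nearest-neighbor matching without replacement.

    Returns a list of (treated_index, control_index) pairs. Treated subjects
    with no unused control within the caliper are left unmatched.
    """
    available = set(range(len(control_scores)))
    pairs = []
    for i, score in enumerate(treated_scores):
        if not available:
            break
        # Closest unused control by absolute difference in propensity score.
        j = min(sorted(available), key=lambda k: abs(control_scores[k] - score))
        if abs(control_scores[j] - score) <= caliper:
            pairs.append((i, j))
            available.remove(j)
    return pairs


pairs = match_on_propensity([0.8, 0.3], [0.25, 0.5, 0.78, 0.1])
```

Controls that are never paired are dropped from the comparison, which is the cost of matching.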

Kurz, C.F., Krzywinski, M. & Altman, N. (2024) Points of significance: Propensity score matching. Nat. Methods 21:1770–1772.

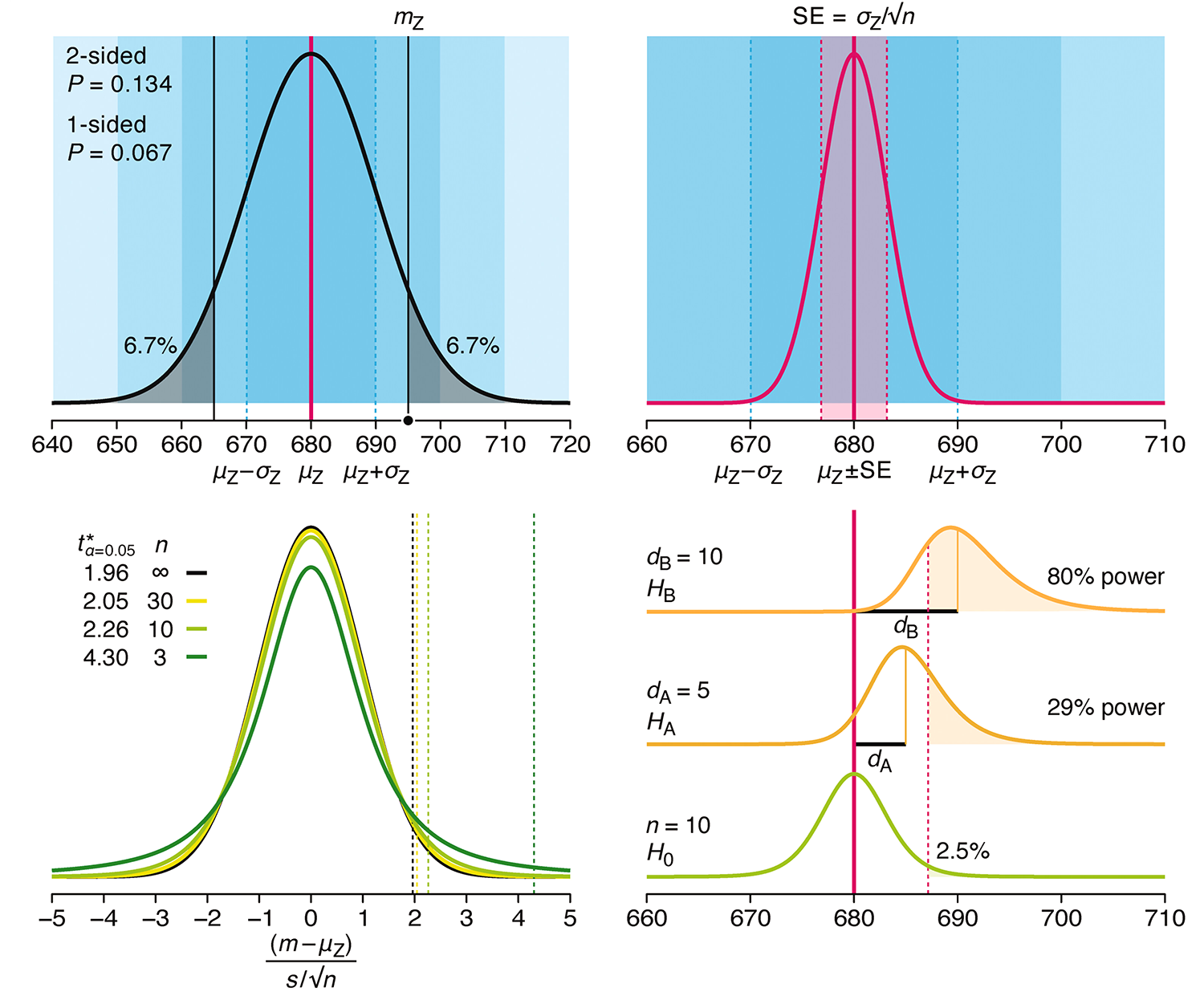

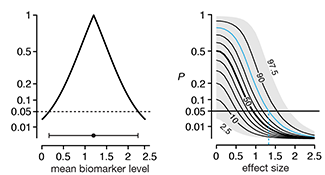

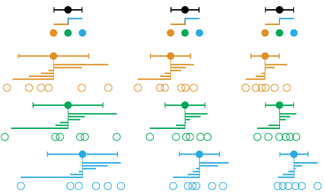

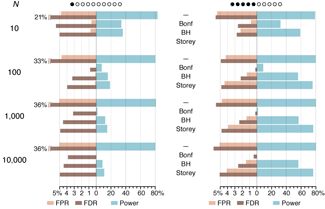

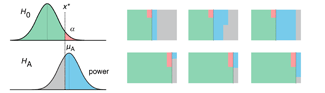

Understanding p-values and significance

P-values combined with estimates of effect size are used to assess the importance of experimental results. However, their interpretation can be invalidated by selection bias when testing multiple hypotheses, fitting multiple models or even informally selecting results that seem interesting after observing the data.

We offer an introduction to principled uses of p-values (targeted at the non-specialist) and identify questionable practices to be avoided.
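A short sketch of why testing many hypotheses invalidates a naive reading of p-values: if every null hypothesis is true and the tests are independent (both assumptions of this example), the chance of at least one "significant" result grows quickly with the number of tests. The function names are hypothetical.

```python
def familywise_error_rate(alpha, n_tests):
    """P(at least one false positive) across independent tests of true nulls."""
    return 1 - (1 - alpha) ** n_tests


def bonferroni_threshold(alpha, n_tests):
    """Per-test threshold that keeps the family-wise error rate at or below alpha."""
    return alpha / n_tests


# With 20 tests at alpha = 0.05, a false positive is more likely than not.
fwer_20 = familywise_error_rate(0.05, 20)
```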

Altman, N. & Krzywinski, M. (2024) Understanding p-values and significance. Laboratory Animals 58:443–446.

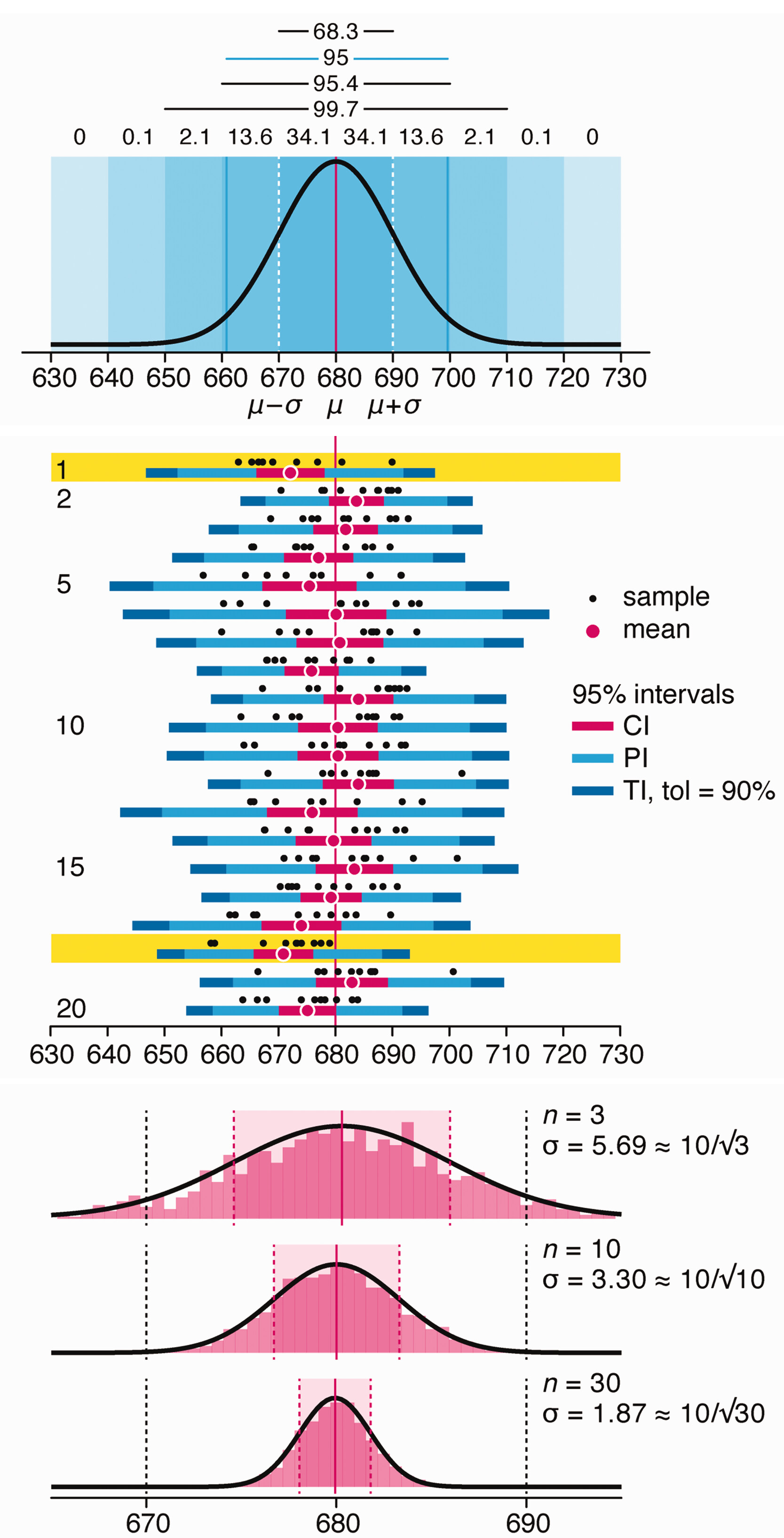

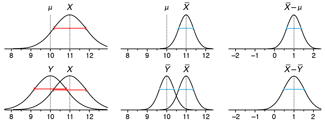

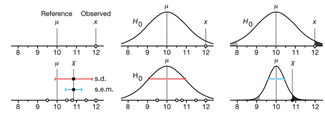

Depicting variability and uncertainty using intervals and error bars

Variability is inherent in most biological systems due to differences among members of the population. Two types of variation are commonly observed in studies: differences among samples and the “error” in estimating a population parameter (e.g. mean) from a sample. While these concepts are fundamentally very different, the associated variation is often expressed using similar notation—an interval that represents a range of values with a lower and upper bound.

In this article we discuss how common intervals are used (and misused).
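The distinction between the two kinds of variation can be sketched with a few lines of Python. The sample values are made up, and the interval uses a normal approximation (z = 1.96), which is an assumption of this example; for a sample this small a t-based multiplier would be more appropriate.

```python
import math
import statistics


def summarize(sample, z=1.96):
    """Return mean, standard deviation, standard error and an approximate 95% CI."""
    n = len(sample)
    mean = statistics.mean(sample)
    sd = statistics.stdev(sample)   # spread among the samples
    sem = sd / math.sqrt(n)         # uncertainty in the estimate of the mean
    ci = (mean - z * sem, mean + z * sem)
    return mean, sd, sem, ci


mean, sd, sem, ci = summarize([4, 6, 8, 10, 12])
```

The standard deviation describes the data and does not shrink with sample size, while the standard error and the confidence interval describe the estimate and do.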

Altman, N. & Krzywinski, M. (2024) Depicting variability and uncertainty using intervals and error bars. Laboratory Animals 58:453–456.

NASA to send our human genome discs to the Moon

We'd like to say a ‘cosmic hello’: mathematics, culture, palaeontology, art and science, and ... human genomes.

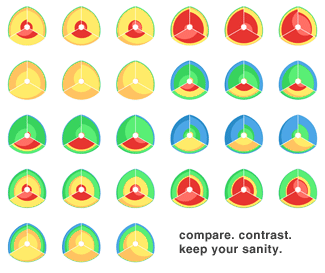

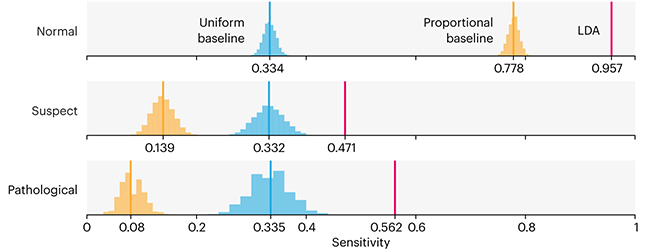

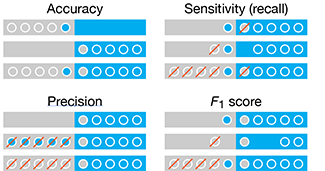

Comparing classifier performance with baselines

All animals are equal, but some animals are more equal than others. —George Orwell

This month, we will illustrate the importance of establishing a baseline performance level.

Baselines are typically generated independently for each dataset using very simple models. Their role is to set the minimum level of acceptable performance and help with comparing relative improvements in performance of other models.

Unfortunately, baselines are often overlooked and, in the presence of a class imbalance, must be established with care.
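As a minimal sketch of a baseline under class imbalance, the example below scores a model that always predicts the most common class. The 95:5 class split and the function names are hypothetical, and balanced accuracy is just one possible companion metric.

```python
from collections import Counter


def majority_baseline_accuracy(labels):
    """Accuracy of a model that always predicts the most common class."""
    counts = Counter(labels)
    return max(counts.values()) / len(labels)


def balanced_accuracy(labels, predictions):
    """Mean of the per-class recall."""
    recalls = []
    for c in sorted(set(labels)):
        idx = [i for i, y in enumerate(labels) if y == c]
        recalls.append(sum(predictions[i] == c for i in idx) / len(idx))
    return sum(recalls) / len(recalls)


labels = [0] * 95 + [1] * 5
always_zero = [0] * len(labels)
baseline_acc = majority_baseline_accuracy(labels)
baseline_bal = balanced_accuracy(labels, always_zero)
```

Here the trivial model reaches 95% accuracy but only 50% balanced accuracy, so a classifier reporting 96% accuracy on these data has barely improved on doing nothing.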

Megahed, F.M., Chen, Y-J., Jones-Farmer, A., Rigdon, S.E., Krzywinski, M. & Altman, N. (2024) Points of significance: Comparing classifier performance with baselines. Nat. Methods 21:546–548.

Happy 2024 π Day—

sunflowers ho!

Celebrate π Day (March 14th) and dig into the digit garden. Let's grow something.

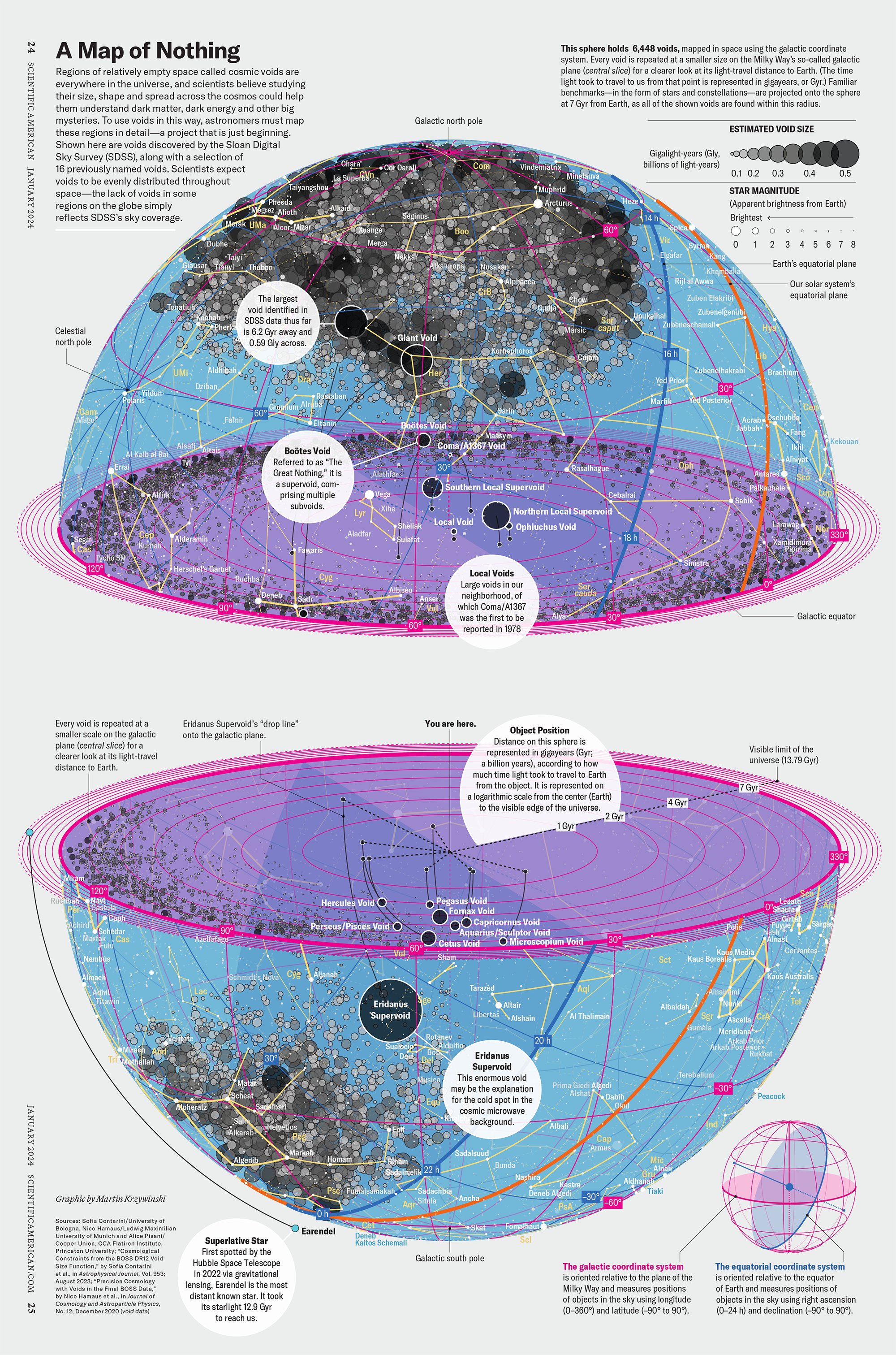

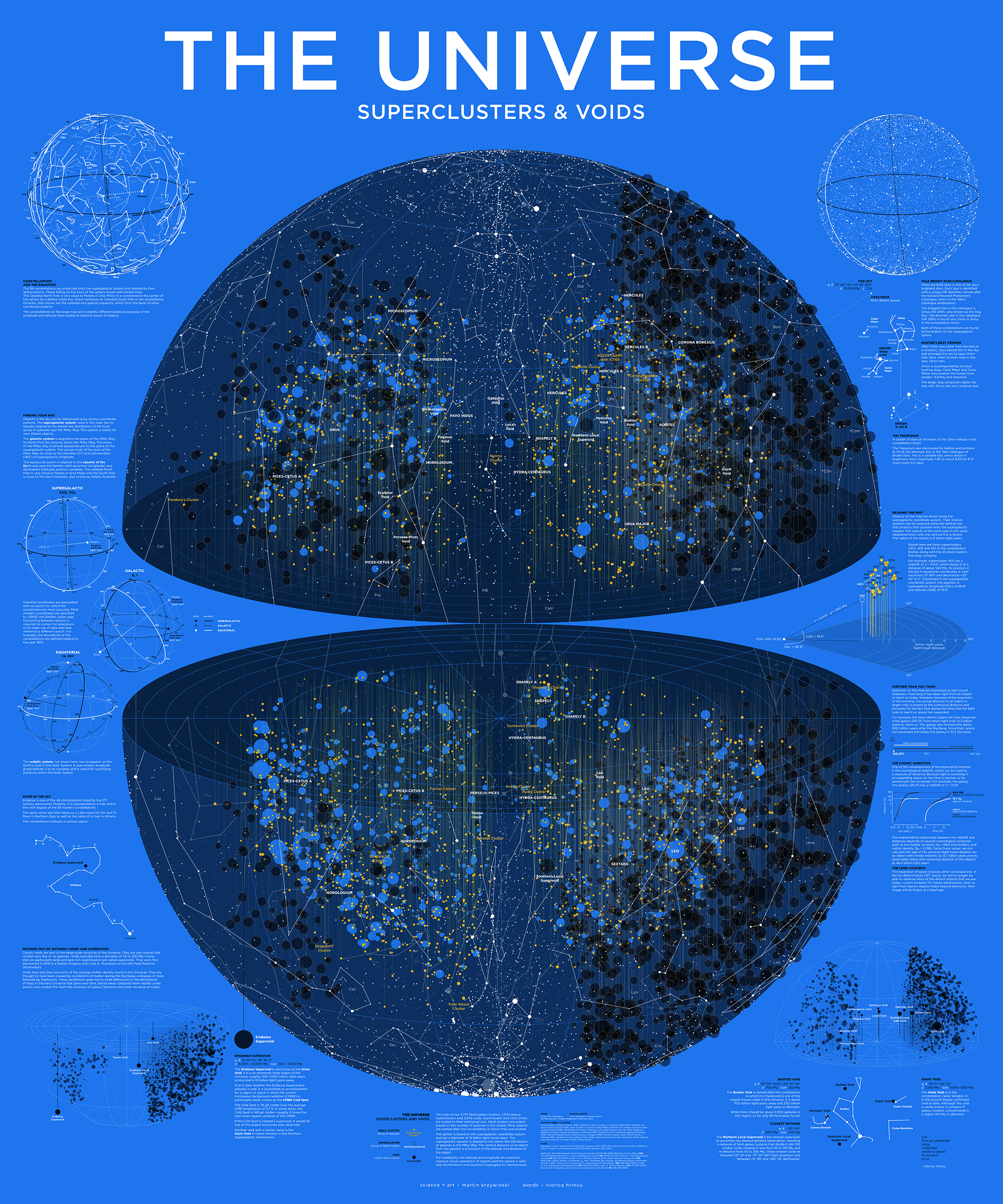

How Analyzing Cosmic Nothing Might Explain Everything

Huge empty areas of the universe called voids could help solve the greatest mysteries in the cosmos.

My graphic accompanying How Analyzing Cosmic Nothing Might Explain Everything in the January 2024 issue of Scientific American depicts the entire Universe in a two-page spread — full of nothing.

The graphic uses the latest data from SDSS 12 and is an update to my Superclusters and Voids poster.

Michael Lemonick (editor) explains on the graphic:

“Regions of relatively empty space called cosmic voids are everywhere in the universe, and scientists believe studying their size, shape and spread across the cosmos could help them understand dark matter, dark energy and other big mysteries.

To use voids in this way, astronomers must map these regions in detail—a project that is just beginning.

Shown here are voids discovered by the Sloan Digital Sky Survey (SDSS), along with a selection of 16 previously named voids. Scientists expect voids to be evenly distributed throughout space—the lack of voids in some regions on the globe simply reflects SDSS’s sky coverage.”

voids

Sofia Contarini, Alice Pisani, Nico Hamaus, Federico Marulli, Lauro Moscardini & Marco Baldi (2023) Cosmological Constraints from the BOSS DR12 Void Size Function. Astrophysical Journal 953:46.

Nico Hamaus, Alice Pisani, Jin-Ah Choi, Guilhem Lavaux, Benjamin D. Wandelt & Jochen Weller (2020) Journal of Cosmology and Astroparticle Physics 2020:023.

Sloan Digital Sky Survey Data Release 12

constellation figures

Alan MacRobert (Sky & Telescope), Paulina Rowicka/Martin Krzywinski (revisions & Microscopium)

stars

Hoffleit & Warren Jr. (1991) The Bright Star Catalog, 5th Revised Edition (Preliminary Version).

cosmology

H0 = 67.4 km/(Mpc·s), Ωm = 0.315, Ωv = 0.685. Planck Collaboration, Planck 2018 results. VI. Cosmological parameters (2018).

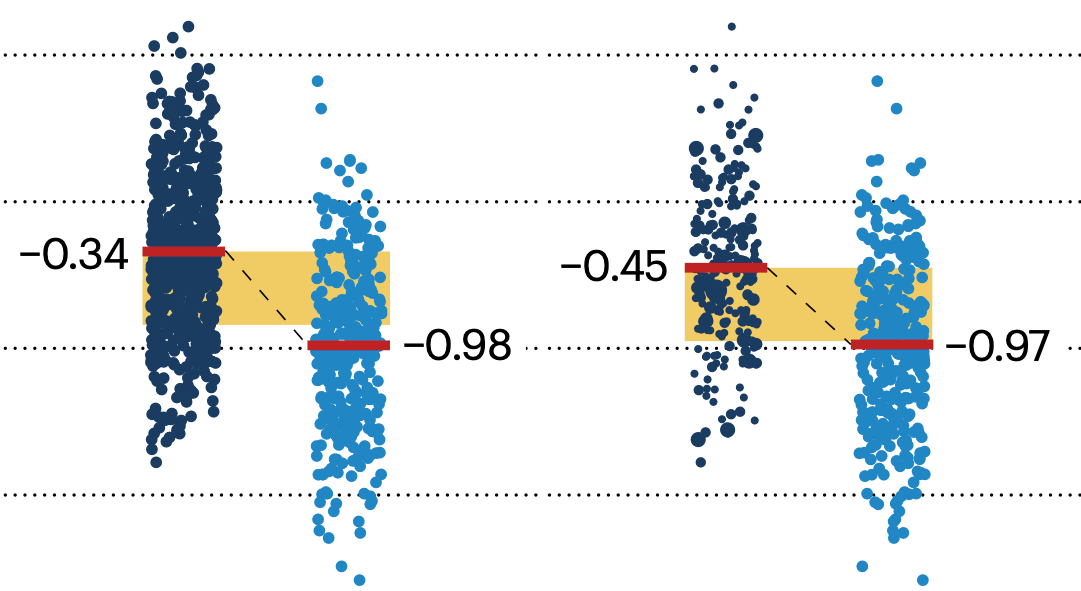

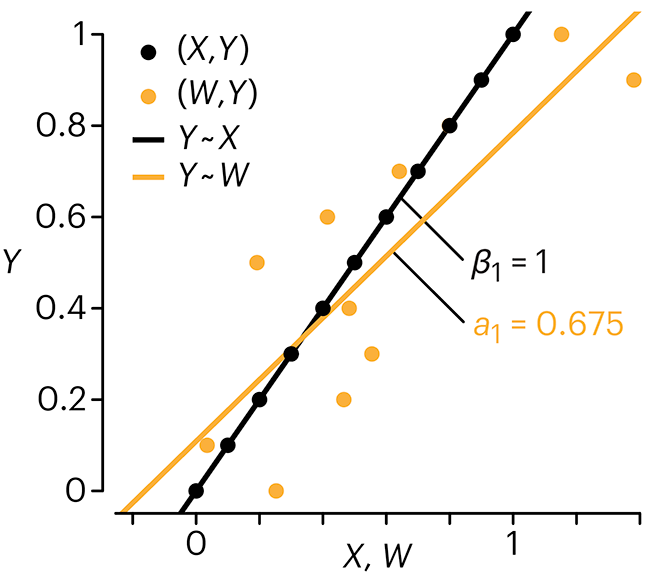

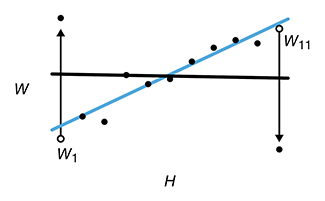

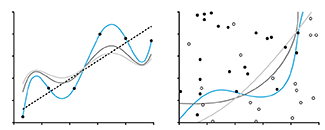

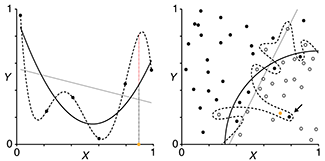

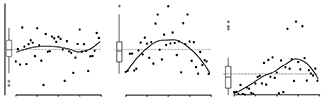

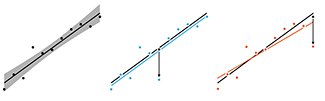

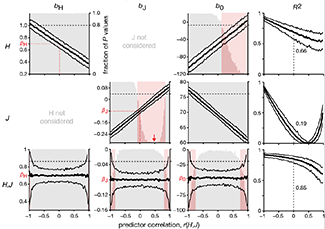

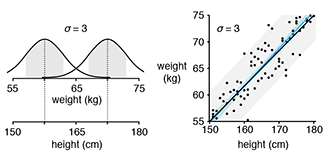

Error in predictor variables

It is the mark of an educated mind to rest satisfied with the degree of precision that the nature of the subject admits and not to seek exactness where only an approximation is possible. —Aristotle

In regression, the predictors are (typically) assumed to have known values that are measured without error.

Practically, however, predictors are often measured with error. This has a profound (but predictable) effect on the estimates of relationships among variables – the so-called “error in variables” problem.

Error in measuring the predictors is often ignored. In this column, we discuss when ignoring this error is harmless and when it can lead to large bias that can lead us to miss important effects.
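The predictable part of this effect can be written down for the simplest case. The block below is a sketch of the classical measurement error result for simple linear regression, assuming the error U is independent of both the true predictor X and the regression noise. The symbols W, U and λ are notation chosen for this example and are not necessarily the column's.

```latex
% Classical measurement error: we observe W instead of the true predictor X.
W = X + U, \qquad U \perp X, \qquad \operatorname{Var}(U) = \sigma_U^2

% True relationship, and the slope that least squares on W converges to.
Y = \beta_0 + \beta_1 X + \varepsilon
\quad\Longrightarrow\quad
\hat{\beta}_1 \;\to\; \lambda\,\beta_1,
\qquad
\lambda = \frac{\sigma_X^2}{\sigma_X^2 + \sigma_U^2} \le 1
```

When the measurement error variance is small relative to the spread of X, λ is close to 1 and ignoring the error is harmless. When it is comparable, the slope is pulled toward zero and a real effect can be missed.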

Altman, N. & Krzywinski, M. (2024) Points of significance: Error in predictor variables. Nat. Methods 21:4–6.

Background reading

Altman, N. & Krzywinski, M. (2015) Points of significance: Simple linear regression. Nat. Methods 12:999–1000.

Lever, J., Krzywinski, M. & Altman, N. (2016) Points of significance: Logistic regression. Nat. Methods 13:541–542.

Das, K., Krzywinski, M. & Altman, N. (2019) Points of significance: Quantile regression. Nat. Methods 16:451–452.

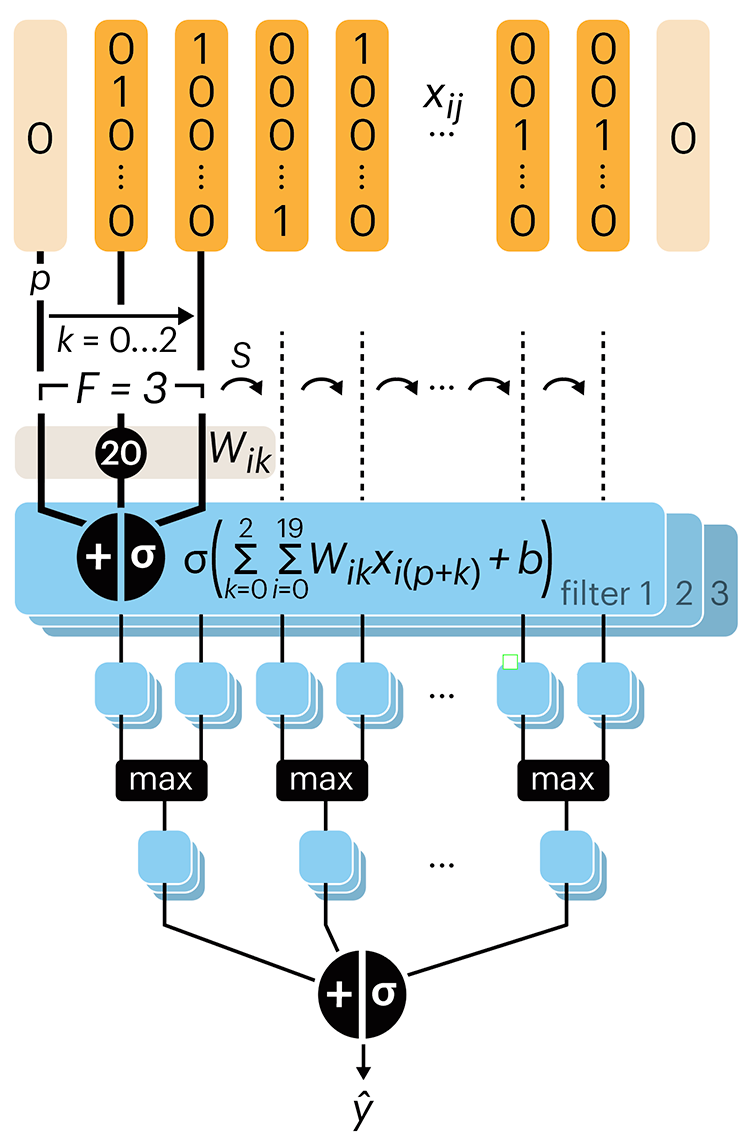

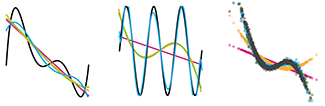

Convolutional neural networks

Nature uses only the longest threads to weave her patterns, so that each small piece of her fabric reveals the organization of the entire tapestry. – Richard Feynman

Following up on our Neural network primer column, this month we explore a different kind of network architecture: a convolutional network.

The convolutional network replaces the hidden layer of a fully connected network (FCN) with one or more filters (a kind of neuron that looks at the input within a narrow window).

Even though convolutional networks have far fewer neurons than an FCN, they can perform substantially better for certain kinds of problems, such as sequence motif detection.
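To show what a filter looking at the input within a narrow window means for sequence motif detection, here is a minimal sketch with a single hand-set filter. In a real network the filter weights are learned; the motif "TATA", the test sequence and the function names are all hypothetical.

```python
def one_hot(seq):
    """Encode a DNA string as a list of 4-element indicator vectors (A, C, G, T)."""
    order = "ACGT"
    return [[1.0 if base == b else 0.0 for b in order] for base in seq]


def scan(seq, filt):
    """Slide a filter (a list of 4-element weight vectors) along the sequence.

    Returns one activation per window position, passed through a ReLU.
    """
    x = one_hot(seq)
    width = len(filt)
    out = []
    for start in range(len(x) - width + 1):
        total = sum(
            x[start + i][j] * filt[i][j] for i in range(width) for j in range(4)
        )
        out.append(max(0.0, total))
    return out


# Hand-set filter that responds most strongly to the motif "TATA".
tata_filter = one_hot("TATA")
activations = scan("GGTATACC", tata_filter)
```

The same four-position filter is reused at every window, which is why the network needs far fewer weights than a fully connected layer over the whole sequence.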

Derry, A., Krzywinski, M. & Altman, N. (2023) Points of significance: Convolutional neural networks. Nature Methods 20:1269–1270.

Background reading

Derry, A., Krzywinski, M. & Altman, N. (2023) Points of significance: Neural network primer. Nature Methods 20:165–167.

Lever, J., Krzywinski, M. & Altman, N. (2016) Points of significance: Logistic regression. Nature Methods 13:541–542.

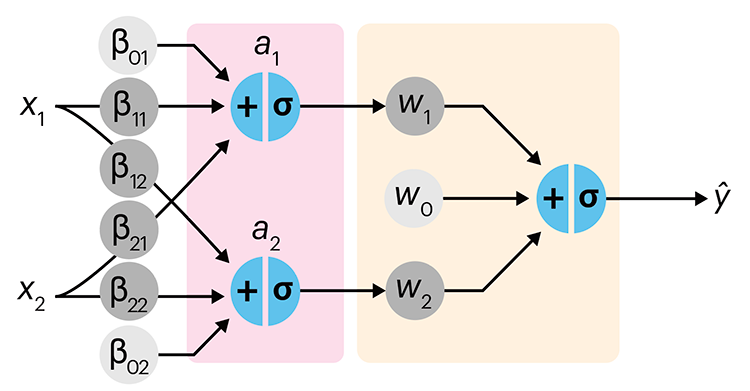

Neural network primer

Nature is often hidden, sometimes overcome, seldom extinguished. —Francis Bacon

In the first of a series of columns about neural networks, we introduce them with an intuitive approach that draws from our discussion about logistic regression.

Simple neural networks are just a chain of linear regressions. And, although neural network models can get very complicated, their essence can be understood in terms of relatively basic principles.

We show how neural network components (neurons) can be arranged in the network and discuss the ideas of hidden layers. Using a simple data set we show how even a 3-neuron neural network can already model relatively complicated data patterns.
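A minimal sketch of a 3-neuron network, with two hidden neurons feeding one output neuron. Each neuron is a linear combination of its inputs passed through a sigmoid, as in logistic regression. The weights are set by hand for illustration (they are not from the column and no training is shown) so that the output is high only for inputs in the middle of the range.

```python
import math


def sigmoid(z):
    """Logistic activation, mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def tiny_network(x):
    """Two hidden neurons and one output neuron with hand-picked weights."""
    h1 = sigmoid(10 * (x - 0.3))        # switches on above x = 0.3
    h2 = sigmoid(10 * (x - 0.7))        # switches on above x = 0.7
    return sigmoid(10 * (h1 - h2) - 5)  # high only when h1 is on and h2 is off


outputs = [tiny_network(x) for x in (0.0, 0.5, 1.0)]
```

A single logistic regression can only separate low inputs from high ones. Chaining three of them is already enough to pick out a band in the middle.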

Derry, A., Krzywinski, M. & Altman, N. (2023) Points of significance: Neural network primer. Nature Methods 20:165–167.

Background reading

Lever, J., Krzywinski, M. & Altman, N. (2016) Points of significance: Logistic regression. Nature Methods 13:541–542.

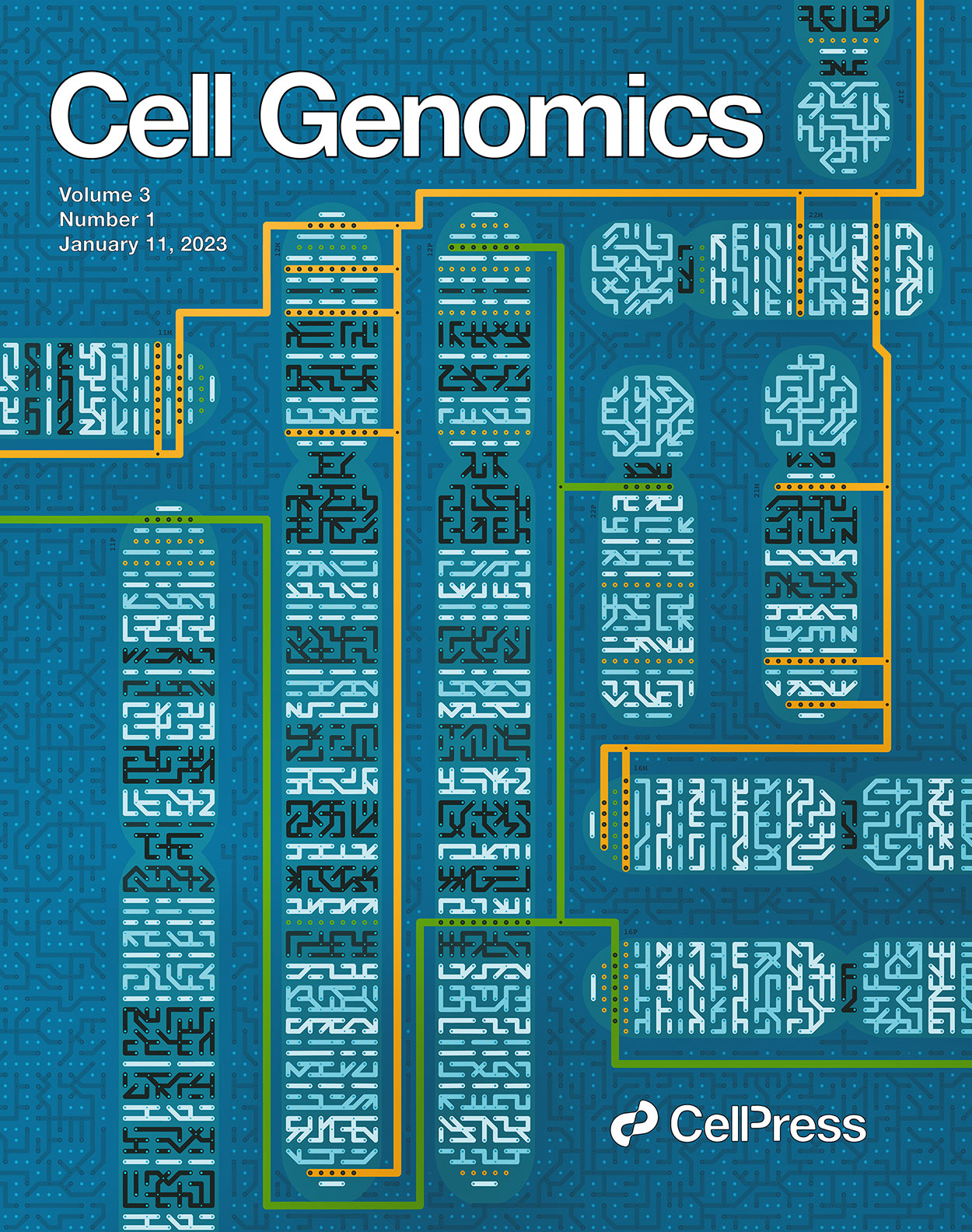

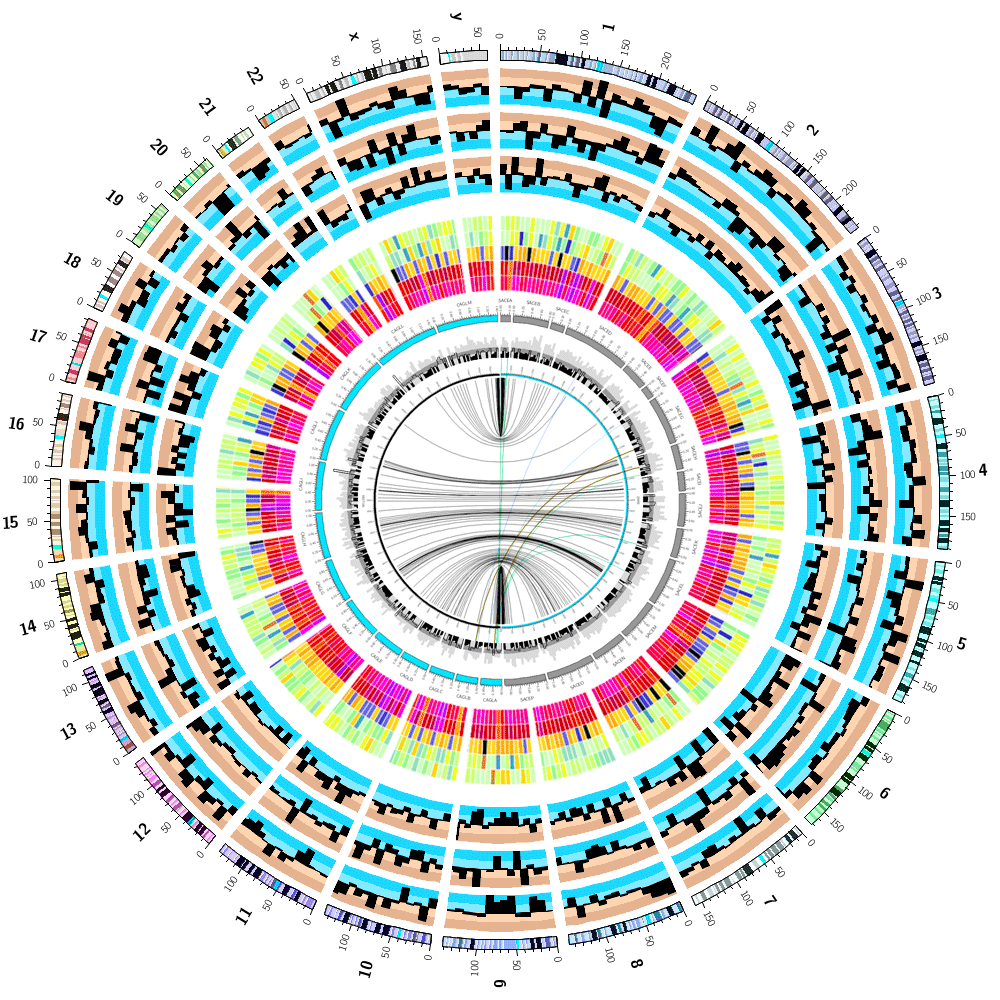

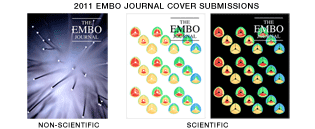

Cell Genomics cover

Our cover on the 11 January 2023 Cell Genomics issue depicts the process of determining the parent-of-origin using differential methylation of alleles at imprinted regions (iDMRs), imagined as a circuit.

Designed in collaboration with Carlos Urzua.

Akbari, V. et al. Parent-of-origin detection and chromosome-scale haplotyping using long-read DNA methylation sequencing and Strand-seq (2023) Cell Genomics 3(1).

Browse my gallery of cover designs.

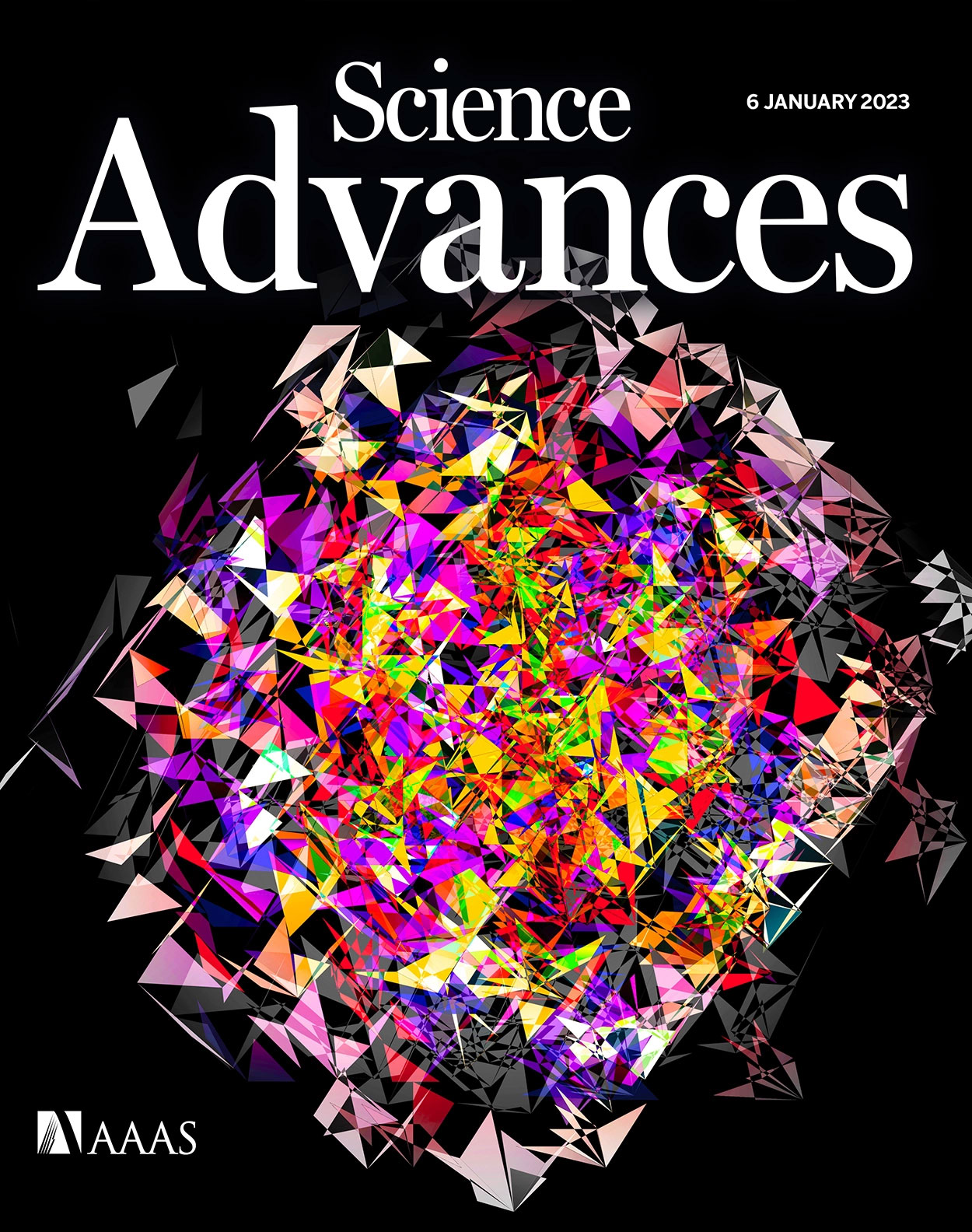

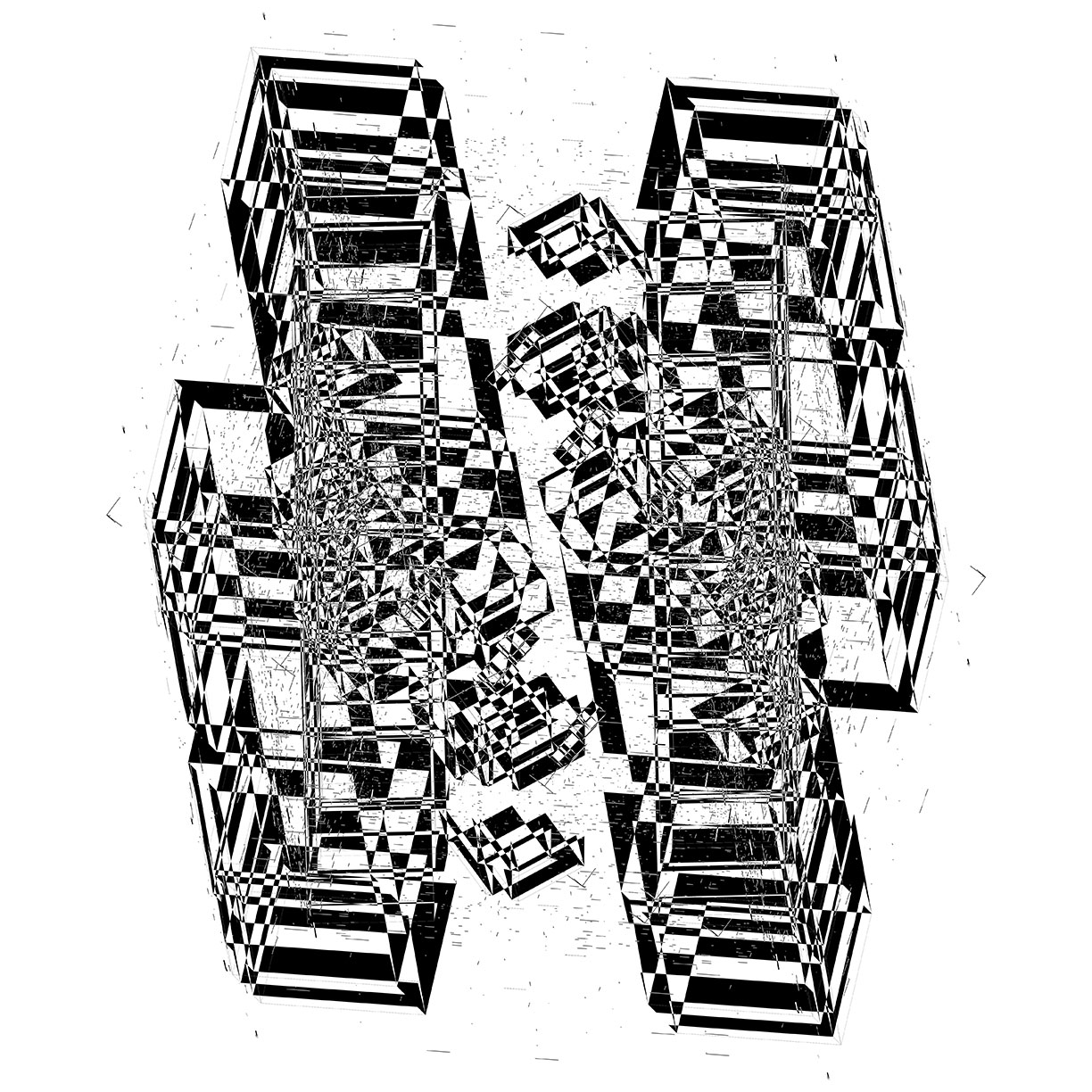

Science Advances cover

My cover design on the 6 January 2023 Science Advances issue depicts DNA sequencing read translation in high-dimensional space. The image shows 672 bases of sequencing barcodes, generated by three different single-cell RNA sequencing platforms, encoded as oriented triangles on the faces of three 7-dimensional cubes.

More details about the design.

Kijima, Y. et al. A universal sequencing read interpreter (2023) Science Advances 9.

Browse my gallery of cover designs.

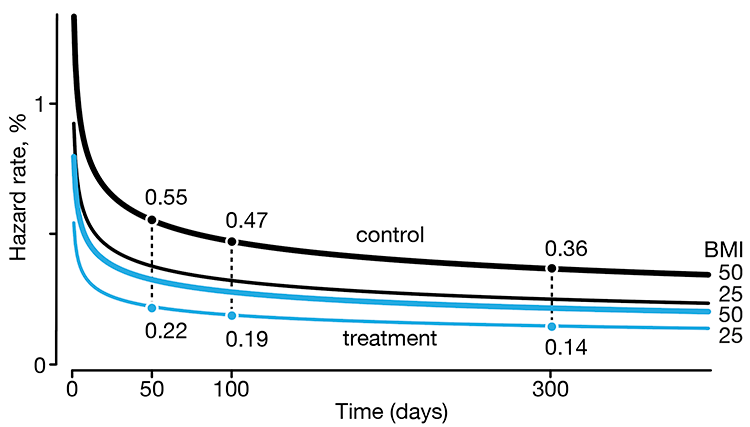

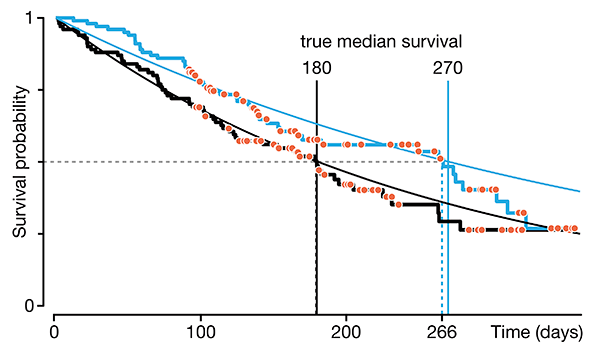

Regression modeling of time-to-event data with censoring

If you sit on the sofa for your entire life, you’re running a higher risk of getting heart disease and cancer. —Alex Honnold, American rock climber

In a follow-up to our Survival analysis — time-to-event data and censoring article, we look at how regression can be used to account for additional risk factors in survival analysis.

We explore accelerated failure time regression (AFTR) and the Cox Proportional Hazards model (Cox PH).
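For orientation, the two models are usually written as below. This is the standard textbook form, with notation chosen for this example: x is the vector of risk factors, h_0(t) a baseline hazard, and ε an error term whose distribution determines the AFT model.

```latex
% Cox proportional hazards: covariates scale a shared baseline hazard h_0(t).
h(t \mid x) = h_0(t)\,\exp(\beta^{\top} x)

% Accelerated failure time: covariates stretch or shrink the time scale.
\log T = \beta^{\top} x + \sigma\,\varepsilon
```

In the Cox model a coefficient acts on the hazard (risk per unit time), while in the AFT model it acts directly on the survival time.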

Dey, T., Lipsitz, S.R., Cooper, Z., Trinh, Q., Krzywinski, M. & Altman, N. (2022) Points of significance: Regression modeling of time-to-event data with censoring. Nature Methods 19:1513–1515.

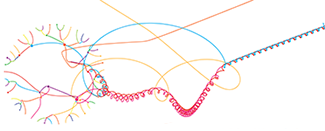

Music video for Max Cooper's Ascent

My 5-dimensional animation sets the visual stage for Max Cooper's Ascent from the album Unspoken Words. I have previously collaborated with Max on telling a story about infinity for his Yearning for the Infinite album.

I provide a walkthrough of the video, describe the animation system I created to generate the frames, and show you all the keyframes.

The video recently premiered on YouTube.

Renders of the full scene are available as NFTs.

Gene Cultures exhibit — art at the MIT Museum

I am more than my genome and my genome is more than me.

The MIT Museum reopened at its new location on 2nd October 2022. The new Gene Cultures exhibit featured my visualization of the human genome, which walks through the size and organization of the genome and some of the important structures.

Annals of Oncology cover

My cover design on the 1 September 2022 Annals of Oncology issue shows 570 individual cases of difficult-to-treat cancers. Each case shows the number and type of actionable genomic alterations that were detected and the length of therapies that resulted from the analysis.

Pleasance E et al. Whole-genome and transcriptome analysis enhances precision cancer treatment options (2022) Annals of Oncology 33:939–949.

Browse my gallery of cover designs.

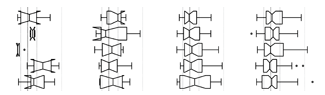

Survival analysis—time-to-event data and censoring

Love's the only engine of survival. —L. Cohen

We begin a series on survival analysis in the context of its two key complications: skew (which calls for the use of probability distributions, such as the Weibull, that can accommodate skew) and censoring (required because we almost always fail to observe the event in question for all subjects).

We discuss right, left and interval censoring and how mishandling censoring can lead to bias and loss of sensitivity in tests that probe for differences in survival times.
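One standard way to handle right censoring without discarding subjects is the Kaplan-Meier estimator, sketched below. It is offered as an illustration and is not necessarily the approach taken in the column. The function name and the toy follow-up times are hypothetical.

```python
def kaplan_meier(times, observed):
    """Kaplan-Meier survival estimate with right censoring.

    times:    follow-up time for each subject
    observed: True if the event was seen, False if the subject was censored
    Returns a list of (time, survival_probability) at each event time.
    """
    survival = 1.0
    curve = []
    for t in sorted(set(times)):
        # Censored subjects still count as at risk up to their censoring time.
        at_risk = sum(1 for u in times if u >= t)
        events = sum(1 for u, d in zip(times, observed) if u == t and d)
        if events:
            survival *= 1 - events / at_risk
            curve.append((t, survival))
    return curve


# Four subjects; the second is censored at time 2.
curve = kaplan_meier([1, 2, 3, 4], [True, False, True, True])
```

Treating the censored subject as if the event happened at time 2, or dropping the subject entirely, would each give a different and biased curve.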

Dey, T., Lipsitz, S.R., Cooper, Z., Trinh, Q., Krzywinski, M. & Altman, N. (2022) Points of significance: Survival analysis—time-to-event data and censoring. Nature Methods 19:906–908.

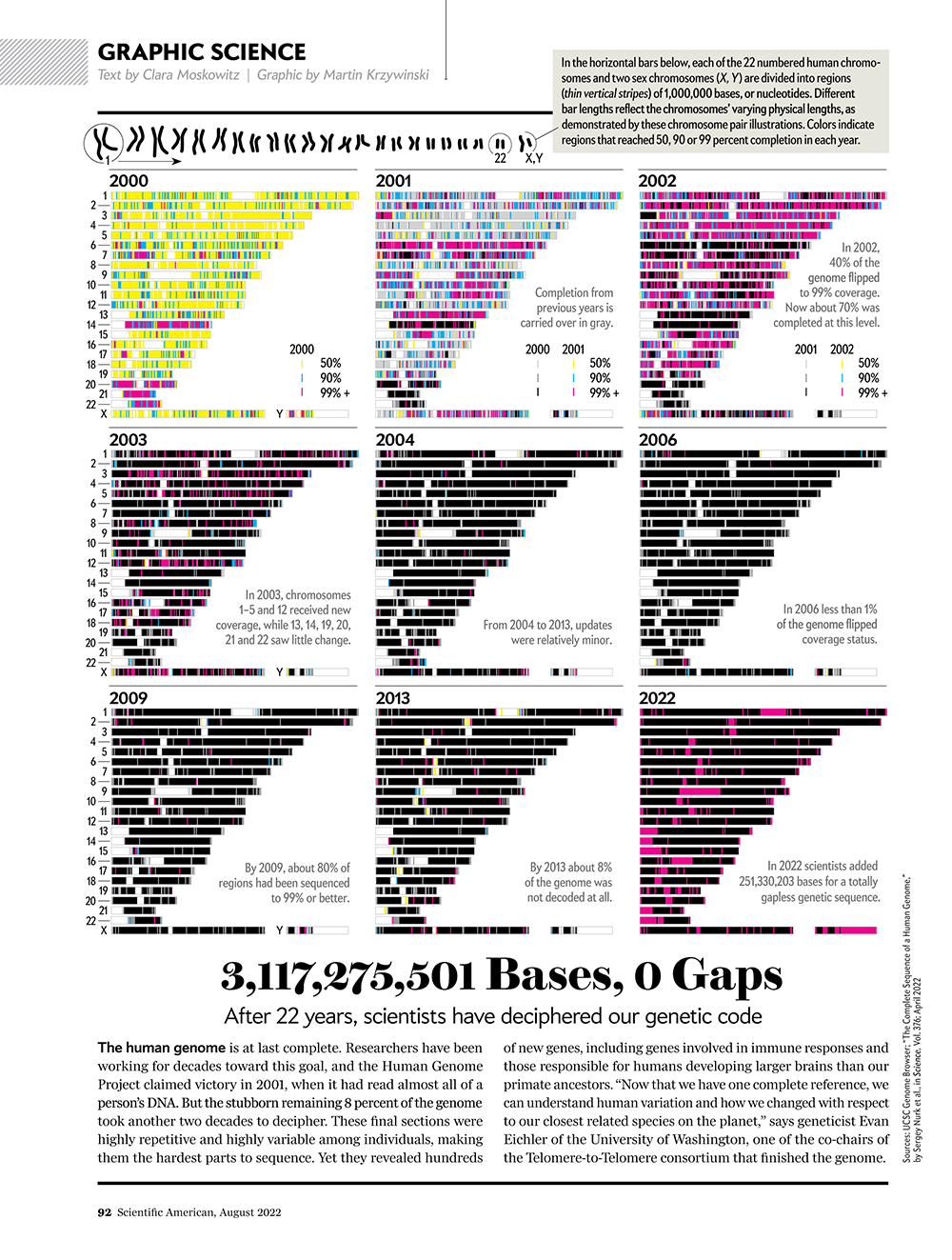

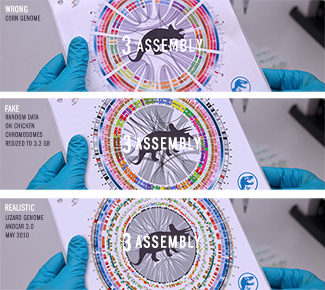

3,117,275,501 Bases, 0 Gaps

See How Scientists Put Together the Complete Human Genome.

My graphic in Scientific American's Graphic Science section in the August 2022 issue shows the full history of the human genome assembly — from its humble shotgun beginnings to the gapless telomere-to-telomere assembly.

Read about the process and methods behind the creation of the graphic.

See all my Scientific American Graphic Science visualizations.

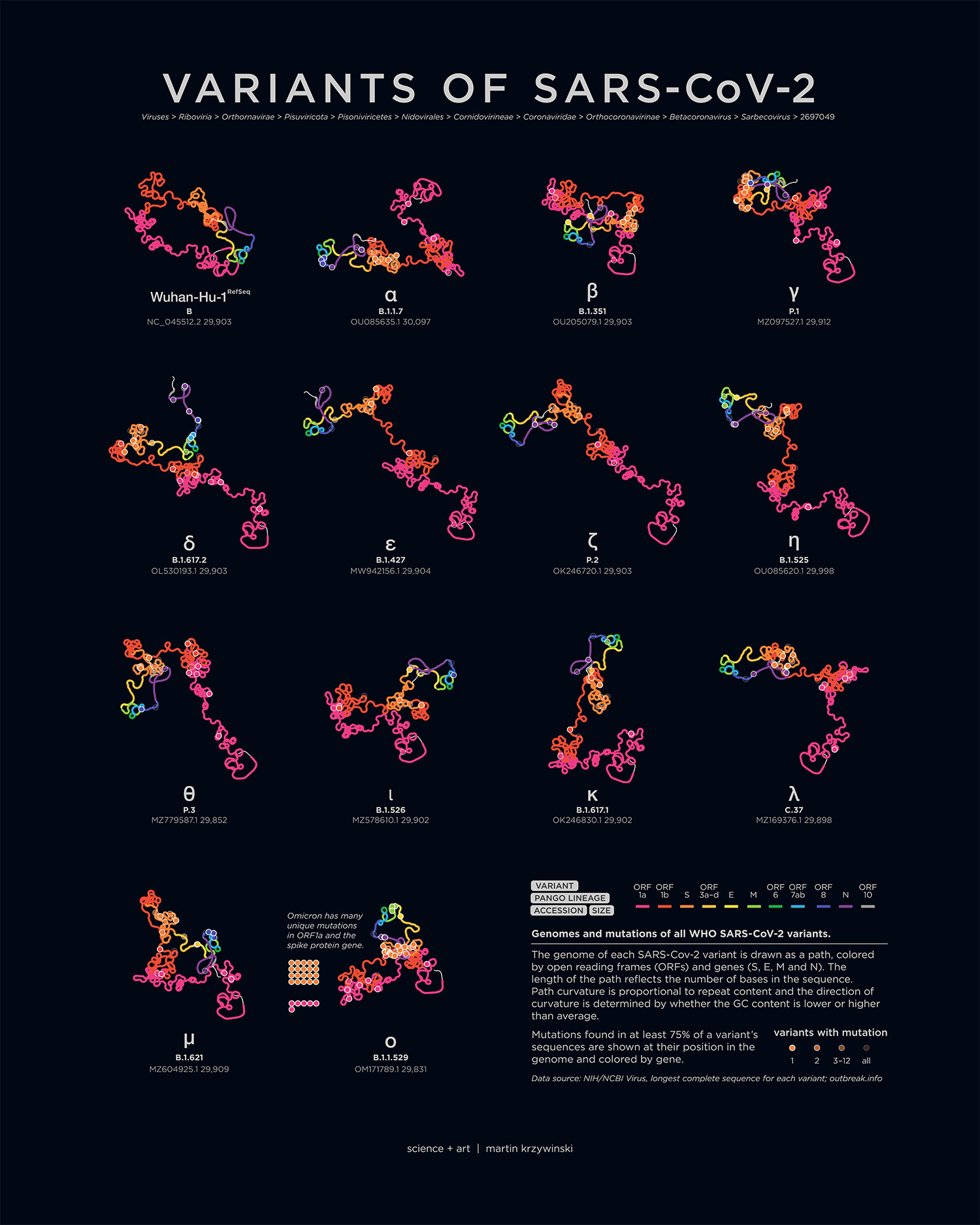

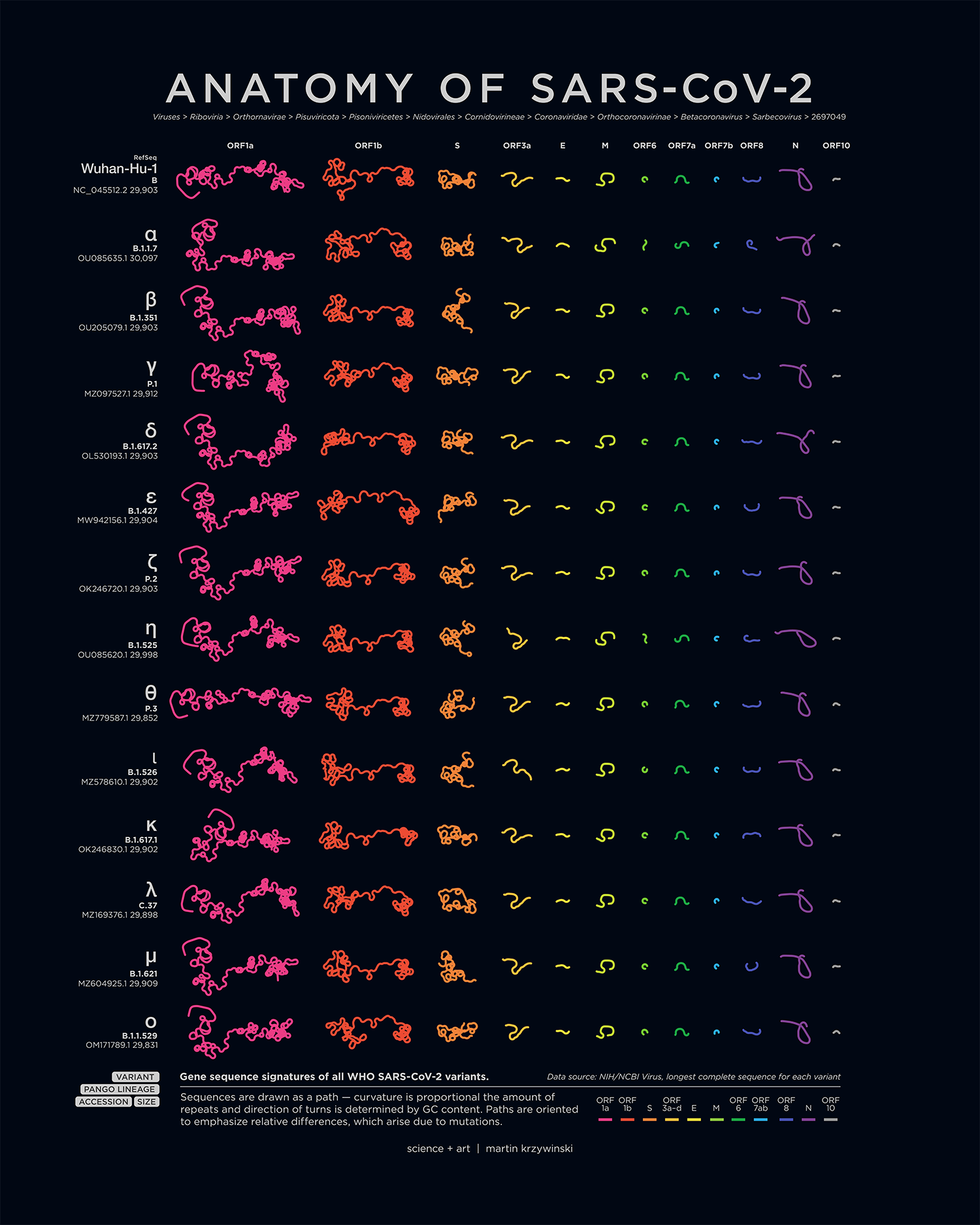

Anatomy of SARS-CoV-2

My poster showing the genome structure and position of mutations on all SARS-CoV-2 variants appears in the March/April 2022 issue of American Scientist.

An accompanying piece breaks down the anatomy of each genome — by gene and ORF, oriented to emphasize relative differences that are caused by mutations.

Cancer Cell cover

My cover design on the 11 April 2022 Cancer Cell issue depicts cellular heterogeneity as a kaleidoscope generated from immunofluorescence staining of the glial and neuronal markers MBP and NeuN (respectively) in a GBM patient-derived explant.

LeBlanc VG et al. Single-cell landscapes of primary glioblastomas and matched explants and cell lines show variable retention of inter- and intratumor heterogeneity (2022) Cancer Cell 40:379–392.E9.

Browse my gallery of cover designs.

Nature Biotechnology cover

My cover design on the 4 April 2022 Nature Biotechnology issue is an impression of a phylogenetic tree of over 200 million sequences.

Konno N et al. Deep distributed computing to reconstruct extremely large lineage trees (2022) Nature Biotechnology 40:566–575.

Browse my gallery of cover designs.

Nature cover — Gene Genie

My cover design on the 17 March 2022 Nature issue depicts the evolutionary properties of sequences at the extremes of the evolvability spectrum.

Vaishnav ED et al. The evolution, evolvability and engineering of gene regulatory DNA (2022) Nature 603:455–463.

Browse my gallery of cover designs.

Happy 2022 π Day—

three one four: a number of notes

Celebrate π Day (March 14th) and finally hear what you've been missing.

“three one four: a number of notes” is a musical exploration of how we think about mathematics and how we feel about mathematics. It tells stories from the very beginning (314…) to the very (known) end of π (...264) as well as math (Wallis Product) and math jokes (Feynman Point), repetition (nn) and zeroes (null).

The album is scored for solo piano in the style of 20th century classical music – each piece has a distinct personality, drawn from styles of Boulez, Feldman, Glass, Ligeti, Monk, and Satie.

Each piece is accompanied by a piku (or πku), a poem whose syllable count is determined by a specific sequence of digits from π.

Check out art from previous years: 2013 π Day, 2014 π Day, 2015 π Day, 2016 π Day, 2017 π Day, 2018 π Day, 2019 π Day, 2020 π Day and 2021 π Day.

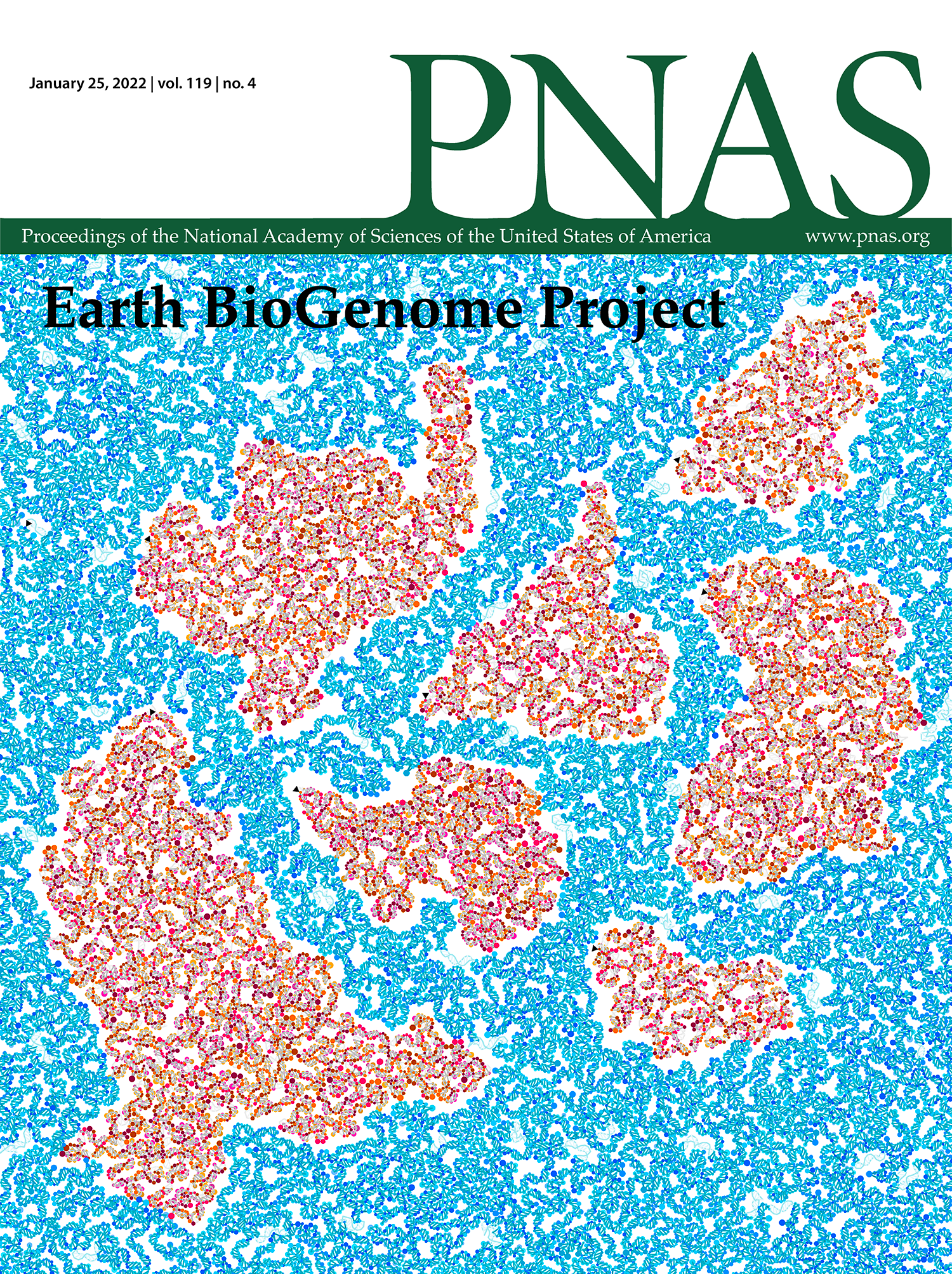

PNAS Cover — Earth BioGenome Project

My design appears on the 25 January 2022 PNAS issue.

The cover shows a view of Earth that captures the vision of the Earth BioGenome Project — understanding and conserving genetic diversity on a global scale. Continents from the Authagraph projection, which preserves areas and shapes, are represented as a double helix of 32,111 bases. Short sequences of 806 unique species, sequenced as part of EBP-affiliated projects, are mapped onto the double helix of the continent (or ocean) where the species is commonly found. The length of the sequence is the same for each species on a continent (or ocean) and the sequences are separated by short gaps. Individual bases of the sequence are colored by dots. Species appear along the path in alphabetical order (by Latin name) and the first base of the first species is identified by a small black triangle.

Lewin HA et al. The Earth BioGenome Project 2020: Starting the clock. (2022) PNAS 119(4) e2115635118.

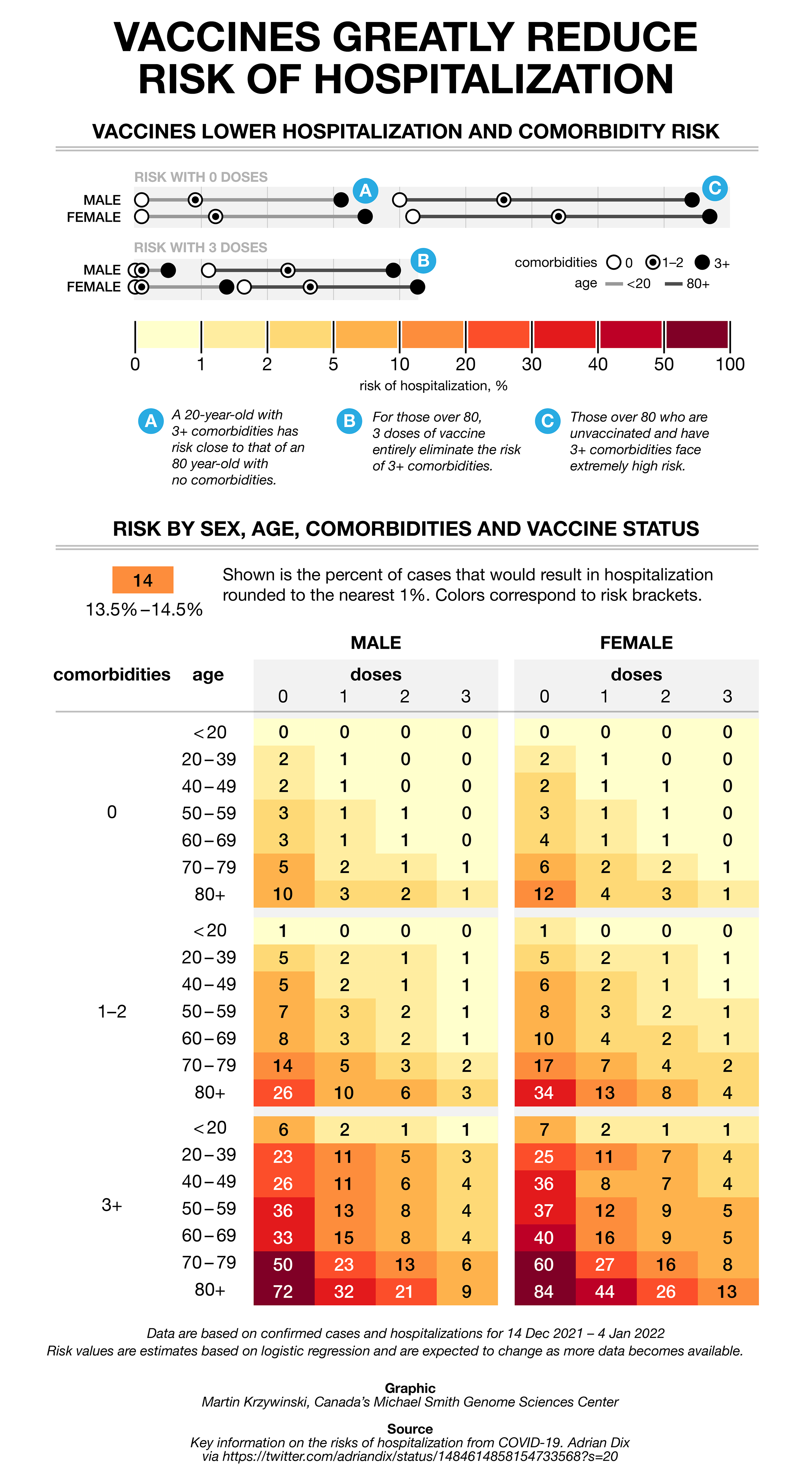

The COVID charts — hospitalization rates

As part of the COVID Charts series, I fix a muddled and storyless graphic tweeted by Adrian Dix, British Columbia's Health Minister.

I show you how to fix color schemes to make them colorblind-accessible and effective in revealing patterns, how to reduce redundancy in labels (a key but overlooked part of many visualizations) and how to extract a story out of a table to frame the narrative.

The class imbalance problem

The exception proves the rule.

But when one class is rare, evaluating a classifier using accuracy can be misleading because accuracy can vary across classes. This is the class imbalance problem.

We discuss how a data set can be rebalanced by removing data (undersampling) or adding synthetic data (oversampling). This must be done with care: undersampling can result in the loss of information and oversampling can lead to overfitting.

We look at various resampling methods (e.g. SMOTE) and explore how they influence performance of a classifier as the imbalance ratio increases.
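The simplest form of rebalancing can be sketched as follows. Note that this example duplicates existing minority rows at random (random oversampling) and is not SMOTE, which instead synthesizes new points between neighbors. The function name and the toy data are hypothetical.

```python
import random
from collections import Counter


def random_oversample(rows, labels, seed=0):
    """Duplicate randomly chosen minority rows until all classes are equal in size."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    new_rows, new_labels = list(rows), list(labels)
    for cls, n in counts.items():
        pool = [r for r, y in zip(rows, labels) if y == cls]
        # Add copies drawn with replacement from the original rows of this class.
        for _ in range(target - n):
            new_rows.append(rng.choice(pool))
            new_labels.append(cls)
    return new_rows, new_labels


rows = list(range(10))
labels = [0] * 8 + [1] * 2
balanced_rows, balanced_labels = random_oversample(rows, labels)
```

Because the added rows are exact copies, a flexible classifier can memorize them, which is the overfitting risk mentioned above. Resampling should be applied to the training data only, never to the data used for evaluation.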

Megahed, F.M., Chen, Y-J., Megahed, A., Ong, Y., Altman, N. & Krzywinski, M. (2021) Points of significance: The class imbalance problem. Nature Methods 18:1270–1272.

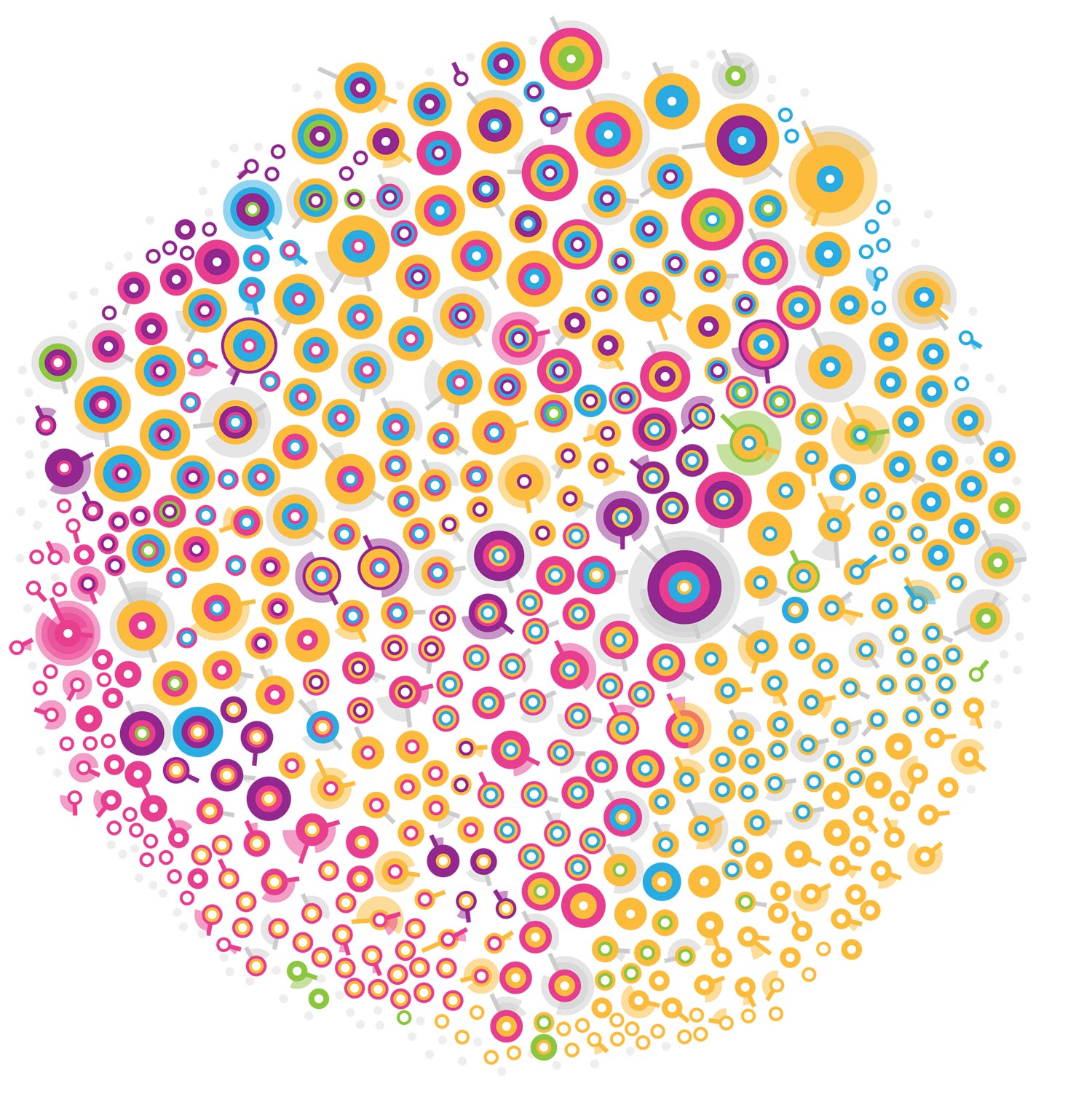

Science cover — The Human Genome

My cover design on the 24 Sep 2021 Science issue depicts the human genome as a spiral (scale: 1 million bases per centimeter), with colored segments representing different chromosomes. Circle size denotes the number of genes associated with Mendelian disorders and hollow circles indicate the number of mutation clusters from a pan-cancer analysis.

Browse my gallery of cover designs.

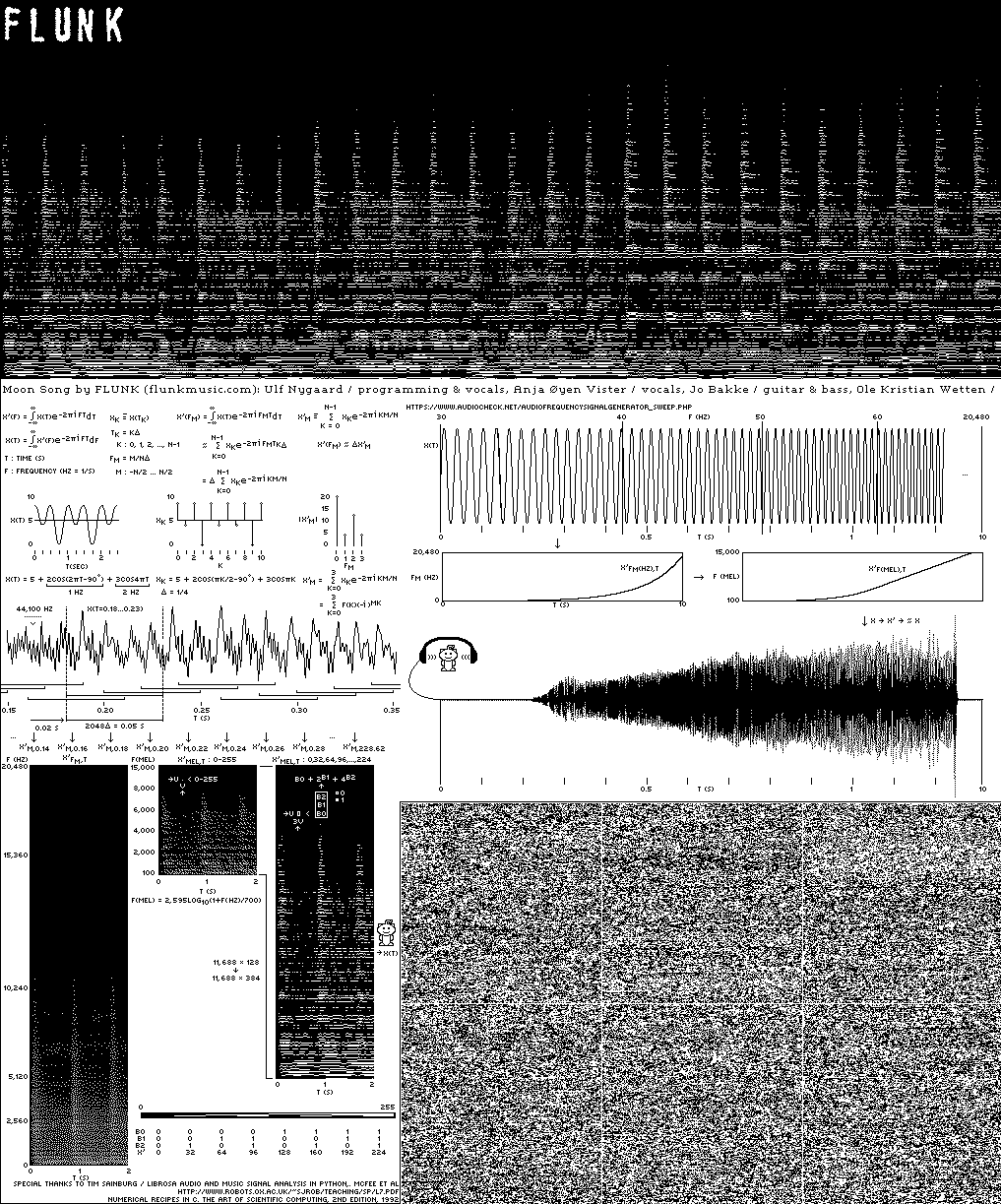

Music for the Moon: Flunk's 'Down Here / Moon Above'

The Sanctuary Project is a Lunar vault of science and art. It includes two fully sequenced human genomes, sequenced and assembled by us at Canada's Michael Smith Genome Sciences Centre.

The first disc includes a song composed by Flunk for the (eventual) trip to the Moon.

But how do you send sound to space? I describe the inspiration, process and art behind the work.

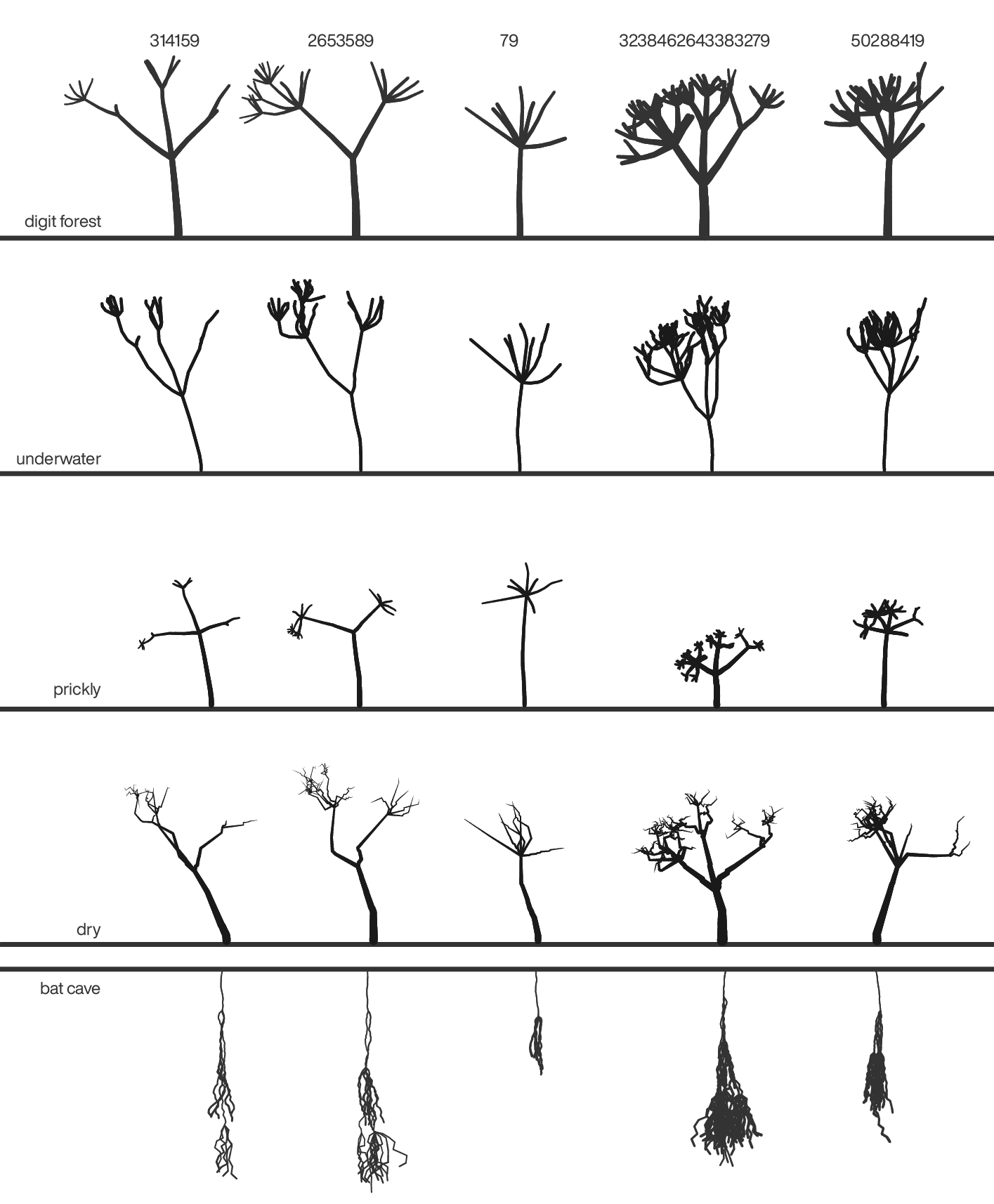

Happy 2021 π Day—

A forest of digits

Celebrate π Day (March 14th) and finally see the digits through the forest.

This year is full of botanical whimsy. A Lindenmayer system forest – deterministic but always changing. Feel free to stop and pick the flowers from the ground.

And things can get crazy in the forest.

Check out art from previous years: 2013 π Day, 2014 π Day, 2015 π Day, 2016 π Day, 2017 π Day, 2018 π Day and 2019 π Day.

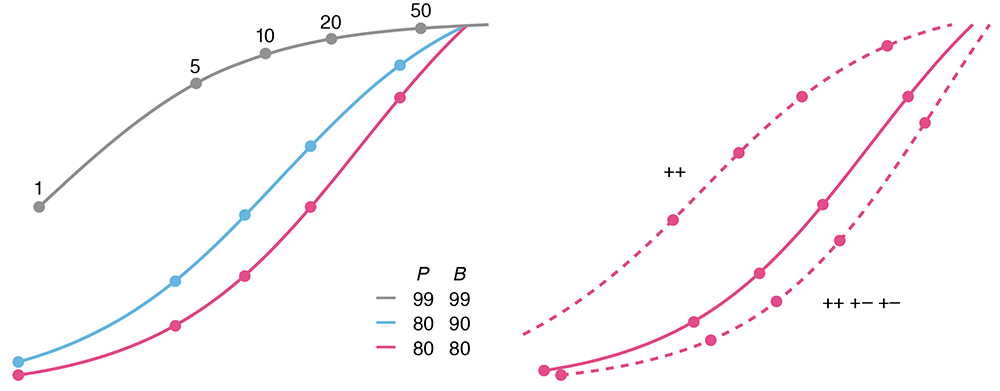

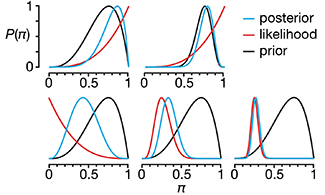

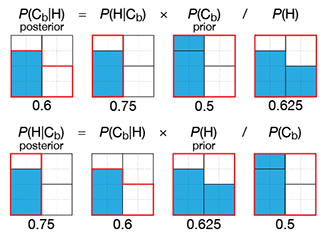

Testing for rare conditions

All that glitters is not gold. —W. Shakespeare

The sensitivity and specificity of a test do not necessarily correspond to its error rate. This becomes critically important when testing for a rare condition: a positive result from a test with 99% sensitivity and specificity has an even chance of being wrong when the condition prevalence is 1%.

We discuss the positive predictive value (PPV) and how practices such as screening can increase it.
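The arithmetic behind the 99% and 1% example follows directly from Bayes' theorem. The function name is hypothetical, and the 10% prevalence in the second call is a made-up figure standing in for a higher-risk subgroup.

```python
def positive_predictive_value(sensitivity, specificity, prevalence):
    """P(condition present | positive test)."""
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    return true_pos / (true_pos + false_pos)


# Rare condition: half of all positive results are false positives.
ppv_rare = positive_predictive_value(0.99, 0.99, 0.01)

# Same test applied to a group in which the condition is more common.
ppv_enriched = positive_predictive_value(0.99, 0.99, 0.10)
```

At 1% prevalence the true positives (0.99 × 0.01) and false positives (0.01 × 0.99) are equally numerous, so the PPV is 0.5. Raising the prevalence among those tested raises the PPV without changing the test itself.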

Altman, N. & Krzywinski, M. (2021) Points of significance: Testing for rare conditions. Nature Methods 18:224–225.

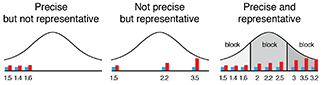

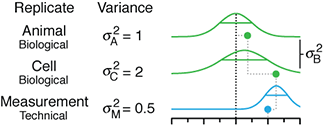

Standardization fallacy

We demand rigidly defined areas of doubt and uncertainty! —D. Adams

A popular notion about experiments is that it's good to keep variability in subjects low to limit the influence of confounding factors. This is called standardization.

Unfortunately, although standardization increases power, it can induce unrealistically low variability and lead to results that do not generalize to the population of interest and that may, in fact, be irreproducible.

Not paying attention to these details and thinking (or hoping) that standardization is always good is the "standardization fallacy". In this column, we look at how standardization can be balanced with heterogenization to avoid this thorny issue.

Voelkl, B., Würbel, H., Krzywinski, M. & Altman, N. (2021) Points of significance: Standardization fallacy. Nature Methods 18:5–6.

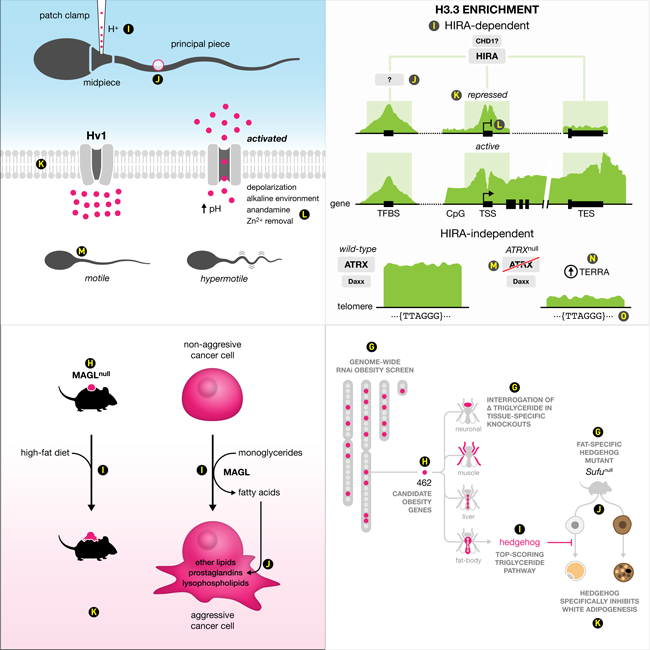

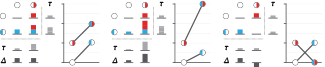

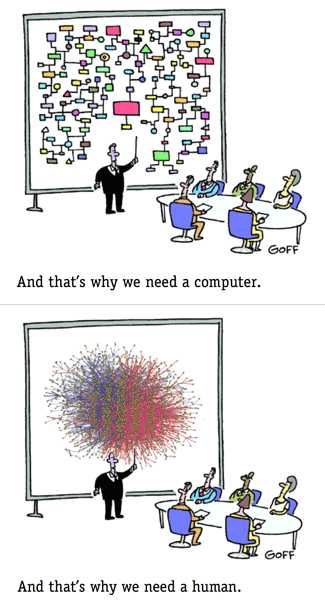

Graphical Abstract Design Guidelines

Clear, concise, legible and compelling.

Making a scientific graphical abstract? Refer to my practical design guidelines and redesign examples to improve organization, design and clarity of your graphical abstracts.

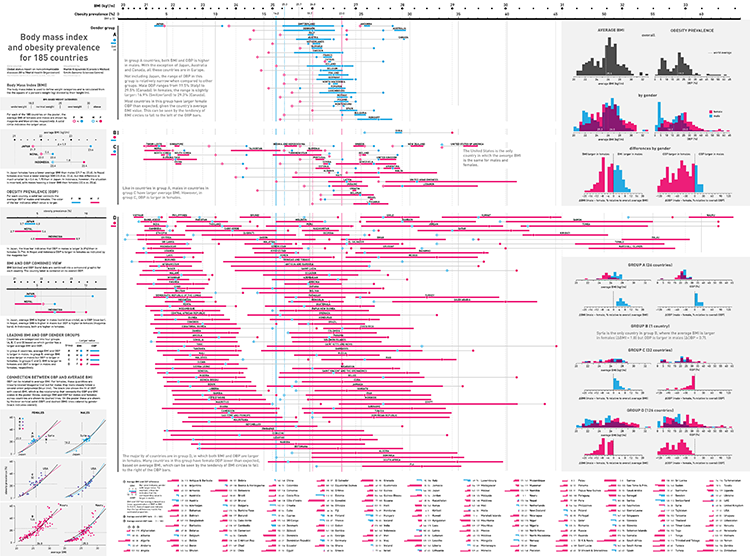

"This data might give you a migrane"

An in-depth look at my process of reacting to a bad figure — how I design a poster and tell data stories.

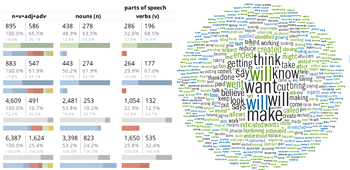

He said, he said — a word analysis of the 2020 Presidential Debates

Building on the method I used to analyze the 2008, 2012 and 2016 U.S. Presidential and Vice Presidential debates, I explore word usage in the 2020 Debates between Donald Trump and Joe Biden.

Points of Significance celebrates 50th column

We are celebrating the publication of our 50th column!

To all our coauthors — thank you and see you in the next column!

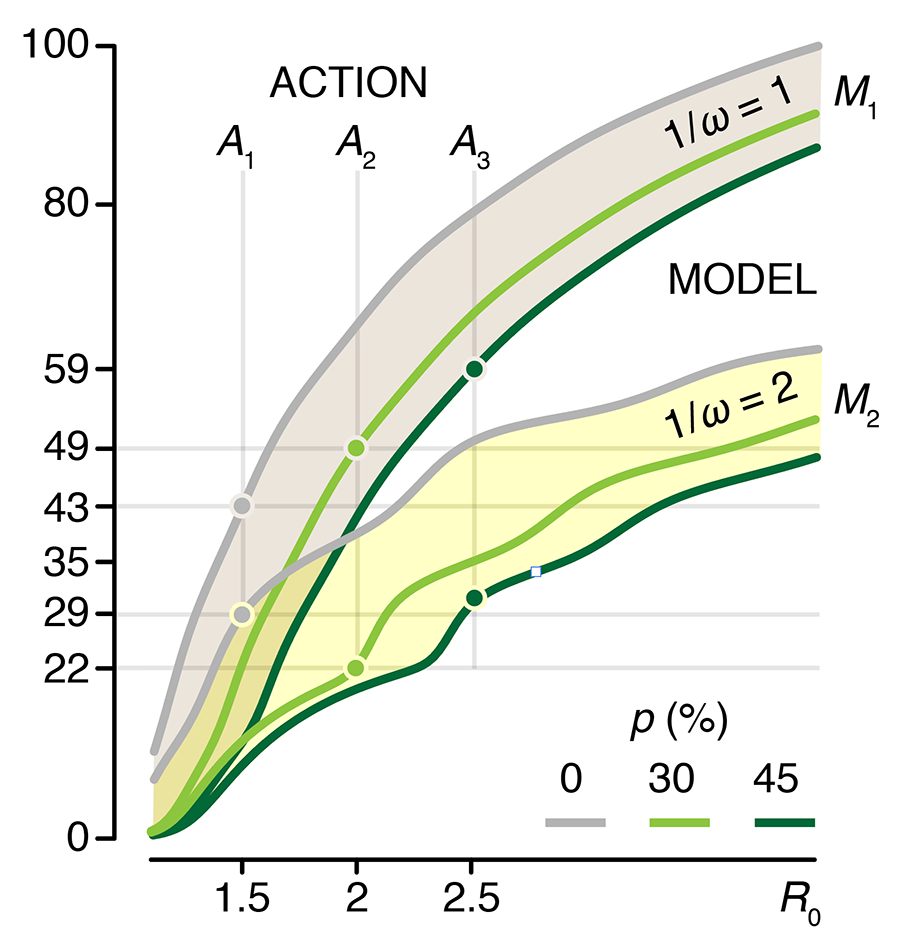

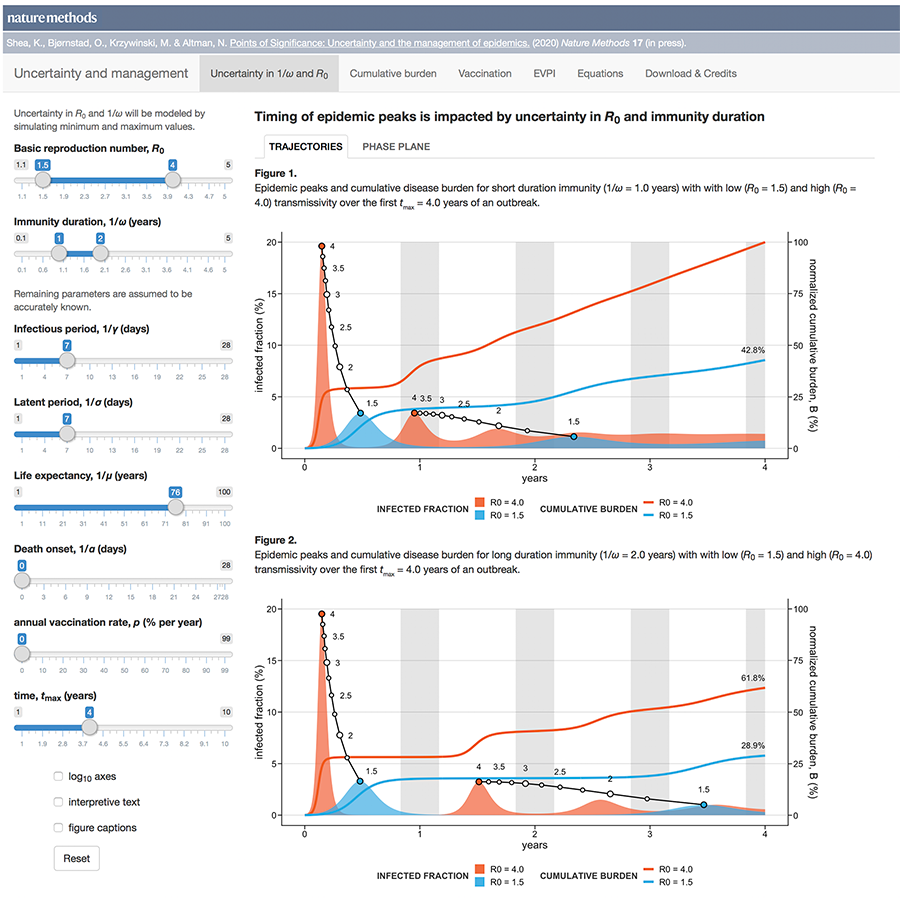

Uncertainty and the management of epidemics

When modelling epidemics, some uncertainties matter more than others.

Public health policy is always hampered by uncertainty. During a novel outbreak, nearly everything will be uncertain: the mode of transmission, the duration and population variability of latency, infection and protective immunity and, critically, whether the outbreak will fade out or turn into a major epidemic.

The uncertainty may be structural (which model?), parametric (what is `R_0`?), and/or operational (how well do masks work?).

This month, we continue our exploration of epidemiological models and look at how uncertainty affects forecasts of disease dynamics and optimization of intervention strategies.

We show how the impact of the uncertainty on any choice in strategy can be expressed using the Expected Value of Perfect Information (EVPI), which is the potential improvement in outcomes that could be obtained if the uncertainty is resolved before making a decision on the intervention strategy. In other words, by how much could we potentially increase effectiveness of our choice (e.g. lowering total disease burden) if we knew which model best reflects reality?
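The EVPI calculation described above can be sketched for a small table of outcomes. The two interventions, the two candidate models, the burden values and the model probabilities are all made up for illustration, and lower burden is taken to be better.

```python
def evpi(burden, model_probs):
    """Expected value of perfect information.

    burden[a][m]: outcome (lower is better) of action a if model m is true
    model_probs:  probability currently assigned to each model
    """
    actions = range(len(burden))
    # Under uncertainty: commit to the single action with the lowest expected burden.
    expected = [
        sum(p * burden[a][m] for m, p in enumerate(model_probs)) for a in actions
    ]
    best_under_uncertainty = min(expected)
    # With perfect information: choose the best action separately for each model.
    best_with_information = sum(
        p * min(burden[a][m] for a in actions) for m, p in enumerate(model_probs)
    )
    return best_under_uncertainty - best_with_information


# Rows are interventions, columns are candidate epidemic models.
burden = [[100, 400],
          [300, 200]]
value = evpi(burden, [0.5, 0.5])
```

Here either intervention has an expected burden of 250, but knowing the true model in advance would bring it down to 150, so resolving the uncertainty is worth up to 100 units of burden. An EVPI of zero would mean the same intervention is best under every model and the uncertainty does not matter for the decision.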

This column has an interactive supplemental component (download code) that allows you to explore the impact of uncertainty in `R_0` and immunity duration on timing and size of epidemic waves and the total burden of the outbreak and calculate EVPI for various outbreak models and scenarios.

Bjørnstad, O.N., Shea, K., Krzywinski, M. & Altman, N. (2020) Points of significance: Uncertainty and the management of epidemics. Nature Methods 17.

Background reading

Bjørnstad, O.N., Shea, K., Krzywinski, M. & Altman, N. (2020) Points of significance: Modeling infectious epidemics. Nature Methods 17:455–456.

Bjørnstad, O.N., Shea, K., Krzywinski, M. & Altman, N. (2020) Points of significance: The SEIRS model for infectious disease dynamics. Nature Methods 17:557–558.

Cover of Nature Genetics August 2020

Our design on the cover of Nature Genetics's August 2020 issue is “Dichotomy of Chromatin in Color”. Thanks to Dr. Andy Mungall for suggesting this terrific title.

The cover design accompanies our report in the issue Gagliardi, A., Porter, V.L., Zong, Z. et al. (2020) Analysis of Ugandan cervical carcinomas identifies human papillomavirus clade–specific epigenome and transcriptome landscapes. Nature Genetics 52:800–810.

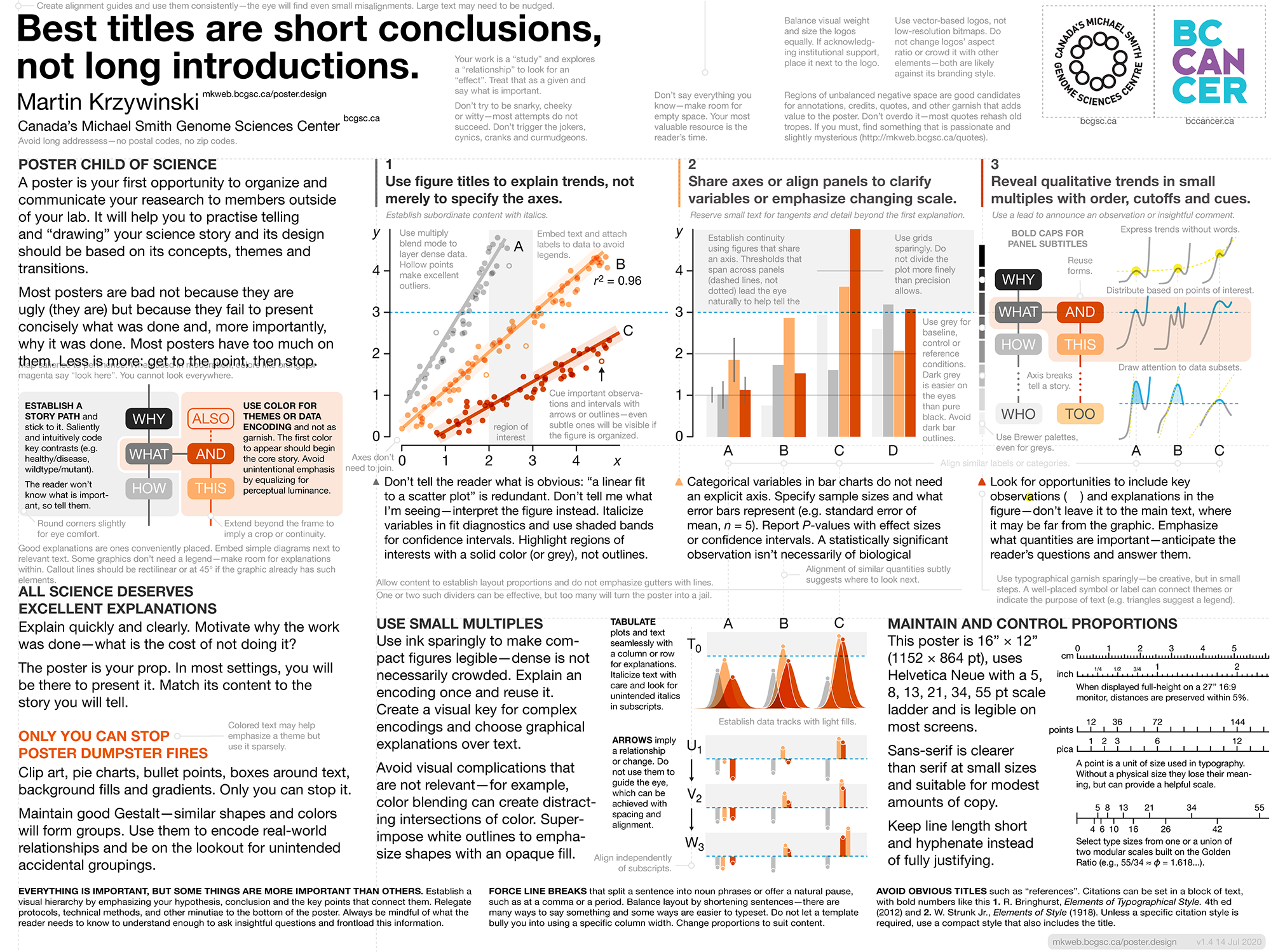

Poster Design Guidelines

Clear, concise, legible and compelling.

The PDF template is a poster about making posters. It provides design, typography and data visualization tips with minimum fuss. Follow its advice until you have developed enough design sobriety and experience to know when to go your own way.

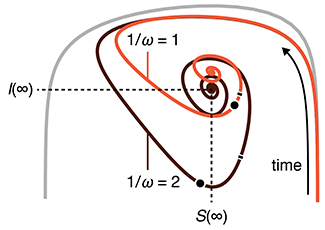

The SEIRS model for infectious disease dynamics

Realistic models of epidemics account for latency, loss of immunity, births and deaths.

We continue with our discussion about epidemic models and show how births, deaths and loss of immunity can create epidemic waves—a periodic fluctuation in the fraction of population that is infected.

This column has an interactive supplemental component (download code) that allows you to explore epidemic waves and introduces the idea of the phase plane, a compact way to understand the evolution of an epidemic over its entire course.

Bjørnstad, O.N., Shea, K., Krzywinski, M. & Altman, N. (2020) Points of significance: The SEIRS model for infectious disease dynamics. Nature Methods 17:557–558.

Background reading

Bjørnstad, O.N., Shea, K., Krzywinski, M. & Altman, N. (2020) Points of significance: Modeling infectious epidemics. Nature Methods 17:455–456.

Gene Machines

Shifting soundscapes, textures and rhythmic loops produced by laboratory machines.

In commemoration of the 20th anniversary of Canada's Michael Smith Genome Sciences Centre, Segue was commissioned to create an original composition based on audio recordings from the GSC's laboratory equipment, robots and computers—to make “music” from the noise they produce.

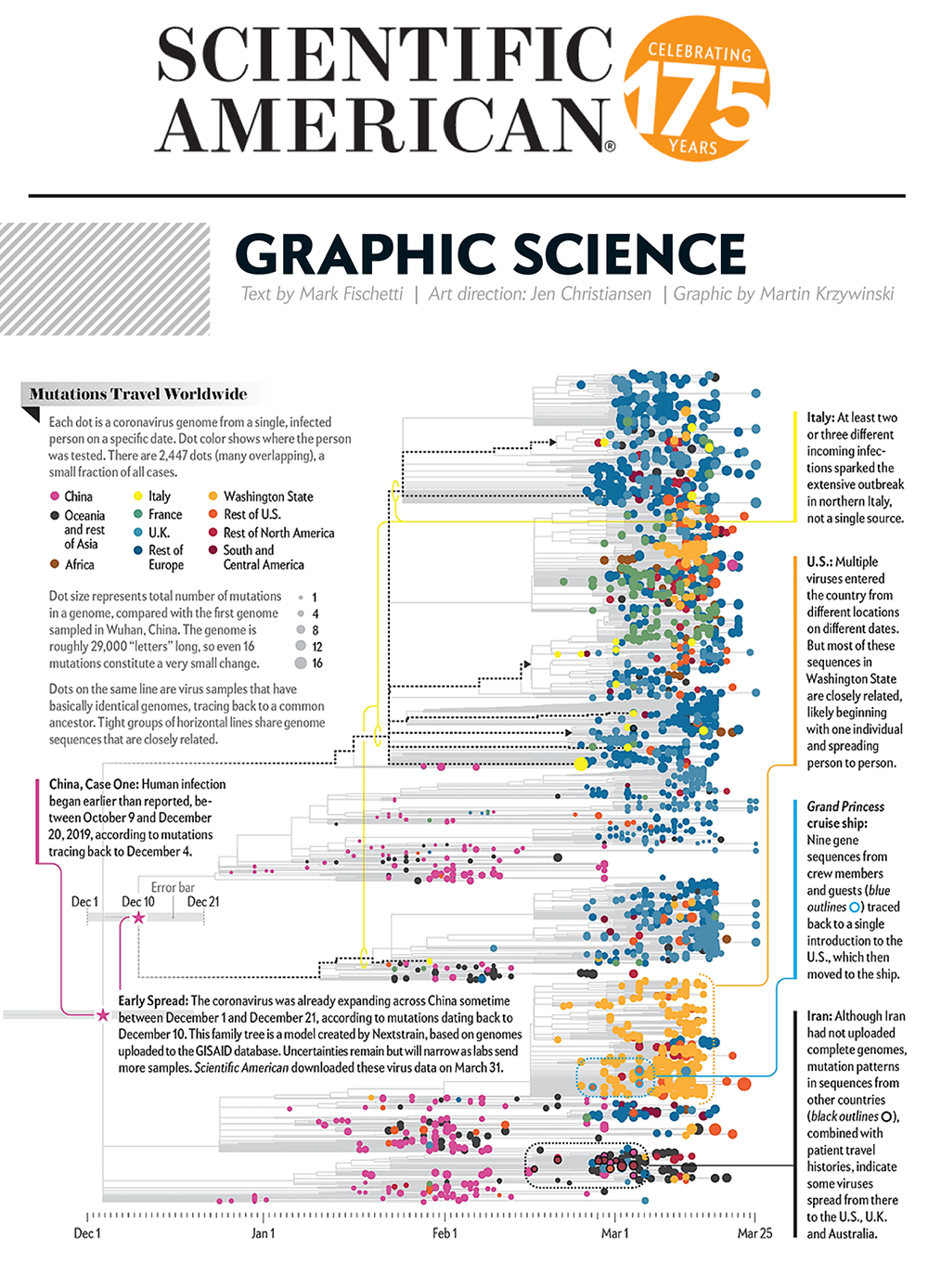

Virus Mutations Reveal How COVID-19 Really Spread

Genetic sequences of the coronavirus tell story of when the virus arrived in each country and where it came from.

Our graphic in Scientific American's Graphic Science section in the June 2020 issue shows a phylogenetic tree based on a snapshot of the data model from Nextstrain as of 31 March 2020.

Cover of Nature Cancer April 2020

Our design on the cover of Nature Cancer's April 2020 issue shows mutation spectra of patients from the POG570 cohort of 570 individuals with advanced metastatic cancer.

The cover design accompanies our report in the issue Pleasance, E., Titmuss, E., Williamson, L. et al. (2020) Pan-cancer analysis of advanced patient tumors reveals interactions between therapy and genomic landscapes. Nat Cancer 1:452–468.

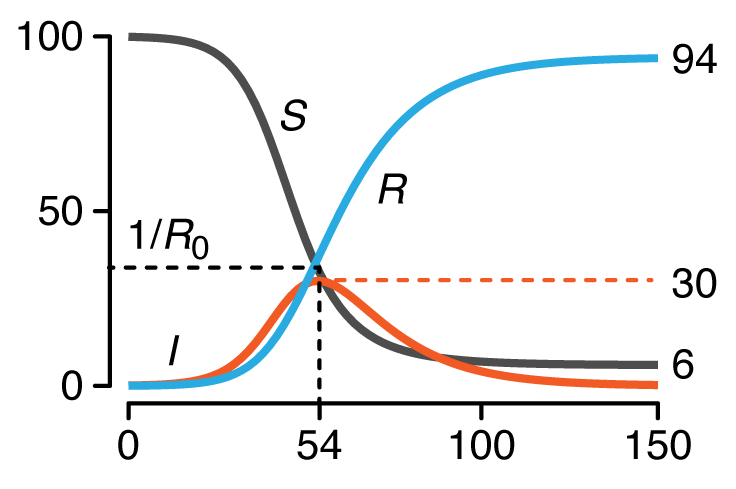

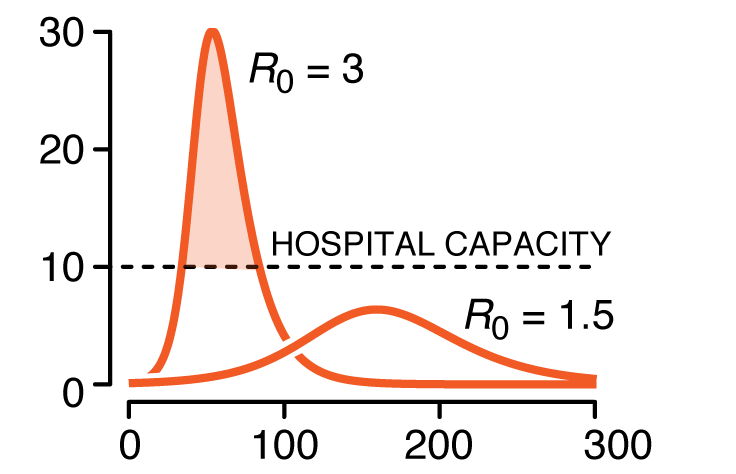

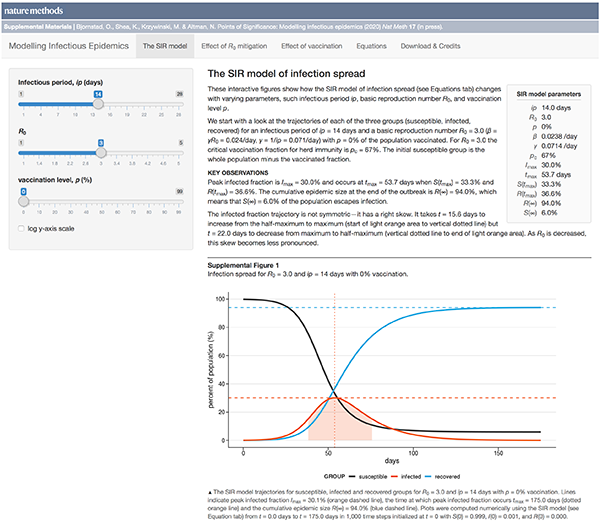

Modeling infectious epidemics

Every day sadder and sadder news of its increase. In the City died this week 7496; and of them, 6102 of the plague. But it is feared that the true number of the dead this week is near 10,000 ....

—Samuel Pepys, 1665

This month, we begin a series of columns on epidemiological models. We start with the basic SIR model, which models the spread of an infection between three groups in a population: susceptible, infected and recovered.

We discuss conditions under which an outbreak occurs, estimates of spread characteristics and the effects that mitigation can have on disease trajectories. We show the trends that arise when "flattening the curve" by decreasing `R_0`.
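A minimal sketch of the basic SIR model, integrated with simple Euler steps. This is not the column's supplemental code. The function name, the 5-day infectious period, the initial infected fraction and the step size are assumptions made for this example.

```python
def simulate_sir(r0, infectious_period, i0=0.001, days=300, dt=0.1):
    """Integrate the SIR equations; return (peak infected fraction, final recovered)."""
    gamma = 1.0 / infectious_period   # recovery rate
    beta = r0 * gamma                 # transmission rate implied by R_0
    s, i, r = 1.0 - i0, i0, 0.0
    peak = i
    for _ in range(int(days / dt)):
        new_infections = beta * s * i * dt
        new_recoveries = gamma * i * dt
        s = s - new_infections
        i = i + new_infections - new_recoveries
        r = r + new_recoveries
        peak = max(peak, i)
    return peak, r


peak_high, final_high = simulate_sir(r0=3.0, infectious_period=5)
peak_low, final_low = simulate_sir(r0=1.5, infectious_period=5)
peak_none, final_none = simulate_sir(r0=0.8, infectious_period=5)
```

Lowering `R_0` from 3 to 1.5 gives a much lower peak and a smaller final outbreak size, and with `R_0` below 1 the infected fraction never rises above its starting value, so no outbreak occurs.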

This column has an interactive supplemental component (download code) that allows you to explore how the model curves change with parameters such as infectious period, basic reproduction number and vaccination level.

Bjørnstad, O.N., Shea, K., Krzywinski, M. & Altman, N. (2020) Points of significance: Modeling infectious epidemics. Nature Methods 17:455–456.

The Outbreak Poems

I'm writing poetry daily to put my feelings into words more often during the COVID-19 outbreak.

Your hours will last me my years.

Hole in heart is bigger than you were.

From hand to heart in a flutter.

Come fly in my heart for a while.

Can't feel you in my hand, dying.

Need new words, please, for these feelings.

Mini dee you put you in me.

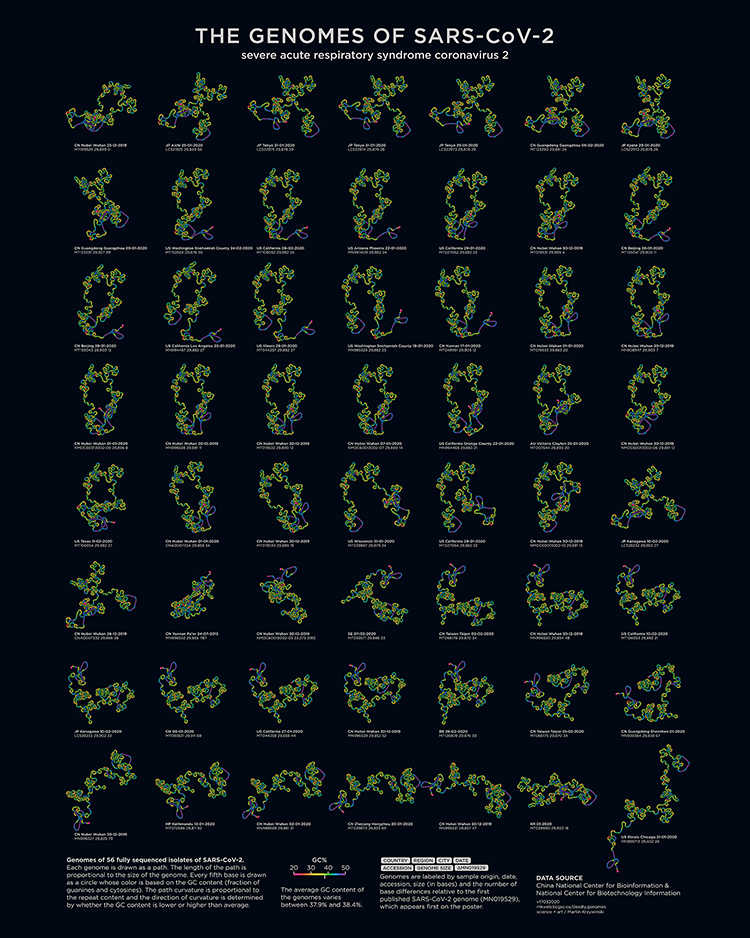

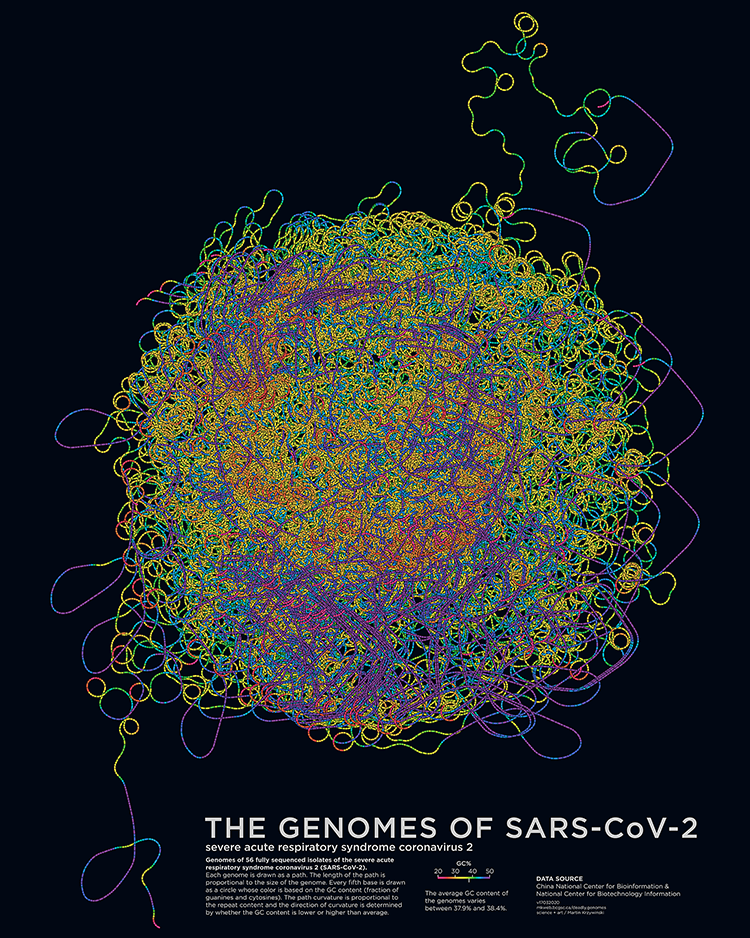

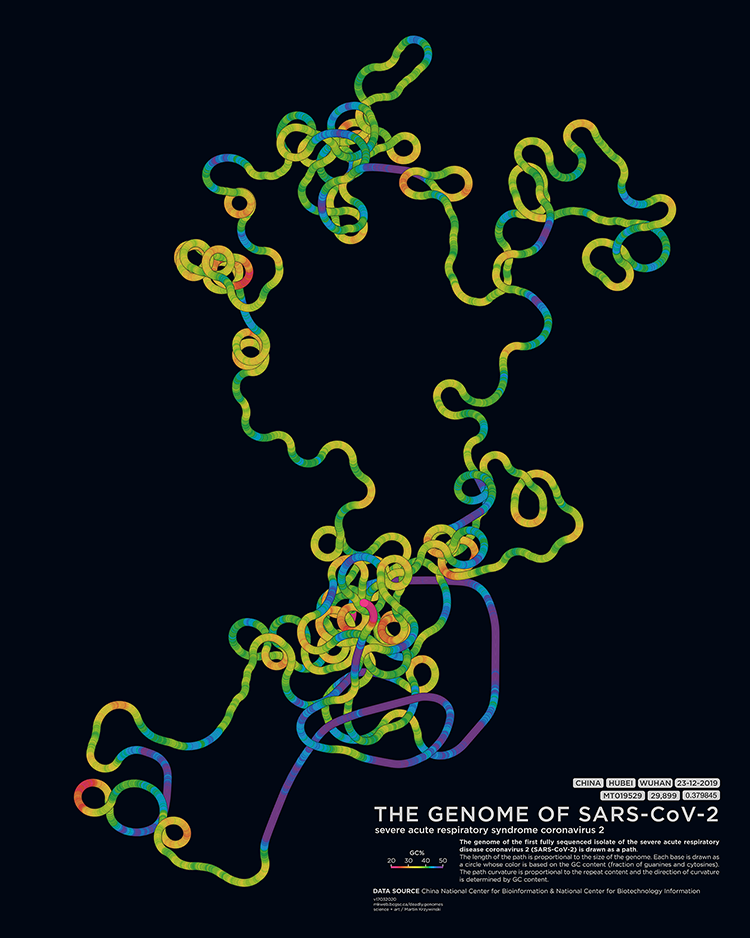

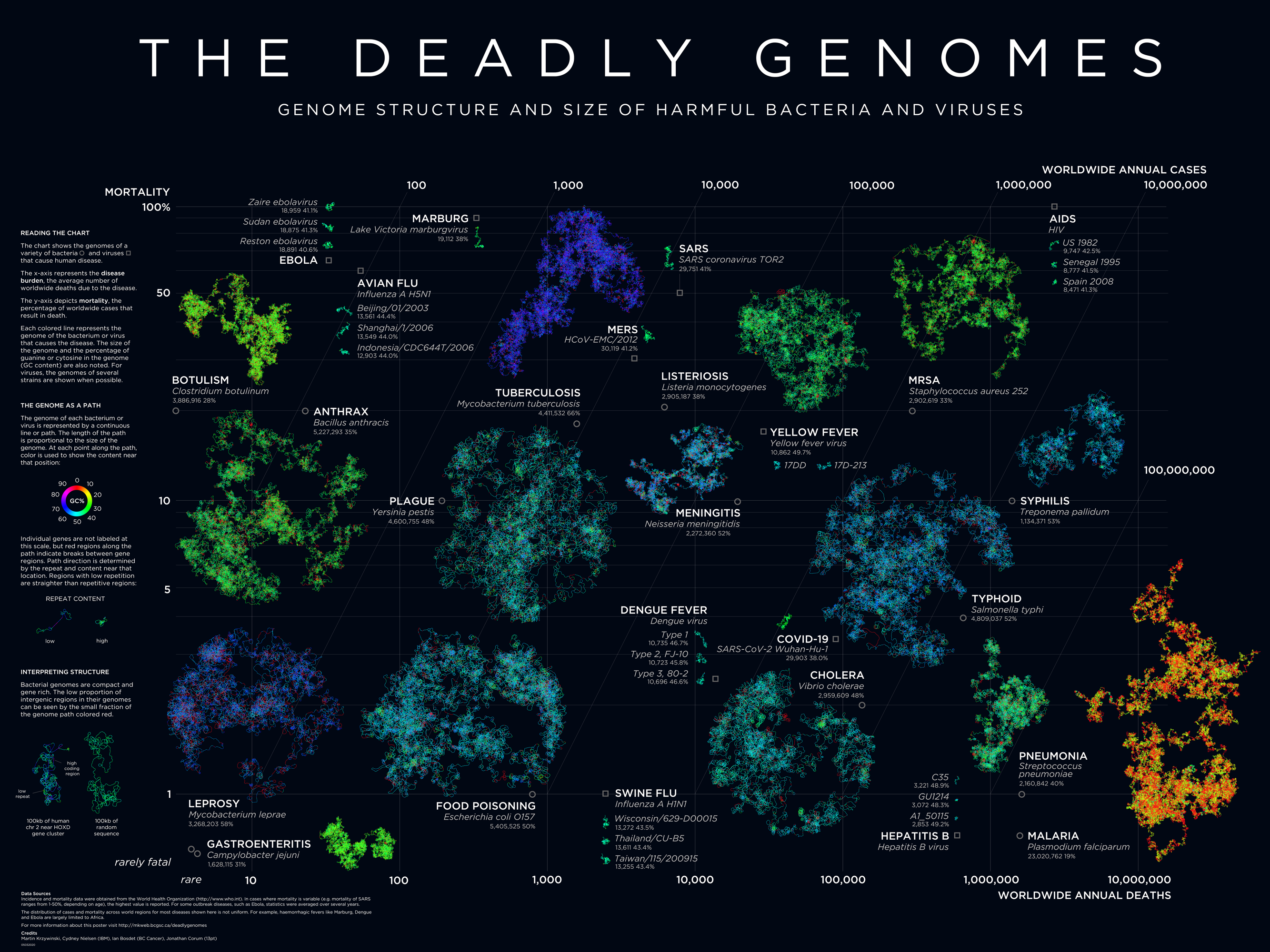

Deadly Genomes: Genome Structure and Size of Harmful Bacteria and Viruses

A poster full of epidemiological worry and statistics. Now updated with the genome of SARS-CoV-2 and COVID-19 case statistics as of 3 March 2020.

Bacterial and viral genomes of various diseases are drawn as paths with color encoding local GC content and curvature encoding local repeat content. Position of the genome encodes prevalence and mortality rate.

The deadly genomes collection has been updated with posters of the genome of SARS-CoV-2, the novel coronavirus that causes COVID-19.

Using Circos in Galaxy Australia Workshop

A workshop on using the Circos Galaxy wrapper by Hiltemann and Rasche. Event organized by Australian Biocommons.

Galaxy wrapper training materials, Saskia Hiltemann, Helena Rasche, 2020 Visualisation with Circos (Galaxy Training Materials).

Essence of Data Visualization in Bioinformatics Webinar

My webinar on fundamental concepts in data visualization and visual communication of scientific data and concepts. Event organized by Australian Biocommons.

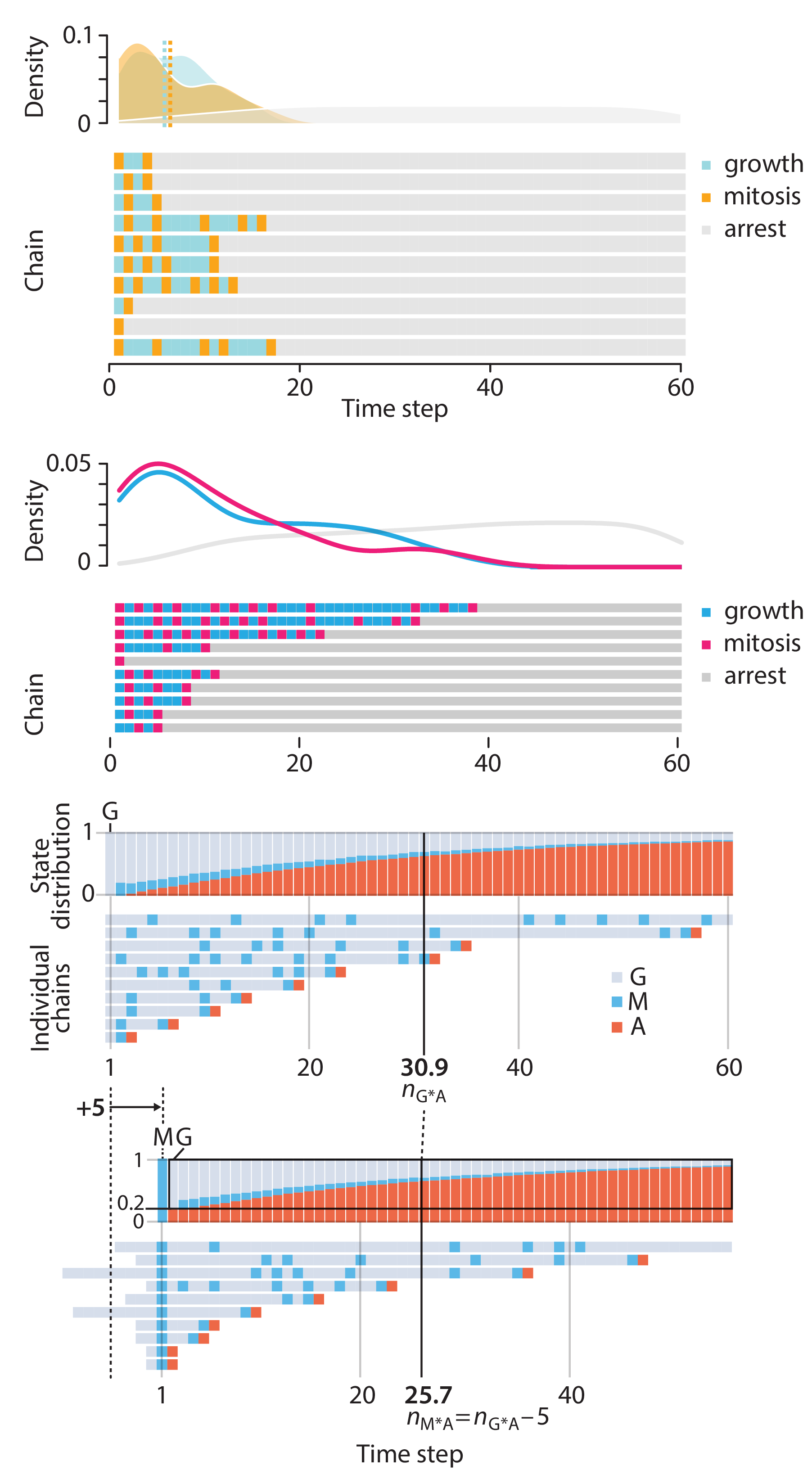

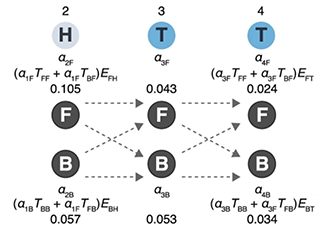

Markov models — training and evaluation of hidden Markov models

With one eye you are looking at the outside world, while with the other you are looking within yourself.

—Amedeo Modigliani

Following up on our Markov Chain column and Hidden Markov model column, this month we look at how Markov models are trained using the example of a biased coin.

We introduce the concepts of forward and backward probabilities and explicitly show how they are calculated in the training process using the Baum-Welch algorithm. We also discuss the value of ensemble models and the use of pseudocounts for cases where rare observations are expected but not necessarily seen.
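The role of pseudocounts can be sketched in a few lines. This is only an illustration of the smoothing idea, not the Baum-Welch procedure from the column; the function name and the pseudocount of 1 are assumptions.

```python
from collections import Counter


def emission_probabilities(observations, alphabet, pseudocount=1.0):
    """Estimate emission probabilities, adding a pseudocount to every symbol.

    A positive pseudocount keeps symbols that were never observed
    from being assigned a probability of exactly zero.
    """
    counts = Counter(observations)
    total = len(observations) + pseudocount * len(alphabet)
    return {sym: (counts[sym] + pseudocount) / total for sym in alphabet}


flips = "HHHHHHHH"  # a short run from a biased coin in which tails was never seen
raw = emission_probabilities(flips, "HT", pseudocount=0.0)
smoothed = emission_probabilities(flips, "HT", pseudocount=1.0)
print(raw)       # tails gets probability 0
print(smoothed)  # tails gets a small non-zero probability
```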

Grewal, J., Krzywinski, M. & Altman, N. (2019) Points of significance: Markov models — training and evaluation of hidden Markov models. Nature Methods 17:121–122.

Background reading

Grewal, J., Krzywinski, M. & Altman, N. (2019) Points of significance: Hidden Markov models. Nature Methods 16:795–796.

Grewal, J., Krzywinski, M. & Altman, N. (2019) Points of significance: Markov Chains. Nature Methods 16:663–664.

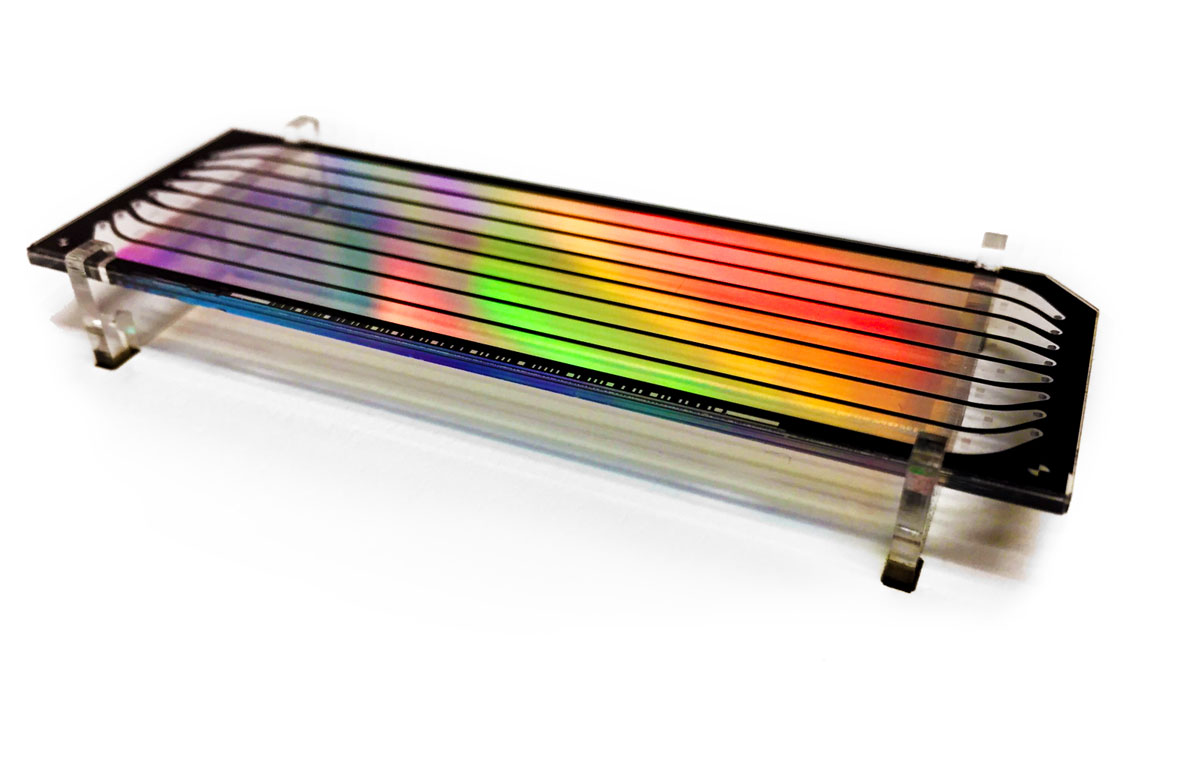

Genome Sciences Center 20th Anniversary Clothing, Music, Drinks and Art

Science. Timeliness. Respect.

Read about the design of the clothing, music, drinks and art for the Genome Sciences Center 20th Anniversary Celebration, held on 15 November 2019.

As part of the celebration and with the help of our engineering team, we framed 48 flow cells from the lab.

Each flow cell was accompanied by an interpretive plaque explaining the technology behind the flow cell and the sample information and sequence content.

Scientific data visualization: Aesthetic for diagrammatic clarity

The scientific process works because all its output is empirically constrained.

My chapter from The Aesthetics of Scientific Data Representation, More than Pretty Pictures, in which I discuss the principles of data visualization and connect them to the concept of "quality" introduced by Robert Pirsig in Zen and the Art of Motorcycle Maintenance.

Yearning for the Infinite — Aleph 2

Discover Cantor's transfinite numbers through my music video for the Aleph 2 track of Max Cooper's Yearning for the Infinite (album page, event page).

I discuss the math behind the video and the system I built to create the video.

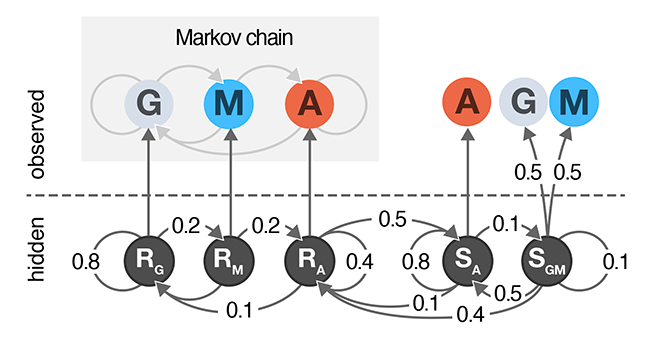

Hidden Markov Models

Everything we see hides another thing, we always want to see what is hidden by what we see.

—Rene Magritte

A Hidden Markov Model extends a Markov chain to have hidden states. Hidden states model aspects of the system that cannot be directly observed. They themselves form a Markov chain, and each state may emit one or more observed values.

Hidden states in HMMs do not have to have meaning—they can be used to account for measurement errors, compress multi-modal observational data, or to detect unobservable events.

In this column, we extend the cell growth model from our Markov Chain column to include two hidden states: normal and sedentary.

We show how to calculate forward probabilities that can predict the most likely path through the HMM given an observed sequence.
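A minimal sketch of the forward calculation for a two-state HMM follows. The state names come from the text above; the observation symbols (`grow`, `rest`) and every probability are hypothetical values picked for the example, not the column's parameters.

```python
def forward(observations, states, start, trans, emit):
    """Return the forward probabilities and the likelihood of the sequence.

    alpha[t][s] is the probability of seeing the first t+1 observations
    and being in hidden state s at time t.
    """
    alpha = [{s: start[s] * emit[s][observations[0]] for s in states}]
    for obs in observations[1:]:
        prev = alpha[-1]
        alpha.append({
            s: emit[s][obs] * sum(prev[p] * trans[p][s] for p in states)
            for s in states
        })
    return alpha, sum(alpha[-1].values())


# Hypothetical parameters for illustration only.
states = ("normal", "sedentary")
start = {"normal": 0.8, "sedentary": 0.2}
trans = {
    "normal": {"normal": 0.9, "sedentary": 0.1},
    "sedentary": {"normal": 0.3, "sedentary": 0.7},
}
emit = {
    "normal": {"grow": 0.8, "rest": 0.2},
    "sedentary": {"grow": 0.1, "rest": 0.9},
}

alpha, likelihood = forward(["grow", "rest", "rest"], states, start, trans, emit)
print(alpha[-1], likelihood)
```

The forward pass gives the likelihood of the observed sequence; recovering a single best path through the hidden states would need a separate maximization step (for example the Viterbi algorithm), which is not shown here.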

Grewal, J., Krzywinski, M. & Altman, N. (2019) Points of significance: Hidden Markov Models. Nature Methods 16:795–796.

Background reading

Grewal, J., Krzywinski, M. & Altman, N. (2019) Points of significance: Markov Chains. Nature Methods 16:663–664.

Hola Mundo Cover

My cover design for Hola Mundo by Hannah Fry. Published by Blackie Books.

Curious how the design was created? Read the full details.

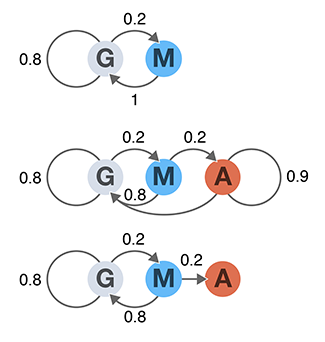

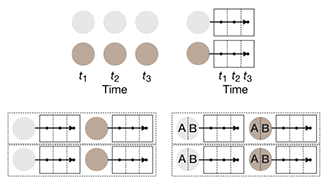

Markov Chains

You can look back there to explain things,

but the explanation disappears.

You'll never find it there.

Things are not explained by the past.

They're explained by what happens now.

—Alan Watts

A Markov chain is a probabilistic model that is used to model how a system changes over time as a series of transitions between states. Each transition is assigned a probability that defines the chance of the system changing from one state to another.

Together with the states, these transition probabilities define a stochastic model with the Markov property: transition probabilities only depend on the current state—the future is independent of the past if the present is known.

Once the transition probabilities are defined in matrix form, it is easy to predict the distribution of future states of the system. We cover concepts of aperiodicity, irreducibility, limiting and stationary distributions and absorption.
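To make the matrix form concrete, here is a small sketch that propagates a state distribution through a two-state chain. The transition matrix is an arbitrary example, not the one used in the column.

```python
def step(dist, P):
    """Advance a state distribution by one transition: dist @ P."""
    n = len(P)
    return [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]


def distribution_after(dist, P, steps):
    """Distribution over states after the given number of transitions."""
    for _ in range(steps):
        dist = step(dist, P)
    return dist


# Rows are the current state, columns the next state; each row sums to 1.
P = [[0.9, 0.1],
     [0.5, 0.5]]
start = [1.0, 0.0]

print(distribution_after(start, P, 1))
print(distribution_after(start, P, 50))  # close to the stationary distribution
```

This chain is irreducible and aperiodic, so the distribution converges to the same limit, (5/6, 1/6), from any starting state.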

This column is the first part of a series and pairs particularly well with Alan Watts and Blond:ish.

Grewal, J., Krzywinski, M. & Altman, N. (2019) Points of significance: Markov Chains. Nature Methods 16:663–664.

1-bit zoomable gigapixel maps of Moon, Solar System and Sky

Places to go and nobody to see.

Exquisitely detailed maps of places on the Moon, comets and asteroids in the Solar System and stars, deep-sky objects and exoplanets in the northern and southern sky. All maps are zoomable.

Quantile regression

Quantile regression robustly estimates the typical and extreme values of a response.

Quantile regression explores the effect of one or more predictors on quantiles of the response. It can answer questions such as "What is the weight of 90% of individuals of a given height?"

Unlike in traditional mean regression methods, no assumptions about the distribution of the response are required, which makes it practical, robust and amenable to skewed distributions.

Quantile regression is also very useful when extremes are interesting or when the response variance varies with the predictors.
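Quantile estimates are commonly defined as minimizers of an asymmetric "pinball" (check) loss. The sketch below shows this for the simplest case of a constant model with no predictors; the data values and function names are made up for illustration.

```python
def pinball_loss(q, tau, values):
    """Asymmetric absolute loss whose minimizer is the tau-th quantile."""
    return sum(
        tau * (y - q) if y >= q else (1 - tau) * (q - y)
        for y in values
    )


def best_constant(tau, values):
    """Search the data points for the value that minimizes the pinball loss."""
    return min(values, key=lambda q: pinball_loss(q, tau, values))


# Hypothetical weights with one extreme value.
weights = [52, 55, 58, 60, 61, 63, 65, 70, 75, 82, 110]
print(best_constant(0.5, weights))  # the median
print(best_constant(0.9, weights))  # the 90th percentile
print(sum(weights) / len(weights))  # the mean, pulled up by the extreme value
```

Full quantile regression minimizes the same loss over the coefficients of a model with predictors, which typically requires a linear programming or iterative solver.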

Das, K., Krzywinski, M. & Altman, N. (2019) Points of significance: Quantile regression. Nature Methods 16:451–452.

Background reading

Altman, N. & Krzywinski, M. (2015) Points of significance: Simple linear regression. Nature Methods 12:999–1000.

Analyzing outliers: Robust methods to the rescue

Robust regression generates more reliable estimates by detecting and downweighting outliers.

Outliers can degrade the fit of linear regression models when the estimation is performed using ordinary least squares. The impact of outliers can be mitigated with methods that provide robust inference and greater reliability in the presence of anomalous values.

We discuss MM-estimation and show how it can be used to keep your fitting sane and reliable.
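MM-estimation itself is too involved for a short snippet, so the sketch below swaps in a simpler relative: a Huber M-estimate of location computed by iteratively reweighting the observations. It illustrates the downweighting idea only. The tuning constant 1.345 is a conventional choice, and the data are invented.

```python
import statistics


def huber_location(values, c=1.345, iterations=50):
    """Huber M-estimate of location via iteratively reweighted averaging.

    This is NOT MM-estimation; it is a simpler estimator used here to
    show how observations far from the bulk of the data are downweighted.
    """
    mu = statistics.median(values)
    mad = statistics.median([abs(v - mu) for v in values])
    scale = 1.4826 * mad  # MAD rescaled to match the s.d. of normal data
    if scale == 0:
        return mu
    for _ in range(iterations):
        weights = []
        for v in values:
            r = abs(v - mu) / scale
            weights.append(1.0 if r <= c else c / r)
        mu = sum(w * v for w, v in zip(weights, values)) / sum(weights)
    return mu


data = [9.8, 10.1, 10.0, 9.9, 10.2, 10.0, 25.0]  # one gross outlier
print(statistics.mean(data))  # dragged toward the outlier
print(huber_location(data))   # stays near the bulk of the data
```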

Greco, L., Luta, G., Krzywinski, M. & Altman, N. (2019) Points of significance: Analyzing outliers: Robust methods to the rescue. Nature Methods 16:275–276.

Background reading

Altman, N. & Krzywinski, M. (2016) Points of significance: Analyzing outliers: Influential or nuisance. Nature Methods 13:281–282.

Two-level factorial experiments

To find which experimental factors have an effect, simultaneously examine the difference between the high and low levels of each.

Two-level factorial experiments, in which all combinations of multiple factor levels are used, efficiently estimate factor effects and detect interactions—desirable statistical qualities that can provide deep insight into a system.

They offer two benefits over the widely used one-factor-at-a-time (OFAT) experiments: efficiency and ability to detect interactions.

Since the number of factor combinations can quickly increase, one approach is to model only some of the factorial effects using empirically-validated assumptions of effect sparsity and effect hierarchy. Effect sparsity tells us that in factorial experiments most of the factorial terms are likely to be unimportant. Effect hierarchy tells us that low-order terms (e.g. main effects) tend to be larger than higher-order terms (e.g. two-factor or three-factor interactions).
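A small sketch of how main effects and interactions are estimated from a full two-level design, using coded levels of -1 (low) and +1 (high). The response values are invented for a two-factor example and the function name is an assumption.

```python
from itertools import product
from math import prod


def factorial_effects(responses, n_factors):
    """Estimate all factorial effects from a full 2^k design.

    `responses` maps a tuple of coded levels (-1 or +1) to the response.
    Each effect is the mean response at the high level of the contrast
    minus the mean response at its low level.
    """
    runs = list(product((-1, 1), repeat=n_factors))
    effects = {}
    for mask in product((0, 1), repeat=n_factors):
        if not any(mask):
            continue  # skip the overall mean
        name = "".join(chr(ord("A") + i) for i, m in enumerate(mask) if m)
        contrast = sum(
            responses[run] * prod(level for level, m in zip(run, mask) if m)
            for run in runs
        )
        effects[name] = contrast / (len(runs) / 2)
    return effects


# Hypothetical responses: factor A matters, B does not, and A and B interact.
responses = {(-1, -1): 9, (-1, 1): 5, (1, -1): 11, (1, 1): 15}
print(factorial_effects(responses, 2))
```

A one-factor-at-a-time experiment that varied B only at the low level of A would see B lowering the response and miss that the effect reverses at the high level of A.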

Smucker, B., Krzywinski, M. & Altman, N. (2019) Points of significance: Two-level factorial experiments. Nature Methods 16:211–212.

Background reading

Krzywinski, M. & Altman, N. (2014) Points of significance: Designing comparative experiments. Nature Methods 11:597–598.

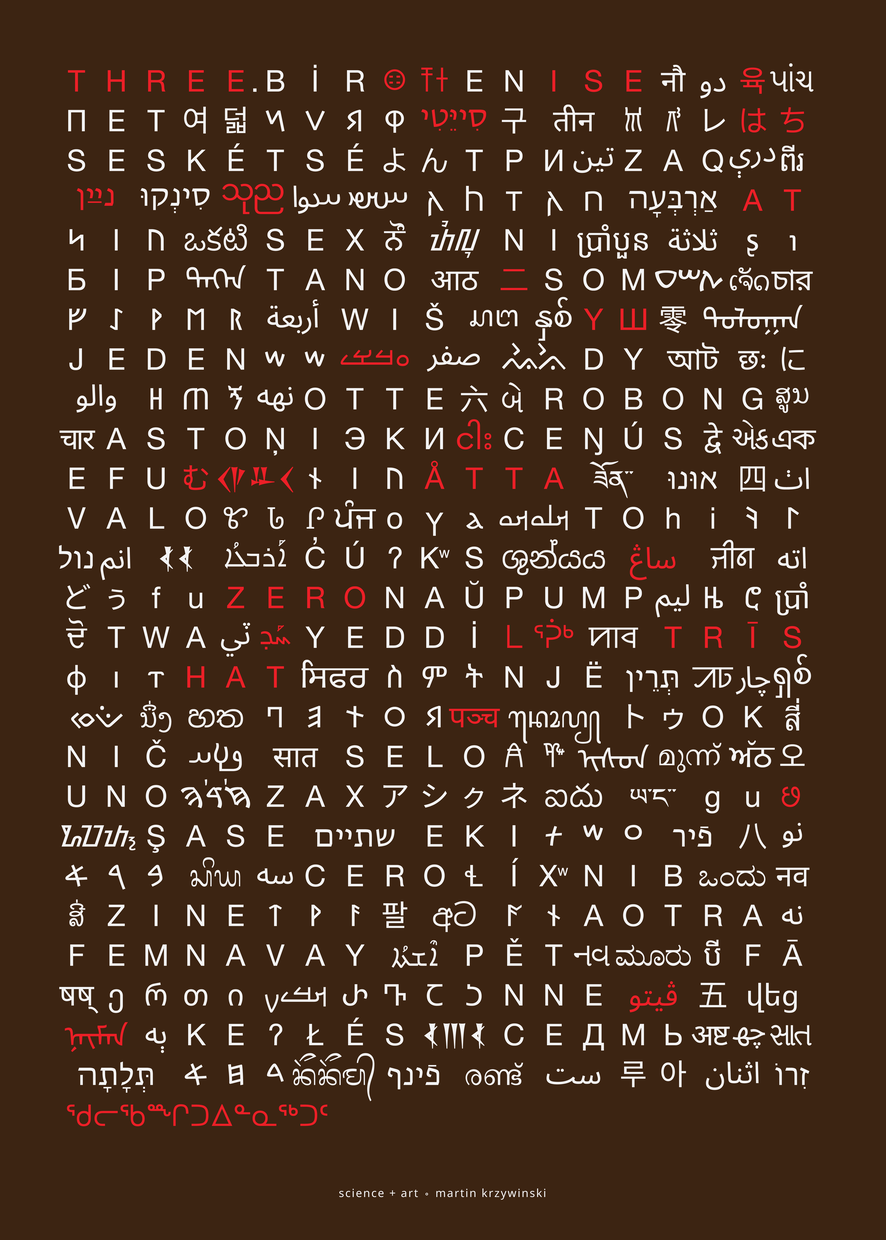

Happy 2019 `\pi` Day—

Digits, internationally

Celebrate `\pi` Day (March 14th) and set out to explore accents unknown (to you)!

This year is purely typographical, with something for everyone. Hundreds of digits and hundreds of languages.

A special kids' edition merges math with color and fat fonts.

Check out art from previous years: 2013 `\pi` Day and 2014 `\pi` Day, 2015 `\pi` Day, 2016 `\pi` Day, 2017 `\pi` Day and 2018 `\pi` Day.

Tree of Emotional Life

One moment you're :) and the next you're :-.

Make sense of it all with my Tree of Emotional Life—a hierarchical account of how we feel.

Find and snap to colors in an image

One of my color tools, the colorsnap application snaps colors in an image to a set of reference colors and reports their proportion.
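The snapping idea can be sketched as a nearest-neighbor lookup. This is not the colorsnap implementation: the function name, the use of Euclidean distance in RGB and the two reference colors are all assumptions for illustration.

```python
from collections import Counter


def snap_colors(pixels, reference):
    """Snap each RGB pixel to its nearest reference color.

    Returns the proportion of pixels assigned to each reference color.
    Distance is plain Euclidean distance in RGB, an assumption of this
    sketch; a perceptual color space may be a better choice in practice.
    """
    def nearest(pixel):
        return min(
            reference,
            key=lambda name: sum((a - b) ** 2 for a, b in zip(pixel, reference[name])),
        )

    counts = Counter(nearest(p) for p in pixels)
    return {name: counts[name] / len(pixels) for name in reference}


# Hypothetical reference palette and a four-pixel "image".
reference = {"red": (255, 0, 0), "blue": (0, 0, 255)}
pixels = [(250, 10, 10), (200, 30, 60), (180, 40, 40), (20, 0, 200)]
print(snap_colors(pixels, reference))
```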

Below is Times Square rendered using the colors of the MTA subway lines.

Colors used by the New York MTA subway lines.

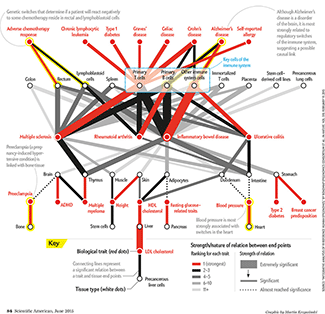

Take your medicine ... now

Drugs could be more effective if taken when the genetic proteins they target are most active.

Design tip: rediscover CMYK primaries.

More of my Scientific American Graphic Science designs

Ruben et al. A database of tissue-specific rhythmically expressed human genes has potential applications in circadian medicine Science Translational Medicine 10 Issue 458, eaat8806.

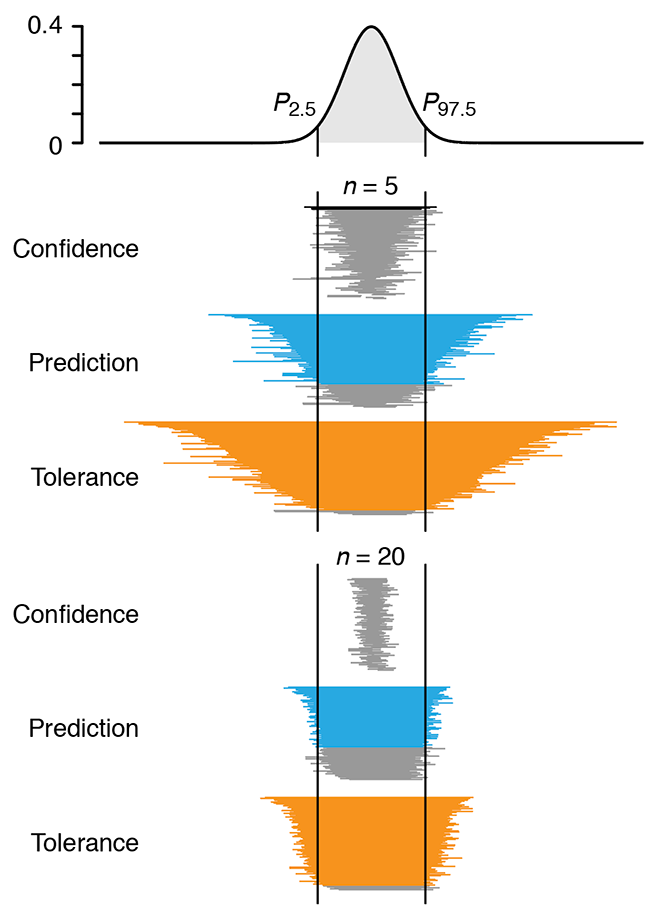

Predicting with confidence and tolerance

I abhor averages. I like the individual case. —J.D. Brandeis.

We focus on the important distinction between confidence intervals, typically used to express uncertainty of a sampling statistic such as the mean, and prediction and tolerance intervals, used to make statements about the next value to be drawn from the population.

Confidence intervals provide coverage of a single point—the population mean—with the assurance that the probability of non-coverage is some acceptable value (e.g. 0.05). On the other hand, prediction and tolerance intervals both give information about typical values from the population and the percentage of the population expected to be in the interval. For example, a tolerance interval can be configured to tell us what fraction of sampled values (e.g. 95%) will fall into an interval some fraction of the time (e.g. 95%).
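The difference between a confidence interval for the mean and a prediction interval for the next value can be sketched as follows, assuming normally distributed data. The sample is invented, and the critical value is hard-coded for 9 degrees of freedom because the standard library has no Student's t quantile function. Tolerance intervals need a different factor and are not shown.

```python
import math
import statistics

# Two-sided 95% critical value of Student's t with 9 degrees of freedom.
T_975_DF9 = 2.262


def intervals(sample, t_crit):
    """Return (confidence interval for the mean, prediction interval).

    Both assume a normal population; t_crit must match len(sample) - 1
    degrees of freedom.
    """
    n = len(sample)
    mean = statistics.mean(sample)
    s = statistics.stdev(sample)
    ci_half = t_crit * s / math.sqrt(n)          # uncertainty in the mean
    pi_half = t_crit * s * math.sqrt(1 + 1 / n)  # adds spread of a new value
    return (mean - ci_half, mean + ci_half), (mean - pi_half, mean + pi_half)


sample = [4.9, 5.1, 5.0, 5.3, 4.8, 5.2, 5.0, 4.7, 5.1, 4.9]
ci, pi = intervals(sample, T_975_DF9)
print("95% CI for the mean:", ci)
print("95% prediction interval:", pi)
```

The confidence interval narrows as the sample grows, while the prediction interval cannot become narrower than the spread of the population itself.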

Altman, N. & Krzywinski, M. (2018) Points of significance: Predicting with confidence and tolerance. Nature Methods 15:843–844.

Background reading

Krzywinski, M. & Altman, N. (2013) Points of significance: Importance of being uncertain. Nature Methods 10:809–810.

4-day Circos course

A 4-day introductory course on genome data parsing and visualization using Circos. Prepared for the Bioinformatics and Genome Analysis course in Institut Pasteur Tunis, Tunis, Tunisia.

Oryza longistaminata genome cake

Data visualization should be informative and, where possible, tasty.

Stefan Reuscher from Bioscience and Biotechnology Center at Nagoya University celebrates a publication with a Circos cake.

The cake shows an overview of a de-novo assembled genome of a wild rice species Oryza longistaminata.

Optimal experimental design

Customize the experiment for the setting instead of adjusting the setting to fit a classical design.

The presence of constraints in experiments, such as sample size restrictions, awkward blocking or disallowed treatment combinations may make using classical designs very difficult or impossible.

Optimal design is a powerful, general purpose alternative for high quality, statistically grounded designs under nonstandard conditions.

We discuss two types of optimal designs (D-optimal and I-optimal) and show how they can be applied to a scenario with sample size and blocking constraints.
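As a toy illustration of D-optimality, the sketch below searches for the four-run design that maximizes det(X'X) for a straight-line model. The candidate levels, run count and exhaustive search are assumptions for the example. Blocking constraints and I-optimality are not covered, and real problems usually need an exchange algorithm rather than enumeration.

```python
from itertools import combinations_with_replacement


def d_criterion(xs):
    """det(X'X) for the straight-line model y = b0 + b1 * x.

    X has a column of ones and a column of x values, so
    det(X'X) = n * sum(x^2) - (sum(x))^2.
    """
    n = len(xs)
    sx = sum(xs)
    sxx = sum(x * x for x in xs)
    return n * sxx - sx * sx


# Allowed factor settings and a sample size limit of four runs.
candidates = [-1.0, -0.5, 0.0, 0.5, 1.0]
best = max(combinations_with_replacement(candidates, 4), key=d_criterion)
print(best, d_criterion(best))
```

The search puts two runs at each extreme of the range, which gives the most precise estimate of the slope.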

Smucker, B., Krzywinski, M. & Altman, N. (2018) Points of significance: Optimal experimental design. Nature Methods 15:599–600.

Background reading

Krzywinski, M. & Altman, N. (2014) Points of significance: Two factor designs. Nature Methods 11:1187–1188.

Krzywinski, M. & Altman, N. (2014) Points of significance: Analysis of variance (ANOVA) and blocking. Nature Methods 11:699–700.

Krzywinski, M. & Altman, N. (2014) Points of significance: Designing comparative experiments. Nature Methods 11:597–598.

The Whole Earth Cataloguer

All the living things.

An illustration of the Tree of Life, showing some of the key branches.

The tree is drawn as a DNA double helix, with bases colored to encode ribosomal RNA genes from various organisms on the tree.

All living things on earth descended from a single organism called LUCA (last universal common ancestor) and inherited LUCA’s genetic code for basic biological functions, such as translating DNA and creating proteins. Constant genetic mutations shuffled and altered this inheritance and added new genetic material—a process that created the diversity of life we see today. The “tree of life” organizes all organisms based on the extent of shuffling and alteration between them. The full tree has millions of branches and every living organism has its own place at one of the leaves in the tree. The simplified tree shown here depicts all three kingdoms of life: bacteria, archaebacteria and eukaryota. For some organisms a grey bar shows when they first appeared in the tree in millions of years (Ma). The double helix winding around the tree encodes highly conserved ribosomal RNA genes from various organisms.

Johnson, H.L. (2018) The Whole Earth Cataloguer, Sactown, Jun/Jul, p. 89

Why we can't give up this odd way of typing

All fingers report to home row.

An article about keyboard layouts and the history and persistence of QWERTY.

My Carpalx keyboard optimization software is mentioned along with my World's Most Difficult Layout: TNWMLC. True typing hell.

McDonald, T. (2018) Why we can't give up this odd way of typing, BBC, 25 May 2018.

Molecular Case Studies Cover

The theme of the April issue of Molecular Case Studies is precision oncogenomics. We have three papers in the issue based on work done in our Personalized Oncogenomics Program (POG).

The covers of Molecular Case Studies typically show microscopy images, with some shown in a more abstract fashion. There's also the occasional Circos plot.

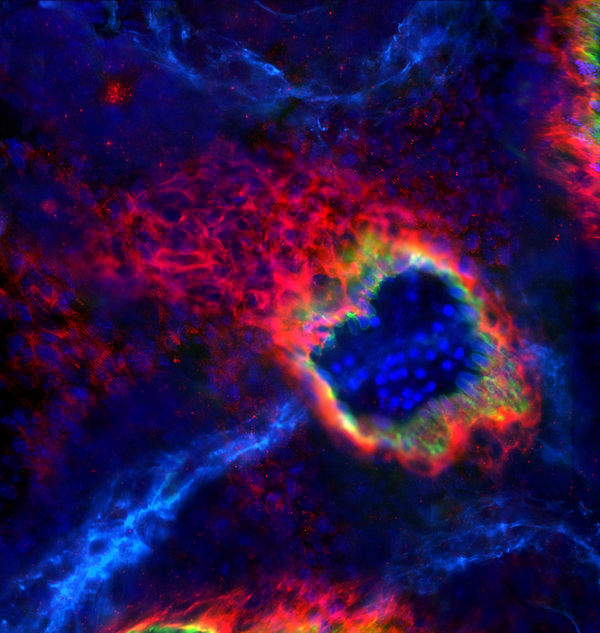

I've previously taken a more fine-art approach to cover design, such as for those of Nature, Genome Research and Trends in Genetics. I've used microscopy images to create a cover for PNAS—the one that made biology look like astrophysics—and thought that this is the kind of material I'd start with for the MCS cover.

Happy 2018 `\tau` Day—Art for everyone

Universe Superclusters and Voids

A map of the nearby superclusters and voids in the Universe.

By "nearby" I mean within 6,000 million light-years.

Datavis for your feet—the 178.75 lb socks

In the past, I've been tangentially involved in fashion design. I've also been more directly involved in fashion photography.

It was now time to design my first ... pair of socks.

In collaboration with Flux Socks, the design features the colors and relative thicknesses of Rogue Olympic weightlifting plates. The first four plates in the stack are the 55, 45, 35 and 25 lb competition plates. The top four plates are the 10, 5, 2.5 and 1.25 lb change plates.

The perceived weight of each sock is 178.75 lb and 357.5 lb for the pair.

The actual weight is much less.

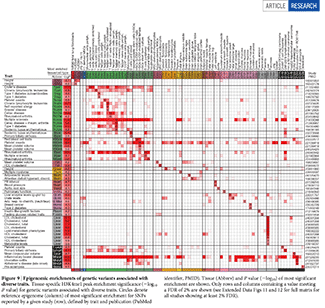

Genes Behind Psychiatric Disorders

Find patterns behind gene expression and disease.

Expression, correlation and network module membership of 11,000+ genes and 5 psychiatric disorders in about 6" x 7" on a single page.

Design tip: Stay calm.

More of my Scientific American Graphic Science designs

Gandal M.J. et al. Shared Molecular Neuropathology Across Major Psychiatric Disorders Parallels Polygenic Overlap Science 359 693–697 (2018)

Curse(s) of dimensionality

There is such a thing as too much of a good thing.

We discuss the many ways in which analysis can be confounded when data has a large number of dimensions (variables). Collectively, these are called the "curses of dimensionality".

Some of these are unintuitive, such as the fact that the volume of the hypersphere increases and then shrinks beyond about 7 dimensions, while the volume of the hypercube always increases. This means that high-dimensional space is "mostly corners" and the distance between points increases greatly with dimension. This has consequences on correlation and classification.
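A short numerical check of the hypersphere and hypercube volumes, assuming a unit-radius ball inscribed in a cube of side 2 (the radius and the function name are choices made for this sketch, not taken from the column). With unit radius the ball's volume peaks at `d = 5`. A different radius shifts the turning point, but the ball's share of the enclosing cube shrinks toward zero either way.

```python
import math


def unit_ball_volume(d):
    """Volume of the d-dimensional ball of radius 1."""
    return math.pi ** (d / 2) / math.gamma(d / 2 + 1)


print("d  ball volume  cube volume  ball/cube")
for d in range(1, 13):
    ball = unit_ball_volume(d)
    cube = 2.0 ** d  # the cube of side 2 that encloses the unit ball
    print(f"{d:2d}  {ball:11.3f}  {cube:11.0f}  {ball / cube:.2e}")
```

The shrinking ratio is the sense in which high-dimensional space is "mostly corners": almost all of the cube's volume lies outside the inscribed ball.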

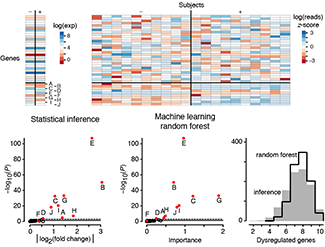

Statistics vs Machine Learning

We conclude our series on Machine Learning with a comparison of two approaches: classical statistical inference and machine learning. The boundary between them is subject to debate, but important generalizations can be made.

Inference creates a mathematical model of the data-generation process to formalize understanding or test a hypothesis about how the system behaves. Prediction aims at forecasting unobserved outcomes or future behavior. Typically we want to do both and know how biological processes work and what will happen next. Inference and ML are complementary in pointing us to biologically meaningful conclusions.

Statistics asks us to choose a model that incorporates our knowledge of the system, and ML requires us to choose a predictive algorithm by relying on its empirical capabilities. Justification for an inference model typically rests on whether we feel it adequately captures the essence of the system. The choice of pattern-learning algorithms often depends on measures of past performance in similar scenarios.

Bzdok, D., Krzywinski, M. & Altman, N. (2018) Points of Significance: Statistics vs machine learning. Nature Methods 15:233–234.

Background reading

Bzdok, D., Krzywinski, M. & Altman, N. (2017) Points of Significance: Machine learning: a primer. Nature Methods 14:1119–1120.

Bzdok, D., Krzywinski, M. & Altman, N. (2018) Points of Significance: Machine learning: supervised methods. Nature Methods 15:5–6.

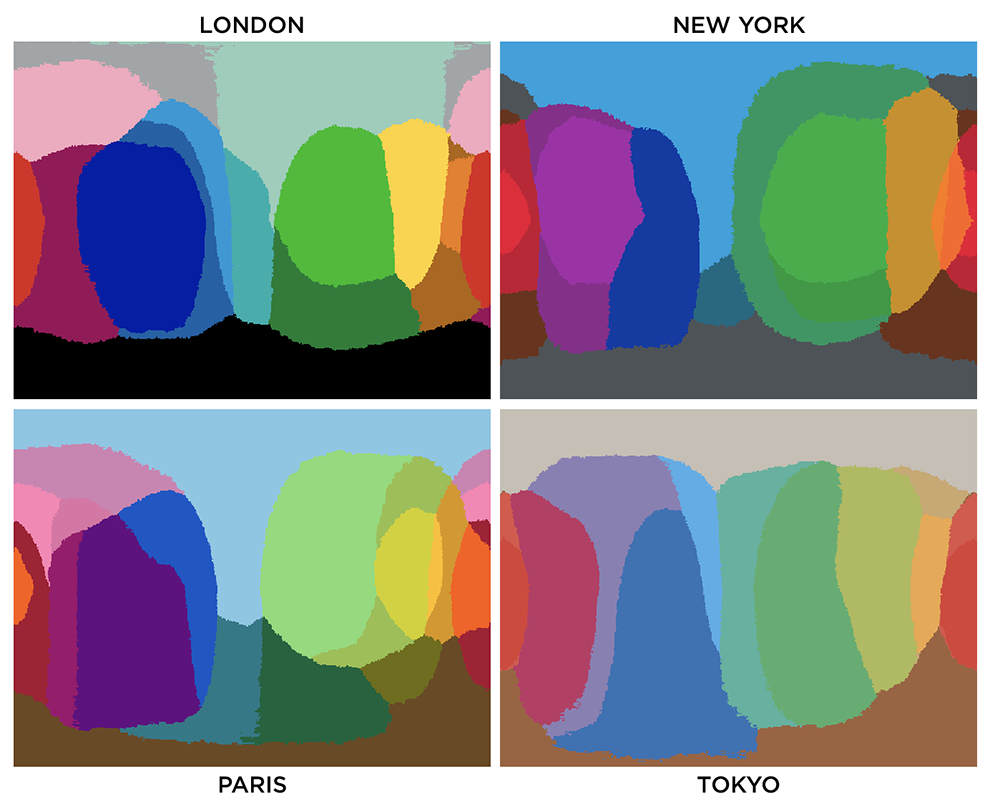

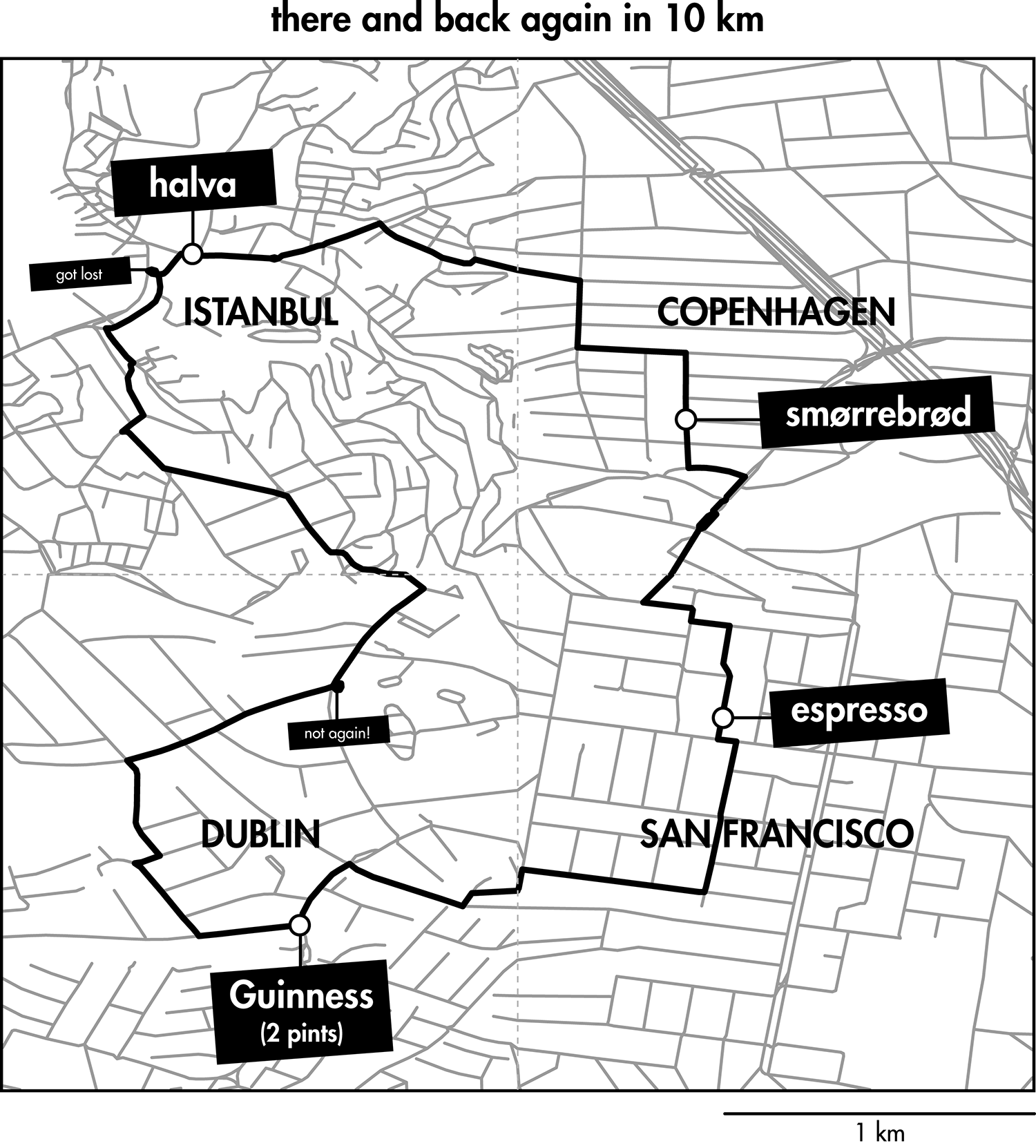

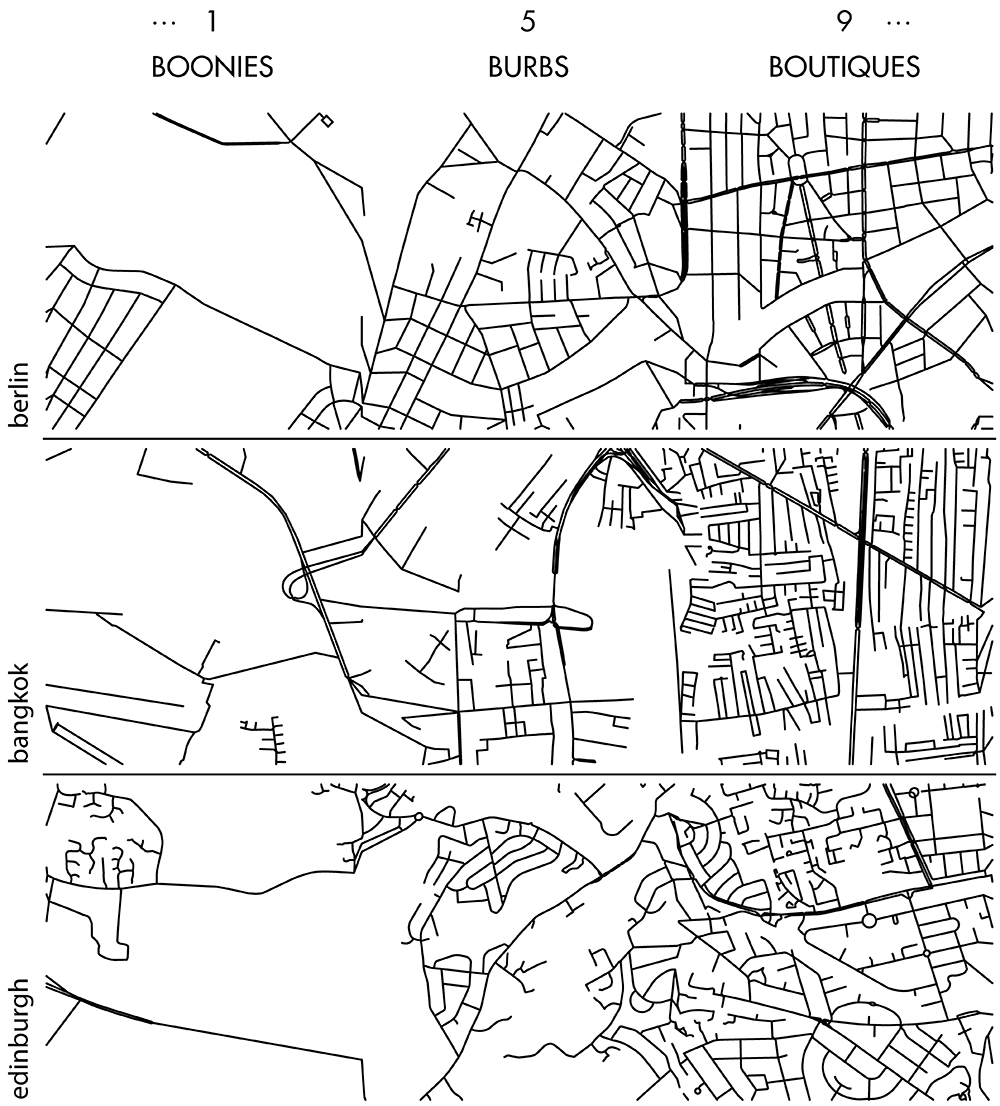

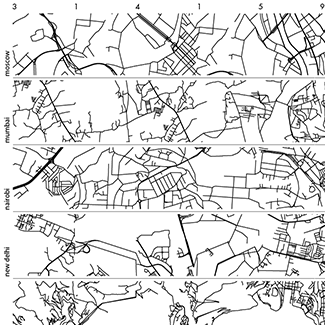

Happy 2018 `\pi` Day—Boonies, burbs and boutiques of `\pi`

Celebrate `\pi` Day (March 14th) and go to brand new places. Together with Jake Lever, this year we shrink the world and play with road maps.

Streets from across the world are seamlessly stitched together. Finally, a halva shop on the same block!

Intriguing and personal patterns of urban development for each city appear in the Boonies, Burbs and Boutiques series.

No color—just lines. Lines from Marrakesh, Prague, Istanbul, Nice and other destinations for the mind and the heart.

The art is featured in Pi City on the Scientific American SA Visual blog.

Check out art from previous years: 2013 `\pi` Day and 2014 `\pi` Day, 2015 `\pi` Day, 2016 `\pi` Day and 2017 `\pi` Day.

Machine learning: supervised methods (SVM & kNN)

Supervised learning algorithms extract general principles from observed examples guided by a specific prediction objective.

We examine two very common supervised machine learning methods: linear support vector machines (SVM) and k-nearest neighbors (kNN).

SVM is often less computationally demanding than kNN and is easier to interpret, but it can identify only a limited set of patterns. On the other hand, kNN can find very complex patterns, but its output is more challenging to interpret.

We illustrate SVM using a data set in which points fall into two categories, which are separated in SVM by a straight line "margin". SVM can be tuned using a parameter that influences the width and location of the margin, permitting points to fall within the margin or on the wrong side of the margin. We then show how kNN relaxes explicit boundary definitions, such as the straight line in SVM, and how kNN too can be tuned to create more robust classification.
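The kNN side of this comparison is simple enough to sketch directly. The training points and labels below are invented, and `k` is the tuning parameter: larger values give smoother, more robust class boundaries.

```python
import math
from collections import Counter


def knn_predict(train, query, k=3):
    """Classify `query` by majority vote among its k nearest training points.

    `train` is a list of ((x, y), label) pairs; distance is Euclidean.
    """
    neighbors = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


# Hypothetical two-class training set.
train = [
    ((1, 1), "A"), ((1, 2), "A"), ((2, 1), "A"),
    ((6, 6), "B"), ((7, 6), "B"), ((6, 7), "B"),
]
print(knn_predict(train, (2, 2), k=3))
print(knn_predict(train, (6, 5), k=3))
```

No boundary is ever written down. The classification is defined entirely by the stored examples, which is why kNN can follow complex patterns but is harder to interpret than a single separating line.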

Bzdok, D., Krzywinski, M. & Altman, N. (2018) Points of Significance: Machine learning: supervised methods. Nature Methods 15:5–6.

Background reading

Bzdok, D., Krzywinski, M. & Altman, N. (2017) Points of Significance: Machine learning: a primer. Nature Methods 14:1119–1120.

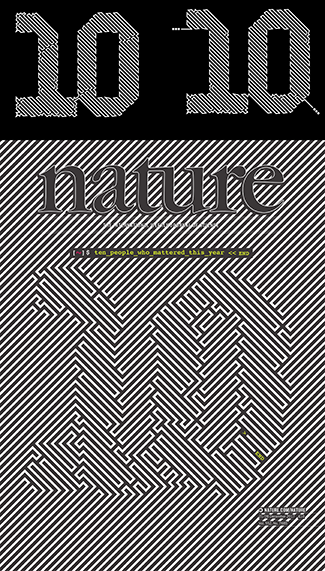

Human Versus Machine

Balancing subjective design with objective optimization.

In a Nature graphics blog article, I present my process behind designing the stark black-and-white Nature 10 cover.

Nature 10, 18 December 2017

Machine learning: a primer

Machine learning extracts patterns from data without explicit instructions.

In this primer, we focus on essential ML principles: a modeling strategy that lets the data speak for themselves, to the extent possible.

The benefits of ML arise from its use of a large number of tuning parameters or weights, which control the algorithm’s complexity and are estimated from the data using numerical optimization. Often ML algorithms are motivated by heuristics such as models of interacting neurons or natural evolution—even if the underlying mechanism of the biological system being studied is substantially different. The utility of ML algorithms is typically assessed empirically by how well extracted patterns generalize to new observations.

We present a data scenario in which we fit to a model with 5 predictors using polynomials and show what to expect from ML when noise and sample size vary. We also demonstrate the consequences of excluding an important predictor or including a spurious one.

Bzdok, D., Krzywinski, M. & Altman, N. (2017) Points of Significance: Machine learning: a primer. Nature Methods 14:1119–1120.

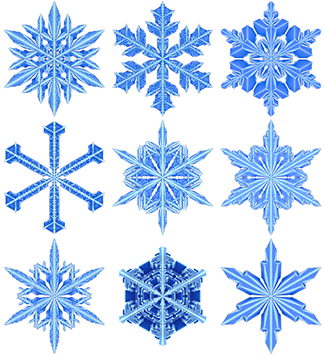

Snowflake simulation

Symmetric, beautiful and unique.

Just in time for the season, I've simulated a snow-pile of snowflakes based on the Gravner-Griffeath model.

The work is described as a wintertime tale in In Silico Flurries: Computing a world of snow, co-authored with Jake Lever on the Scientific American SA Blog.

Gravner, J. & Griffeath, D. (2007) Modeling Snow Crystal Growth II: A mesoscopic lattice map with plausible dynamics.

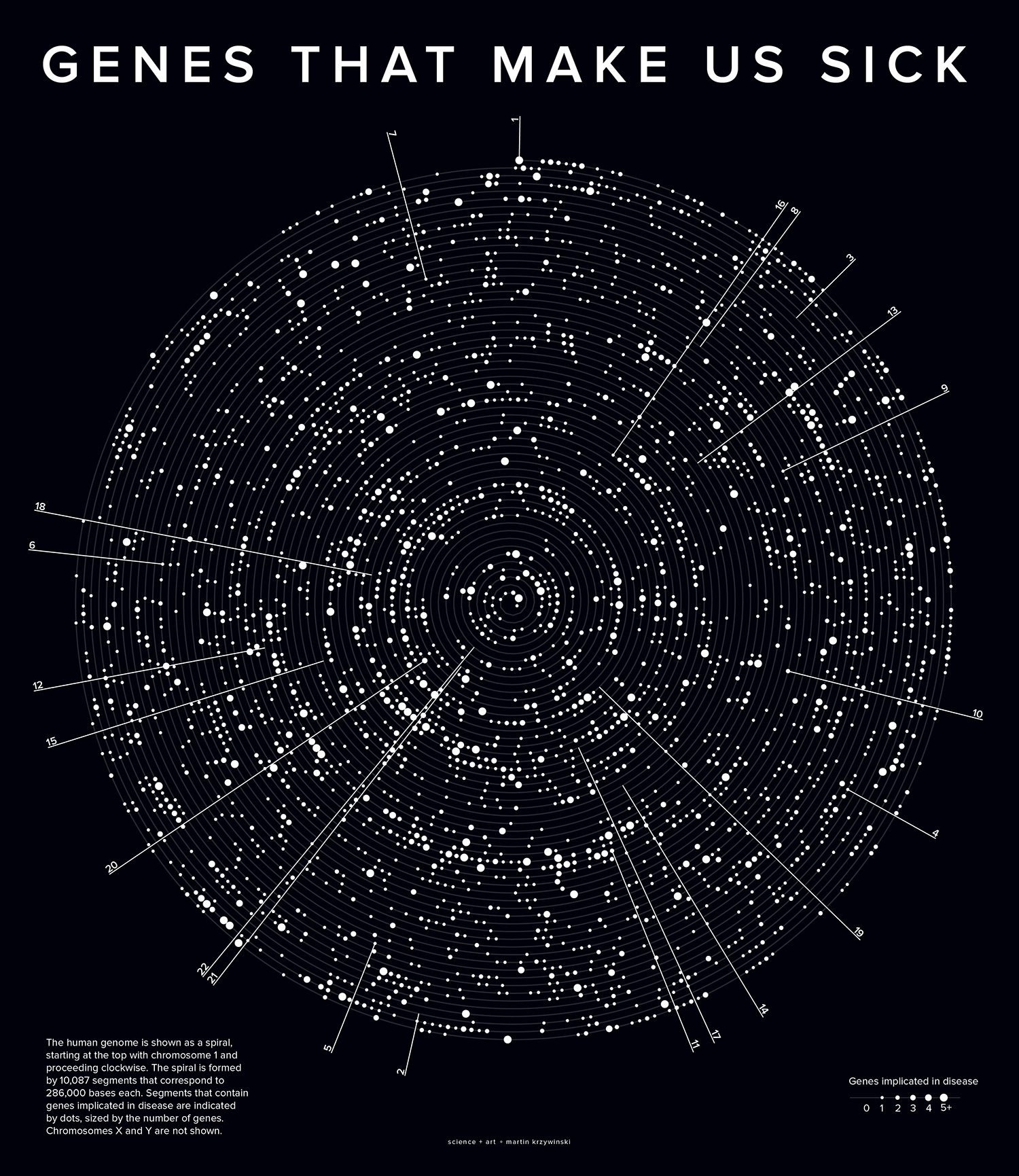

Genes that make us sick

Where disease hides in the genome.

My illustration of the location of genes in the human genome that are implicated in disease appears in The Objects that Power the Global Economy, a book by Quartz.

Ensemble methods: Bagging and random forests

Many heads are better than one.

We introduce two common ensemble methods: bagging and random forests. Both of these methods repeat a statistical analysis on a bootstrap sample to improve the accuracy of the predictor. Our column shows these methods as applied to Classification and Regression Trees.

For example, we can sample the space of values more finely when using bagging with regression trees because each sample has potentially different boundaries at which the tree splits.

Random forests generate a large number of trees by not only generating bootstrap samples but also randomly choosing which predictor variables are considered at each split in the tree.
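Bagging can be sketched with the smallest possible regression tree, a single-split "stump". The data, the number of trees and the function names are assumptions for this example. With only one predictor there is nothing to subsample at each split, so the random forest step is not shown.

```python
import random
from statistics import mean


def fit_stump(points):
    """Fit a one-split regression tree; returns (threshold, left_mean, right_mean)."""
    points = sorted(points)
    best = None
    for i in range(1, len(points)):
        if points[i - 1][0] == points[i][0]:
            continue  # cannot split between identical x values
        left = [y for _, y in points[:i]]
        right = [y for _, y in points[i:]]
        ml, mr = mean(left), mean(right)
        sse = sum((y - ml) ** 2 for y in left) + sum((y - mr) ** 2 for y in right)
        if best is None or sse < best[0]:
            best = (sse, (points[i - 1][0] + points[i][0]) / 2, ml, mr)
    if best is None:  # all x identical: predict the overall mean
        m = mean(y for _, y in points)
        return (float("inf"), m, m)
    return best[1:]


def predict_stump(stump, x):
    threshold, left_mean, right_mean = stump
    return left_mean if x < threshold else right_mean


def bagged_stumps(points, n_trees=200, seed=0):
    """Fit one stump per bootstrap sample (sampling with replacement)."""
    rng = random.Random(seed)
    return [fit_stump(rng.choices(points, k=len(points))) for _ in range(n_trees)]


def predict_bagged(stumps, x):
    """Average the predictions of all stumps."""
    return mean(predict_stump(s, x) for s in stumps)


# Hypothetical noisy step function.
noise = random.Random(1)
data = [(x, (0.0 if x < 10 else 1.0) + noise.gauss(0, 0.1)) for x in range(20)]
stumps = bagged_stumps(data)
print(sorted({s[0] for s in stumps}))            # split points vary between samples
print([round(predict_bagged(stumps, x), 2) for x in (2, 9, 10, 17)])
```

Because each bootstrap sample places its split at a slightly different boundary, the averaged prediction changes more gradually near the step than any single stump does.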

Krzywinski, M. & Altman, N. (2017) Points of Significance: Ensemble methods: bagging and random forests. Nature Methods 14:933–934.

Background reading

Krzywinski, M. & Altman, N. (2017) Points of Significance: Classification and regression trees. Nature Methods 14:757–758.

Classification and regression trees

Decision trees are a powerful but simple prediction method.

Decision trees classify data by splitting it along the predictor axes into partitions with homogeneous values of the dependent variable. Unlike logistic or linear regression, CART does not develop a prediction equation. Instead, data are predicted by a series of binary decisions based on the boundaries of the splits. Decision trees are very effective and the resulting rules are readily interpreted.

Trees can be built using different metrics that measure how well the splits divide up the data classes: Gini index, entropy or misclassification error.
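The three impurity measures can be written down in a few lines. The labels and function names below are invented for illustration; a tree-growing algorithm would evaluate candidate splits with a weighted impurity like `split_impurity` and keep the split with the lowest value.

```python
import math
from collections import Counter


def class_proportions(labels):
    counts = Counter(labels)
    return [c / len(labels) for c in counts.values()]


def gini(labels):
    """Gini index: 1 - sum of squared class proportions."""
    return 1.0 - sum(p * p for p in class_proportions(labels))


def entropy(labels):
    """Shannon entropy of the class proportions, in bits."""
    return -sum(p * math.log2(p) for p in class_proportions(labels))


def misclassification(labels):
    """Error rate when predicting the majority class."""
    return 1.0 - max(class_proportions(labels))


def split_impurity(left, right, measure):
    """Impurity of a split: size-weighted average over the two partitions."""
    n = len(left) + len(right)
    return len(left) / n * measure(left) + len(right) / n * measure(right)


mixed = ["a", "a", "b", "b"]
print(gini(mixed), entropy(mixed), misclassification(mixed))
print(split_impurity(["a", "a"], ["b", "b"], gini))       # a perfect split
print(split_impurity(["a", "b"], ["a", "b"], gini))       # an uninformative split
```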

When the dependent variable is quantitative and not categorical, regression trees are used. Here, the data are still split but now the dependent variable is estimated by the average within the split boundaries. Tree growth can be controlled using the complexity parameter, a measure of the relative improvement of each new split.

Individual trees can be very sensitive to minor changes in the data and even better prediction can be achieved by exploiting this variability. Using ensemble methods, we can grow multiple trees from the same data.

Krzywinski, M. & Altman, N. (2017) Points of Significance: Classification and regression trees. Nature Methods 14:757–758.

Background reading

Lever, J., Krzywinski, M. & Altman, N. (2016) Points of Significance: Logistic regression. Nature Methods 13:541-542.

Altman, N. & Krzywinski, M. (2015) Points of Significance: Multiple Linear Regression Nature Methods 12:1103-1104.

Lever, J., Krzywinski, M. & Altman, N. (2016) Points of Significance: Classifier evaluation. Nature Methods 13:603-604.

Lever, J., Krzywinski, M. & Altman, N. (2016) Points of Significance: Model Selection and Overfitting. Nature Methods 13:703-704.

Lever, J., Krzywinski, M. & Altman, N. (2016) Points of Significance: Regularization. Nature Methods 13:803-804.

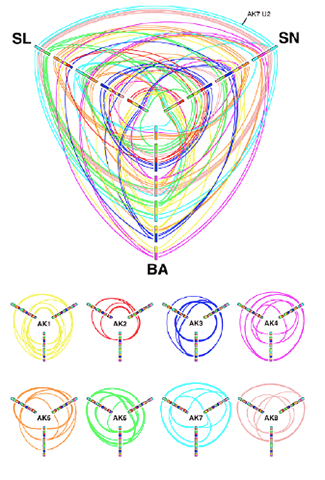

Personalized Oncogenomics Program 5 Year Anniversary Art

The artwork was created in collaboration with my colleagues at the Genome Sciences Center to celebrate the 5 year anniversary of the Personalized Oncogenomics Program (POG).

The Personalized Oncogenomics Program (POG) is a collaborative research study including many BC Cancer Agency oncologists, pathologists and other clinicians along with Canada's Michael Smith Genome Sciences Centre with support from BC Cancer Foundation.

The aim of the program is to sequence, analyze and compare the genome of each patient's cancer (the entire DNA and RNA inside tumor cells) in order to understand what is enabling it and to identify less toxic and more effective treatment options.

Principal component analysis

PCA helps you interpret your data, but it will not always find the important patterns.

Principal component analysis (PCA) simplifies the complexity in high-dimensional data by reducing its number of dimensions.

To retain trends and patterns in the reduced representation, PCA finds linear combinations of canonical dimensions that maximize the variance of the projection of the data.

PCA is helpful in visualizing high-dimensional data and scatter plots based on 2-dimensional PCA can reveal clusters.
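For two variables, the first principal component can be found in closed form from the covariance matrix, which makes the "maximize the variance of the projection" idea easy to see. The data points and function name are invented. Higher-dimensional data would need a general eigenvalue or SVD routine.

```python
import math
from statistics import mean


def pca_2d(points):
    """First principal component of 2D data via the 2x2 covariance matrix.

    Returns the unit direction of maximum variance, the fraction of total
    variance it explains, and the projection (score) of each point.
    """
    mx = mean(x for x, _ in points)
    my = mean(y for _, y in points)
    n = len(points)
    sxx = sum((x - mx) ** 2 for x, _ in points) / (n - 1)
    syy = sum((y - my) ** 2 for _, y in points) / (n - 1)
    sxy = sum((x - mx) * (y - my) for x, y in points) / (n - 1)
    # Angle of the major axis of a symmetric 2x2 matrix.
    theta = 0.5 * math.atan2(2 * sxy, sxx - syy)
    direction = (math.cos(theta), math.sin(theta))
    # Eigenvalues are the variances along the two principal axes.
    half_gap = math.hypot((sxx - syy) / 2, sxy)
    lam1 = (sxx + syy) / 2 + half_gap
    lam2 = (sxx + syy) / 2 - half_gap
    scores = [(x - mx) * direction[0] + (y - my) * direction[1] for x, y in points]
    return direction, lam1 / (lam1 + lam2), scores


# Hypothetical data lying close to the line y = x.
points = [(1, 1.1), (2, 1.9), (3, 3.2), (4, 3.8), (5, 5.1)]
direction, explained, scores = pca_2d(points)
print(direction, explained)
```

Here a single coordinate along the first component keeps nearly all of the variance, so the two-dimensional data can be summarized in one dimension with little loss.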

Altman, N. & Krzywinski, M. (2017) Points of Significance: Principal component analysis. Nature Methods 14:641–642.

Background reading

Altman, N. & Krzywinski, M. (2017) Points of Significance: Clustering. Nature Methods 14:545–546.

`k` index: a weightlifting and Crossfit performance measure

Similar to the `h` index in publishing, the `k` index is a measure of fitness performance.

To achieve a `k` index for a movement you must perform `k` unbroken reps at `k`% 1RM.

The expected value for the `k` index is probably somewhere in the range of `k = 26` to `k=35`, with higher values progressively more difficult to achieve.

In my `k` index introduction article I provide a detailed explanation, a rep scheme table and a WOD example.

Dark Matter of the English Language—the unwords

I've applied the char-rnn recurrent neural network to generate new words, names of drugs and countries.

The effect is intriguing and facetious—yes, those are real words.

But these are not: necronology, abobionalism, gabdologist, and nonerify.

These places only exist in the mind: Conchar and Pobacia, Hzuuland, New Kain, Rabibus and Megee Islands, Sentip and Sitina, Sinistan and Urzenia.

And these are the imaginary afflictions of the imagination: ictophobia, myconomascophobia, and talmatomania.

And these, of the body: ophalosis, icabulosis, mediatopathy and bellotalgia.

Want to name your baby? Or someone else's baby? Try Ginavietta Xilly Anganelel or Ferandulde Hommanloco Kictortick.

When taking new therapeutics, never mix salivac and labromine. And don't forget that abadarone is best taken on an empty stomach.

And nothing increases the chance of getting that grant funded more than proposing the study of a new –ome! We really need someone to look into the femome and manome.

Dark Matter of the Genome—the nullomers

An exploration of things that are missing in the human genome. The nullomers.
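Finding nullomers in a short sequence can be sketched by brute force: enumerate every possible word of length `k` and keep those that never occur. This is only workable for small `k`, it ignores the reverse-complement strand, and it is not the method of the cited paper; the function name and test sequence are assumptions.

```python
from itertools import product


def nullomers(sequence, k):
    """Return all DNA words of length k that are absent from the sequence."""
    present = {sequence[i:i + k] for i in range(len(sequence) - k + 1)}
    all_words = ("".join(p) for p in product("ACGT", repeat=k))
    return sorted(word for word in all_words if word not in present)


seq = "ACGTACGT"
print(nullomers(seq, 1))       # every base is present
print(len(nullomers(seq, 2)))  # absent 2-mers out of 16 possible
```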

Julia Herold, Stefan Kurtz and Robert Giegerich. Efficient computation of absent words in genomic sequences. BMC Bioinformatics (2008) 9:167

Clustering

Clustering finds patterns in data—whether they are there or not.

We've already seen how data can be grouped into classes in our series on classifiers. In this column, we look at how data can be grouped by similarity in an unsupervised way.

We look at two common clustering approaches: `k`-means and hierarchical clustering. All clustering methods share the same approach: they first calculate similarity and then use it to group objects into clusters. The details of the methods, and outputs, vary widely.
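A bare-bones `k`-means sketch shows the two alternating steps: assign each point to its nearest center, then move each center to the mean of its points. The data, the fixed iteration count and the random initialization from the data points are assumptions of this example.

```python
import math
import random


def kmeans(points, k, iterations=20, seed=0):
    """Cluster 2D points with k-means; returns (centers, clusters)."""
    rng = random.Random(seed)
    centers = rng.sample(points, k)  # start from k distinct data points
    clusters = []
    for _ in range(iterations):
        # Assignment step: each point joins its nearest center.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda j: math.dist(p, centers[j]))
            clusters[nearest].append(p)
        # Update step: each center moves to the mean of its cluster.
        centers = [
            tuple(sum(c) / len(cluster) for c in zip(*cluster)) if cluster else centers[j]
            for j, cluster in enumerate(clusters)
        ]
    return centers, clusters


# Two well-separated hypothetical groups.
points = [(0, 0), (0, 1), (1, 0), (1, 1), (9, 9), (9, 10), (10, 9), (10, 10)]
centers, clusters = kmeans(points, k=2)
print(sorted(centers))
```

The algorithm returns `k` clusters for any input, including data with no group structure at all.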

Altman, N. & Krzywinski, M. (2017) Points of Significance: Clustering. Nature Methods 14:545–546.

Background reading

Lever, J., Krzywinski, M. & Altman, N. (2016) Points of Significance: Logistic regression. Nature Methods 13:541-542.

Lever, J., Krzywinski, M. & Altman, N. (2016) Points of Significance: Classifier evaluation. Nature Methods 13:603-604.

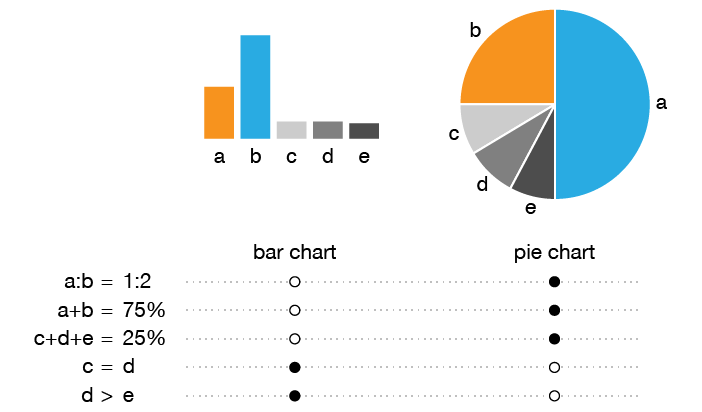

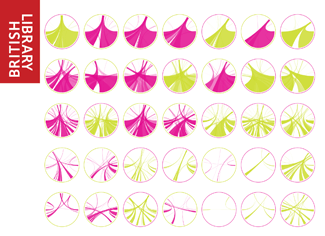

What's wrong with pie charts?

In this redesign of a pie chart figure from a Nature Medicine article [1], I look at how to organize and present a large number of categories.

I first discuss some of the benefits of a pie chart—there are few and specific—and its shortcomings—there are few but fundamental.

I then walk through the redesign process by showing how the tumor categories can be shown more clearly if they are first aggregated into a small number of groups.

(bottom left) Figure 2b from Zehir et al. Mutational landscape of metastatic cancer revealed from prospective clinical sequencing of 10,000 patients. (2017) Nature Medicine doi:10.1038/nm.4333

Tabular Data

Tabulating the number of objects in categories of interest dates back to the earliest records of commerce and population censuses.

After 30 columns, this is our first one without a single figure. Sometimes a table is all you need.

In this column, we discuss nominal categorical data, in which data points are assigned to categories in which there is no implied order. We introduce one-way and two-way tables and the `\chi^2` and Fisher's exact tests.
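Both tests can be sketched for a 2×2 table with the standard library. The counts are invented and the function names are assumptions. The two-sided Fisher P value here sums the probabilities of all tables that are no more likely than the observed one, which is one common convention.

```python
from math import comb


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square statistic for the table [[a, b], [c, d]]."""
    n = a + b + c + d
    rows = (a + b, c + d)
    cols = (a + c, b + d)
    observed = ((a, b), (c, d))
    stat = 0.0
    for i in range(2):
        for j in range(2):
            expected = rows[i] * cols[j] / n
            stat += (observed[i][j] - expected) ** 2 / expected
    return stat


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test P value for the table [[a, b], [c, d]]."""
    row1, row2, col1 = a + b, c + d, a + c
    n = row1 + row2

    def prob(x):
        # Hypergeometric probability of x in the top-left cell.
        return comb(row1, x) * comb(row2, col1 - x) / comb(n, col1)

    p_observed = prob(a)
    lowest, highest = max(0, col1 - row2), min(row1, col1)
    return sum(
        prob(x) for x in range(lowest, highest + 1)
        if prob(x) <= p_observed * (1 + 1e-9)  # tolerance for float ties
    )


# Hypothetical 2x2 table of counts.
print(chi_square_2x2(3, 1, 1, 3))
print(fisher_exact_two_sided(3, 1, 1, 3))
```

With counts this small the chi-square approximation is unreliable, which is the situation in which the exact test is usually preferred.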

Altman, N. & Krzywinski, M. (2017) Points of Significance: Tabular data. Nature Methods 14:329–330.

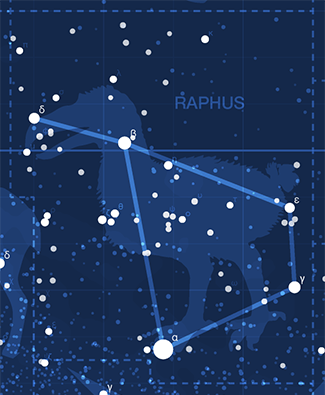

Happy 2017 `\pi` Day—Star Charts, Creatures Once Living and a Poem

on a brim of echo,

capsized chamber

drawn into our constellation, and cooling.

—Paolo Marcazzan

Celebrate `\pi` Day (March 14th) with a star chart of the digits. The charts draw 40,000 stars generated from the first 12 million digits.

The 80 constellations are extinct animals and plants. Here you'll find old friends and new stories. Read about how Desmodus is always trying to escape or how Megalodon terrorizes the poor Tecopa! Most constellations have a story.

This year I collaborate with Paolo Marcazzan, a Canadian poet, who contributes a poem, Of Black Body, about space and things we might find and lose there.

Check out art from previous years: 2013 `\pi` Day, 2014 `\pi` Day, 2015 `\pi` Day and 2016 `\pi` Day.

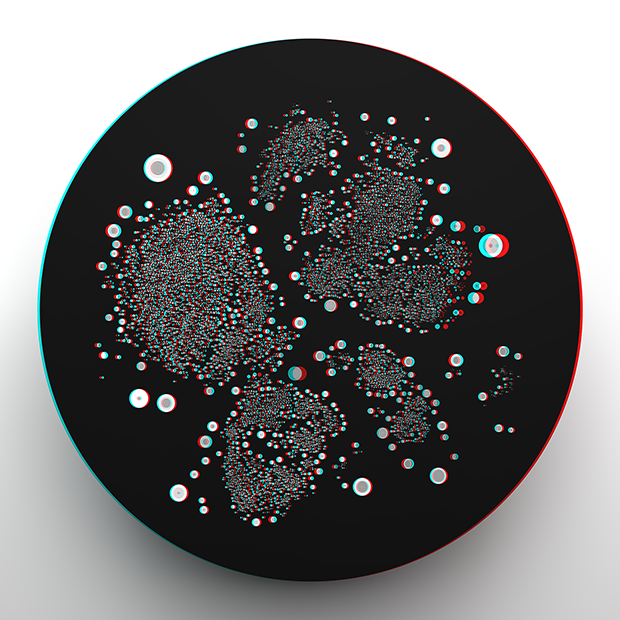

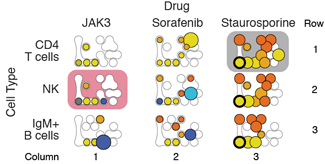

Data in New Dimensions: convergence of art, genomics and bioinformatics

Art is science in love.

— E.F. Weisslitz

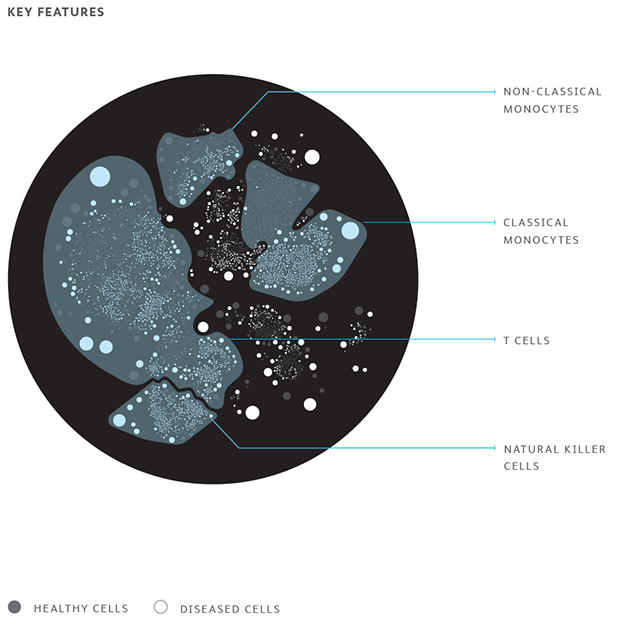

A behind-the-scenes look at the making of our stereoscopic images, which were on display at the AGBT 2017 Conference in February. The art is a creative collaboration with Becton Dickinson and The Linus Group.

Its creation began with the concept of differences, and my writeup of the creative and design process focuses on storytelling and how the concept of differences is incorporated into the art.

Oh, and this might be a good time to pick up some red-blue 3D glasses.

Interpreting P values

A P value measures a sample’s compatibility with a hypothesis, not the truth of the hypothesis.

This month we continue our discussion about `P` values and focus on the fact that a `P` value is a probability statement about the observed sample in the context of a hypothesis, not about the hypothesis being tested.

Given that we are always interested in making inferences about hypotheses, we discuss how `P` values can be used to do this by way of the Benjamin-Berger bound, `\bar{B}`, on the Bayes factor, `B`.
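
To give a feel for the size of this bound, here is a minimal sketch. It assumes the commonly cited form `\bar{B} = -1/(e P \ln P)`, valid for `P < 1/e`, which is not spelled out in the text above; the function name is hypothetical.

```python
from math import e, log

def bayes_factor_bound(p):
    """Upper bound -1 / (e * p * ln p) on the Bayes factor in favor of the
    alternative hypothesis. Assumed form, defined only for 0 < p < 1/e."""
    if not 0 < p < 1 / e:
        raise ValueError("bound is defined only for 0 < p < 1/e")
    return -1.0 / (e * p * log(p))

for p in (0.05, 0.01, 0.001):
    print(f"P = {p}: Bayes factor is at most {bayes_factor_bound(p):.2f}")
```

Under this assumed form, `P = 0.05` corresponds to a Bayes factor of at most about 2.5, which is much weaker evidence than the P value might suggest on its own.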

Heuristics such as these are valuable in helping to interpret `P` values, though we stress that `P` values vary from sample to sample and hence many sources of evidence need to be examined before drawing scientific conclusions.

Altman, N. & Krzywinski, M. (2017) Points of Significance: Interpreting P values. Nature Methods 14:213–214.

Background reading

Krzywinski, M. & Altman, N. (2017) Points of significance: P values and the search for significance. Nature Methods 14:3–4.

Krzywinski, M. & Altman, N. (2013) Points of significance: Significance, P values and t–tests. Nature Methods 10:1041–1042.

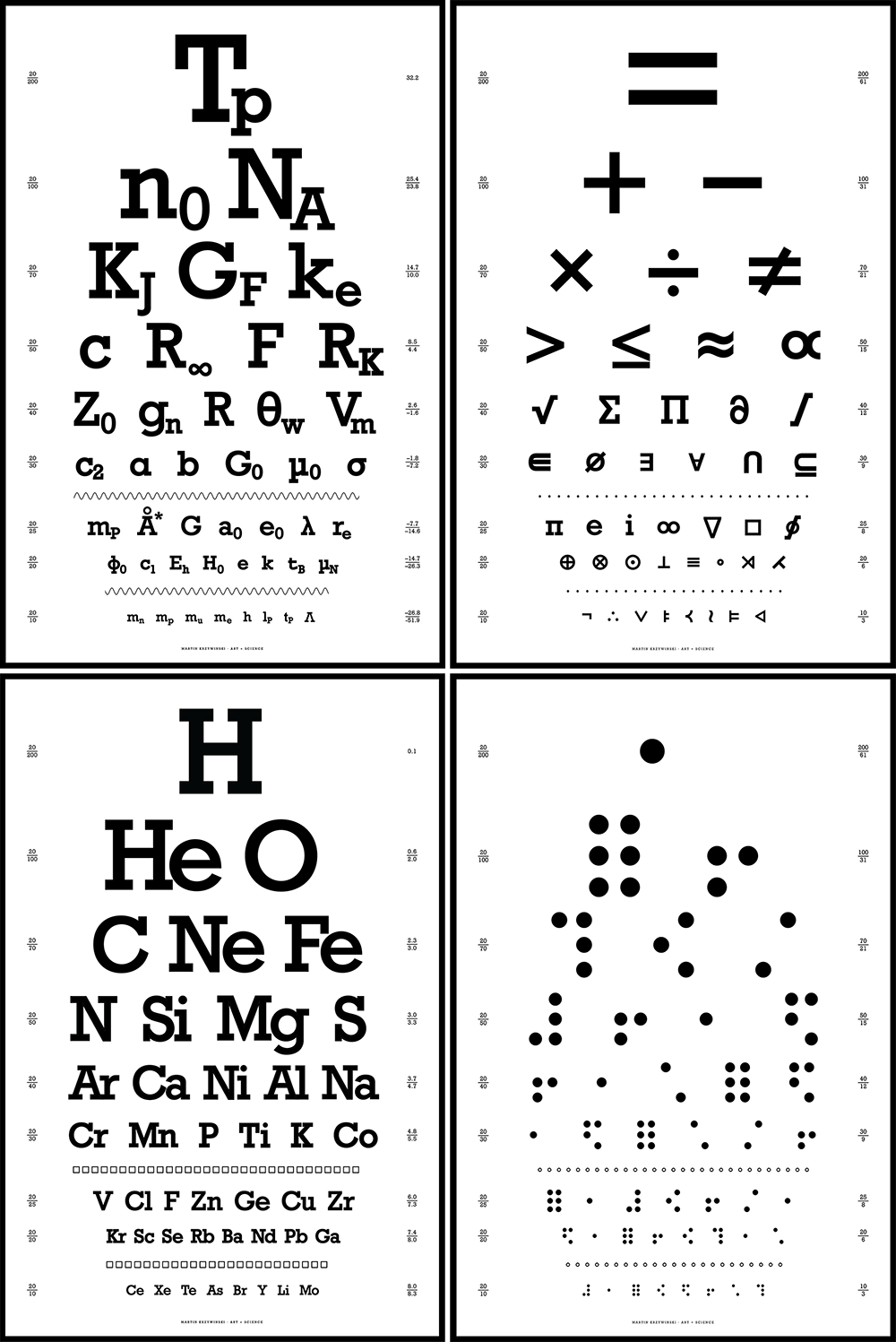

Snellen Charts—Typography to Really Look at

Another collection of typographical posters. These ones really ask you to look.

The charts show a variety of interesting symbols and operators found in science and math. The design is in the style of a Snellen chart and typeset with the Rockwell font.

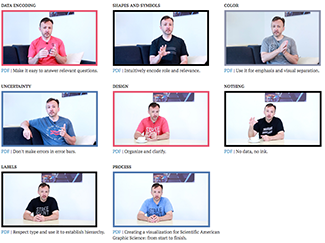

Essentials of Data Visualization—8-part video series

In collaboration with Phil Poronnik and Kim Bell-Anderson at the University of Sydney, I'm delighted to share with you our 8-part video series about how to think about drawing data and communicating science.

We've created 8 videos, each focusing on a different essential idea in data visualization: encoding, shapes, color, uncertainty, design, drawing missing or unobserved data, labels and process.

The videos were designed as teaching materials. Each video comes with a slide deck and exercises.

P values and the search for significance

Little P value

What are you trying to say

Of significance?

—Steve Ziliak

We've written about P values before and warned readers about common misconceptions about them, which are so rife that the American Statistical Association itself has a long statement about them.

This month's column is the first of a two-part article about P values. Here we look at 'P value hacking' and 'data dredging', which are questionable practices that invalidate the correct interpretation of P values.

We also illustrate how P values can lead us astray by asking "What is the smallest P value we can expect if the null hypothesis is true but we have done many tests, either explicitly or implicitly?"
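
The question can be made concrete with a small calculation. This sketch assumes the tests are independent and that each P value is uniformly distributed on [0, 1] when the null hypothesis is true; the function names are hypothetical.

```python
def prob_any_below(alpha, n_tests):
    """Chance that at least one of n independent null P values falls below alpha."""
    return 1.0 - (1.0 - alpha) ** n_tests

def expected_min_p(n_tests):
    """Expected value of the smallest of n independent uniform P values."""
    return 1.0 / (n_tests + 1)

for n in (1, 5, 20, 100):
    print(
        f"{n:>3} tests: P(any P < 0.05) = {prob_any_below(0.05, n):.3f}, "
        f"expected smallest P = {expected_min_p(n):.4f}"
    )
```

Under these assumptions, 20 tests of true null hypotheses give roughly a 64% chance of at least one P value below 0.05.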

Incidentally, this is our first column in which the standfirst is a haiku.

Altman, N. & Krzywinski, M. (2017) Points of Significance: P values and the search for significance. Nature Methods 14:3–4.

Background reading

Krzywinski, M. & Altman, N. (2013) Points of significance: Significance, P values and t–tests. Nature Methods 10:1041–1042.

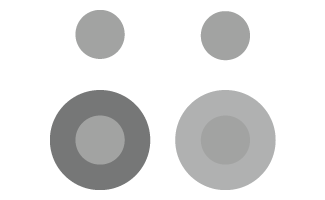

Intuitive Design

Appeal to intuition when designing with value judgments in mind.

Figure clarity and concision are improved when the selection of shapes and colors is grounded in the Gestalt principles, which describe how we visually perceive and organize information.

The Gestalt principles are value free. For example, they tell us how we group objects but do not speak to any meaning that we might intuitively infer from visual characteristics.

This month, we discuss how appealing to such intuitions—related to shapes, colors and spatial orientation—can help us add information to a figure as well as anticipate and encourage useful interpretations.

Krzywinski, M. (2016) Points of View: Intuitive Design. Nature Methods 13:895.

Regularization

Constraining the magnitude of parameters of a model can control its complexity.

This month we continue our discussion about model selection and evaluation and address how to choose a model that avoids both overfitting and underfitting.

Ideally, we want to avoid having either an underfitted model, which is usually a poor fit to the training data, or an overfitted model, which is a good fit to the training data but not to new data.

Regularization is a process that penalizes the magnitude of model parameters. Instead of minimizing only the sum of squared errors, `\mathrm{SSE} = \sum_i (y_i - \hat{y}_i)^2`, as is done in an ordinary fit, we minimize the SSE plus a penalty proportional to the sum of the model's squared parameters, `\mathrm{SSE} + \lambda \sum_i \hat{\beta}^2_i`.
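
The effect of the penalty is easiest to see in the simplest case. The sketch below assumes a single predictor and no intercept, so the model is `\hat{y} = \hat{\beta} x`; setting the derivative of `\mathrm{SSE} + \lambda \hat{\beta}^2` to zero gives `\hat{\beta} = \sum_i x_i y_i / (\sum_i x_i^2 + \lambda)`. The data and the function name are hypothetical.

```python
def ridge_slope(xs, ys, lam):
    """Slope that minimizes sum((y - b*x)^2) + lam * b^2 (one predictor, no intercept)."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)

# Hypothetical data lying exactly on y = 2x
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]

for lam in (0.0, 1.0, 14.0, 100.0):
    print(f"lambda = {lam:>5}: slope = {ridge_slope(xs, ys, lam):.3f}")
```

With `\lambda = 0` the ordinary least-squares slope is recovered, and increasing `\lambda` shrinks the slope toward zero.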

Lever, J., Krzywinski, M. & Altman, N. (2016) Points of Significance: Regularization. Nature Methods 13:803-804.

Background reading

Lever, J., Krzywinski, M. & Altman, N. (2016) Points of Significance: Model Selection and Overfitting. Nature Methods 13:703-704.

Lever, J., Krzywinski, M. & Altman, N. (2016) Points of Significance: Classifier evaluation. Nature Methods 13:603-604.

Lever, J., Krzywinski, M. & Altman, N. (2016) Points of Significance: Logistic regression. Nature Methods 13:541-542.

Model Selection and Overfitting

With four parameters I can fit an elephant and with five I can make him wiggle his trunk. —John von Neumann.

By increasing the complexity of a model, it is easy to make it fit to data perfectly. Does this mean that the model is perfectly suitable? No.

When a model has a relatively large number of parameters, it is likely to be influenced by the noise in the data, which varies across observations, as much as any underlying trend, which remains the same. Such a model is overfitted—it matches training data well but does not generalize to new observations.

We discuss the use of training, validation and testing data sets and how they can be used, with methods such as cross-validation, to avoid overfitting.
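
A minimal sketch of the idea, using simulated data and hypothetical helper names. It compares a straight-line fit with a deliberately overfitted "memorizer" (a nearest-neighbor lookup that reproduces every training point exactly) and scores both with 5-fold cross-validation. The memorizer is a stand-in for an overly complex model, not a method taken from the column.

```python
import random

def fit_line(xs, ys):
    """Least-squares straight line; returns a prediction function."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    intercept = my - slope * mx
    return lambda x: intercept + slope * x

def fit_memorizer(xs, ys):
    """Overfitted model: predict the response of the nearest training point."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]

def mse(model, xs, ys):
    """Mean squared prediction error of a model on the given points."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def cross_validated_mse(fit, xs, ys, k=5):
    """Average held-out MSE over k interleaved folds."""
    errors = []
    for fold in range(k):
        train = [i for i in range(len(xs)) if i % k != fold]
        held_out = [i for i in range(len(xs)) if i % k == fold]
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        errors.append(mse(model, [xs[i] for i in held_out], [ys[i] for i in held_out]))
    return sum(errors) / k

# Simulated data: linear trend plus noise
rng = random.Random(0)
xs = [float(i) for i in range(20)]
ys = [2.0 * x + rng.gauss(0.0, 3.0) for x in xs]

for name, fit in (("line", fit_line), ("memorizer", fit_memorizer)):
    train_error = mse(fit(xs, ys), xs, ys)
    cv_error = cross_validated_mse(fit, xs, ys)
    print(f"{name}: training MSE = {train_error:.2f}, cross-validated MSE = {cv_error:.2f}")
```

The memorizer has zero training error by construction, so only its held-out error reveals how it handles new observations.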

Lever, J., Krzywinski, M. & Altman, N. (2016) Points of Significance: Model Selection and Overfitting. Nature Methods 13:703-704.

Background reading

Lever, J., Krzywinski, M. & Altman, N. (2016) Points of Significance: Classifier evaluation. Nature Methods 13:603-604.

Lever, J., Krzywinski, M. & Altman, N. (2016) Points of Significance: Logistic regression. Nature Methods 13:541-542.

Classifier Evaluation

It is important to understand both what a classification metric expresses and what it hides.

We examine various metrics used to assess the performance of a classifier. We show that a single metric is insufficient to capture performance—for any metric, a variety of scenarios yield the same value.

We also discuss ROC curves and the area under the curve (AUC), and how their interpretation changes based on class balance.
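
A small sketch of how one metric can hide very different behavior. The two confusion matrices are hypothetical: one classifier performs equally well on both classes of a balanced data set, and the other labels everything negative on an imbalanced one.

```python
def metrics(tp, fn, fp, tn):
    """Accuracy, sensitivity and specificity from confusion-matrix counts."""
    total = tp + fn + fp + tn
    return {
        "accuracy": (tp + tn) / total,
        "sensitivity": tp / (tp + fn),  # fraction of positives detected
        "specificity": tn / (tn + fp),  # fraction of negatives detected
    }

# Hypothetical scenarios with 100 observations each
balanced = metrics(tp=40, fn=10, fp=10, tn=40)
always_negative = metrics(tp=0, fn=20, fp=0, tn=80)

for name, m in (("balanced", balanced), ("always negative", always_negative)):
    print(name, {key: round(value, 2) for key, value in m.items()})
```

Both scenarios have the same accuracy, yet the second classifier never detects a positive case.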

Lever, J., Krzywinski, M. & Altman, N. (2016) Points of Significance: Classifier evaluation. Nature Methods 13:603-604.

Background reading

Lever, J., Krzywinski, M. & Altman, N. (2016) Points of Significance: Logistic regression. Nature Methods 13:541-542.

Happy 2016 `\pi` Approximation, roughly speaking

Today is the day and it's hardly an approximation. In fact, `22/7` is a 20% more accurate representation of `\pi` than `3.14`!
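
The 20% figure can be checked with a few lines of arithmetic. This sketch reads "more accurate" as a smaller absolute error, which is an assumption about how the comparison was made.

```python
from math import pi

error_fraction = abs(22 / 7 - pi)  # absolute error of 22/7
error_decimal = abs(3.14 - pi)     # absolute error of 3.14

# Relative reduction in absolute error when using 22/7 instead of 3.14
improvement = 1 - error_fraction / error_decimal

print(f"|22/7 - pi| = {error_fraction:.6f}")
print(f"|3.14 - pi| = {error_decimal:.6f}")
print(f"error reduction = {improvement:.1%}")
```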

Time to celebrate, graphically. This year I do so with perfect packing of circles that embody the approximation.

By warping the circle by 8% along one axis, we can create a shape whose ratio of circumference to diameter, taken as twice the average radius, is 22/7.

If you prefer something more accurate, check out art from previous `\pi` days: 2013 `\pi` Day, 2014 `\pi` Day, 2015 `\pi` Day and 2016 `\pi` Day.

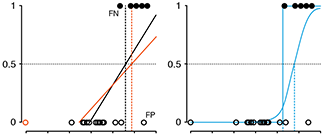

Logistic Regression

Regression can be used on categorical responses to estimate probabilities and to classify.

The next column in our series on regression deals with how to classify categorical data.

We show how linear regression can be used for classification and demonstrate that it can be unreliable in the presence of outliers. Using a logistic regression, which fits a linear model to the log odds ratio, improves robustness.

Logistic regression is solved numerically and in most cases, the maximum-likelihood estimates are unique and optimal. However, when the classes are perfectly separable, the numerical approach fails because there is an infinite number of solutions.
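
The trouble with perfectly separable classes can be seen by evaluating the log-likelihood directly. This sketch assumes a one-parameter model with no intercept, `P(y = 1) = 1/(1 + e^{-\beta x})`, and uses hypothetical data. For separable data the log-likelihood keeps increasing as `\beta` grows, so a numerical optimizer never settles on a finite estimate.

```python
from math import exp, log1p

def log_likelihood(beta, xs, ys):
    """Log-likelihood of the model P(y = 1) = 1 / (1 + exp(-beta * x))."""
    total = 0.0
    for x, y in zip(xs, ys):
        z = beta * x if y == 1 else -beta * x
        # log(sigmoid(z)) written as -log(1 + exp(-z))
        total -= log1p(exp(-z))
    return total

xs = [-2.0, -1.0, 1.0, 2.0]
separable = [0, 0, 1, 1]    # classes split cleanly at x = 0
overlapping = [0, 1, 0, 1]  # classes overlap

for beta in (0.5, 1.0, 10.0, 100.0):
    print(
        f"beta = {beta:>5}: separable {log_likelihood(beta, xs, separable):.3g}, "
        f"overlapping {log_likelihood(beta, xs, overlapping):.3g}"
    )
```

For the overlapping labels the log-likelihood falls again once `\beta` becomes large, which is what allows a finite maximum to exist.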

Lever, J., Krzywinski, M. & Altman, N. (2016) Points of Significance: Logistic regression. Nature Methods 13:541-542.

Background reading

Altman, N. & Krzywinski, M. (2016) Points of Significance: Regression diagnostics. Nature Methods 13:385-386.

Altman, N. & Krzywinski, M. (2015) Points of Significance: Multiple Linear Regression. Nature Methods 12:1103-1104.

Altman, N. & Krzywinski, M. (2015) Points of significance: Simple Linear Regression. Nature Methods 12:999-1000.

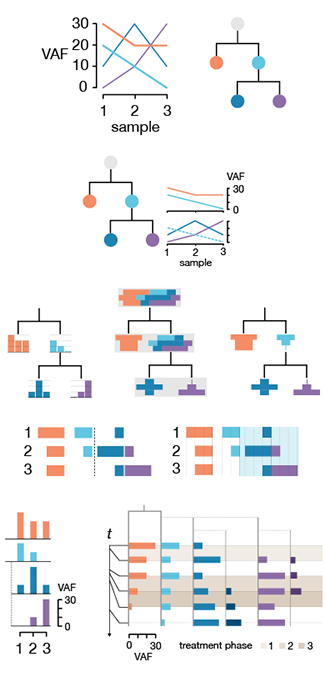

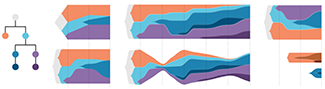

Visualizing Clonal Evolution in Cancer

Genomic instability is one of the defining characteristics of cancer and within a tumor, which is an ever-evolving population of cells, there are many genomes. Mutations accumulate and propagate to create subpopulations and these groups of cells, called clones, may respond differently to treatment.

It is now possible to sequence individual cells within a tumor to create a profile of genomes. This profile changes with time, both in the kinds of mutation that are found and in their proportion in the overall population.

Clone evolution diagrams visualize these data. These diagrams can be qualitative, showing only trends, or quantitative, showing temporal and population changes to scale. In this Molecular Cell forum article I provide guidelines for drawing these diagrams, focusing on how to use color and navigational elements, such as grids, to clarify the relationships between clones.

I'd like to thank Maia Smith and Cydney Nielsen for assistance in preparing some of the figures in the paper.

Krzywinski, M. (2016) Visualizing Clonal Evolution in Cancer. Mol Cell 62:652-656.

Binning High-Resolution Data

Limitations in print resolution and visual acuity impose limits on data density and detail.

Your printer can print at 1,200 or 2,400 dots per inch. At reading distance, your reader can resolve about 200–300 lines per inch. This large gap between how finely we can print and how well we can see can create problems when we don't take visual acuity into account.

The column provides some guidelines—particularly relevant when showing whole-genome data, where the scale of elements of interest such as genes is below the visual acuity limit—for binning data so that they are represented by elements that can be comfortably discerned.
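
One way to act on this is to cap the number of bins at what a reader can resolve. The sketch below is only an illustration: the function names are hypothetical, the default of 200 lines per inch is the lower end of the range quoted above, and summarizing each bin by its maximum is an assumption rather than the column's recommendation.

```python
def max_resolvable_bins(width_inches, lines_per_inch=200):
    """Largest number of bins a reader could distinguish across a figure."""
    return int(width_inches * lines_per_inch)

def bin_max(values, n_bins):
    """Split values into at most n_bins contiguous bins and keep each bin's maximum."""
    size = -(-len(values) // n_bins)  # ceiling division
    return [max(values[i:i + size]) for i in range(0, len(values), size)]

# Hypothetical example: a 3.5-inch-wide figure
print(max_resolvable_bins(3.5))
print(bin_max([1, 5, 2, 8, 3, 0], 3))
```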

Krzywinski, M. (2016) Points of view: Binning high-resolution data. Nature Methods 13:463.

Regression diagnostics

Residual plots can be used to validate assumptions about the regression model.

Continuing with our series on regression, we look at how you can identify issues in your regression model.

The difference between the observed value and the model's predicted value is the residual, `r_i = y_i - \hat{y}_i`, a very useful quantity to identify the effects of outliers and trends in the data that might suggest your model is inadequate.
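
A minimal sketch of computing residuals for a straight-line fit. The data are hypothetical, with one response placed well above the trend, and the helper name is an assumption.

```python
def fit_line(xs, ys):
    """Least-squares intercept and slope for a straight-line model."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return my - slope * mx, slope

# Hypothetical data; the third response sits well above the trend
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [1.0, 2.1, 6.0, 3.9, 5.1]

intercept, slope = fit_line(xs, ys)
residuals = [y - (intercept + slope * x) for x, y in zip(xs, ys)]

for x, r in zip(xs, residuals):
    print(f"x = {x}: residual = {r:+.2f}")
```

For a least-squares fit with an intercept, the residuals sum to zero, so one large positive residual is balanced by smaller negative ones elsewhere.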

We also discuss normal probability plots (or Q-Q plots) and show how these can be used to check that the residuals are normally distributed, which is one of the assumptions of regression (constant variance being another).

Background reading

Altman, N. & Krzywinski, M. (2016) Points of Significance: Analyzing outliers: Influential or nuisance? Nature Methods 13:281-282.

Altman, N. & Krzywinski, M. (2015) Points of Significance: Multiple Linear Regression. Nature Methods 12:1103-1104.

Altman, N. & Krzywinski, M. (2015) Points of significance: Simple Linear Regression. Nature Methods 12:999-1000.

Analyzing Outliers: Influential or Nuisance?

Some outliers influence the regression fit more than others.

This month our column addresses the effect that outliers have on linear regression.

You may be surprised, but not all outliers have the same influence on the fit (e.g. regression slope) or inference (e.g. confidence or prediction intervals). Outliers with large leverage—points that are far from the sample average—can have a very large effect. On the other hand, if the outlier is close to the sample average, it may not influence the regression slope at all.

Quantities such as Cook's distance and the so-called hat matrix, which defines leverage, are useful in assessing the effect of outliers.
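
For a straight-line fit, the leverage of each point (the diagonal of the hat matrix) has a simple closed form, `h_i = 1/n + (x_i - \bar{x})^2 / \sum_j (x_j - \bar{x})^2`. The sketch below uses hypothetical data and helper names to show that shifting the response of a point at the sample average leaves the slope unchanged, while the same shift at a high-leverage point changes it substantially. Cook's distance is not computed here.

```python
def leverages(xs):
    """Diagonal of the hat matrix for a straight-line fit with intercept."""
    n = len(xs)
    mx = sum(xs) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    return [1 / n + (x - mx) ** 2 / sxx for x in xs]

def slope(xs, ys):
    """Least-squares slope of a straight-line fit."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx

# Hypothetical data: x = 4 is the sample average, x = 10 is far from it
xs = [1.0, 2.0, 3.0, 4.0, 10.0]
ys = [1.0, 2.0, 3.0, 4.0, 10.0]

h = leverages(xs)
shift_at_mean = ys[:3] + [ys[3] + 5.0] + ys[4:]  # move the point at x = 4
shift_far = ys[:4] + [ys[4] + 5.0]               # move the point at x = 10

print("leverages:", [round(v, 2) for v in h])
print("slope, original:", round(slope(xs, ys), 3))
print("slope, shift at the mean:", round(slope(xs, shift_at_mean), 3))
print("slope, shift far from the mean:", round(slope(xs, shift_far), 3))
```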

Background reading

Altman, N. & Krzywinski, M. (2015) Points of Significance: Multiple Linear Regression. Nature Methods 12:1103-1104.

Altman, N. & Krzywinski, M. (2015) Points of significance: Simple Linear Regression. Nature Methods 12:999-1000.

Typographical posters of bird songs

Chirp, chirp, chirp but much better looking.

If you like these, check out my other typographical art posters.

Happy 2016 Pi Day—gravity of `\pi`

Celebrate `\pi` Day (March 14th) with colliding digits in space. This year, I celebrate the detection of gravitational waves at the LIGO lab and simulate the effect of gravity on masses created from the digits of `\pi`.

Some strange things can happen.

The art is featured in the Gravity of Pi article on the Scientific American SA Visual blog.

Check out art from previous years: 2013 `\pi` Day, 2014 `\pi` Day and 2015 `\pi` Day.

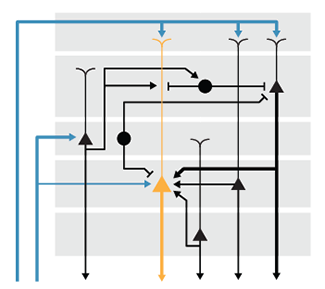

Neural Circuit Diagrams

Use alignment and consistency to untangle complex circuit diagrams.

This month we apply the ideas presented in our column about drawing pathways to neural circuit diagrams. Neural circuits are networks of cells or regions, typically with a large number of variables, such as cell and neurotransmitter type.

We discuss how to effectively route arrows and avoid the pitfalls of redundant encoding, and suggest ways to incorporate emphasis in the layout.

Hunnicutt, B.J. & Krzywinski, M. (2016) Points of View: Neural circuit diagrams. Nature Methods 13:189.

Background reading

Hunnicutt, B.J. & Krzywinski, M. (2016) Points of View: Pathways. Nature Methods 13:5.

Wong, B. (2010) Points of View: Gestalt principles (part 1). Nature Methods 7:863.

Wong, B. (2010) Points of View: Gestalt principles (part 2). Nature Methods 7:941.

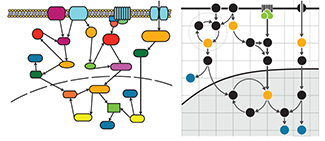

Pathways

Apply visual grouping principles to add clarity to information flow in pathway diagrams.

We draw on the Gestalt principles of connection, grouping and enclosure to construct practical guidelines for drawing pathways with a clear layout that maintains hierarchy.

We include tips about how to use negative space and align nodes to emphasize groups and how to effectively draw curved arrows to clearly show paths.

Hunnicutt, B.J. & Krzywinski, M. (2016) Points of View: Pathways. Nature Methods 13:5.

Background reading

Wong, B. (2010) Points of View: Gestalt principles (part 1). Nature Methods 7:863.

Wong, B. (2010) Points of View: Gestalt principles (part 2). Nature Methods 7:941.

Multiple Linear Regression

When multiple variables are associated with a response, the interpretation of a prediction equation is seldom simple.