visualization + design

Getting into Visualization of Large Biological Data Sets

The 20 imperatives of information design

Martin Krzywinski, Inanc Birol, Steven Jones, Marco Marra

Presented at Biovis 2012 (Visweek 2012). Content is drawn from my book chapter Visualization Principles for Scientific Communication (Martin Krzywinski & Jonathan Corum) in the upcoming open access Cambridge Press book Visualizing biological data - a practical guide (Seán I. O'Donoghue, James B. Procter, Kate Patterson, eds.), a survey of best practices and unsolved problems in biological visualization. This book project was conceptualized and initiated at the Vizbi 2011 conference.

If you are interested in guidelines for data encoding and visualization in biology, see our Visualization Principles Vizbi 2012 Tutorial and Nature Methods Points of View column by Bang Wong.

ENSURE LEGIBILITY AND FOCUS ON THE MESSAGE

Create legible visualizations with a strong message. Make elements large enough to be resolved comfortably. Bin dense data to avoid sacrificing clarity.

Distinguish between exploration and communication.

Use exploratory tools (e.g. genome browsers) to discover patterns and validate hypotheses. Avoid using screenshots from these applications for communication – they are typically too complex and too cluttered with navigational elements to make effective static figures.

Do not exceed resolution of visual acuity.

Our acuity is ~50 cycles/degree or about 1/200 (0.3 pt) at 10 inches. Ensure the reader can comfortably see detail by limiting resolution to no more than 50% of acuity. Where possible, elements that require visual separation should be at least 1 pt apart.

Use no more than ~500 scale intervals.

Ensure data elements are at least 1 pt on a two-column Nature figure (6.22 in), 4 pixels on a 1920 horizontal resolution display, or 2 pixels on a typical LCD projector. These restrictions become challenges for large genomes.
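The limit of roughly 500 intervals follows from dividing the width of each medium by its minimum element size. The sketch below works through that arithmetic. The 1024-pixel projector width and the genome size are assumptions chosen for illustration, not values from the text.

```python
POINTS_PER_INCH = 72  # PostScript points per inch


def max_intervals(extent, min_element):
    """Number of distinguishable scale intervals across a medium."""
    return int(extent // min_element)


# Minimum element sizes are from the text; the projector width is assumed.
media = {
    "two-column Nature figure": max_intervals(6.22 * POINTS_PER_INCH, 1),  # 1 pt
    "1920 px display": max_intervals(1920, 4),                             # 4 px
    "LCD projector (assumed 1024 px)": max_intervals(1024, 2),             # 2 px
}

GENOME_SIZE_BP = 3_100_000_000  # hypothetical genome, roughly human-sized

for name, n in media.items():
    mb_per_interval = GENOME_SIZE_BP / n / 1e6
    print(f"{name}: {n} intervals, ~{mb_per_interval:.1f} Mb per interval")
```

Under these assumptions each interval on a whole-genome axis spans several megabases, which is why binning and summary statistics become necessary.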

Show variation with statistics.

Data on large genomes must be downsampled. Depict variation with min/max plots and consider hiding it when it is within noise levels. Help the reader notice significant outliers.
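One way to downsample while keeping variation visible is to summarize each bin by its minimum, mean and maximum, which is the information a min/max plot draws. This is a minimal sketch: the function name and the equal-width binning are illustrative choices, not a prescribed method.

```python
from statistics import mean


def downsample_min_max(values, n_bins):
    """Split values into n_bins contiguous bins; return (min, mean, max) per bin."""
    if n_bins <= 0:
        raise ValueError("n_bins must be positive")
    n = len(values)
    summaries = []
    for i in range(n_bins):
        start = i * n // n_bins
        end = (i + 1) * n // n_bins
        chunk = values[start:end]
        if chunk:  # bins can be empty when n_bins > len(values)
            summaries.append((min(chunk), mean(chunk), max(chunk)))
    return summaries


signal = [1, 2, 3, 10, 2, 1, 0, 1, 2, 3, 2, 1]
bins = downsample_min_max(signal, 3)
for lo, avg, hi in bins:
    print(f"min={lo} mean={avg} max={hi}")
```

The spike of 10 in the first bin survives as that bin's maximum, whereas a mean-only summary would flatten it.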

Do not draw small elements to scale.

Map size of elements onto clearly legible symbols. Legibility and clarity are more important than precise positioning and sizing. Discretize sizes and positions to facilitate making meaningful comparisons.
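Discretizing sizes can be as simple as assigning each element to one of a few size classes and drawing every member of a class with the same legible symbol. The class boundaries and symbol widths below are hypothetical. In practice they would be chosen to suit the data and the figure.

```python
import bisect

# Hypothetical size classes: upper bounds in base pairs, and the symbol
# width (in points) used for every element that falls into each class.
CLASS_BOUNDS_BP = [1_000, 10_000, 100_000]  # <1 kb, 1-10 kb, 10-100 kb, >=100 kb
SYMBOL_WIDTH_PT = [1.0, 2.0, 3.0, 4.0]


def symbol_width(length_bp):
    """Return the legible symbol width for an element of the given length."""
    return SYMBOL_WIDTH_PT[bisect.bisect_right(CLASS_BOUNDS_BP, length_bp)]


for length in (500, 1_000, 50_000, 2_000_000):
    print(f"{length} bp -> {symbol_width(length)} pt")
```

No element is drawn smaller than 1 pt, and the reader compares four distinct sizes rather than a continuum of barely different ones.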

Aggregate data for focused theme.

A strong visual message has no uncertainty in its interpretation. Focus on a single theme by aggregating unnecessary detail.

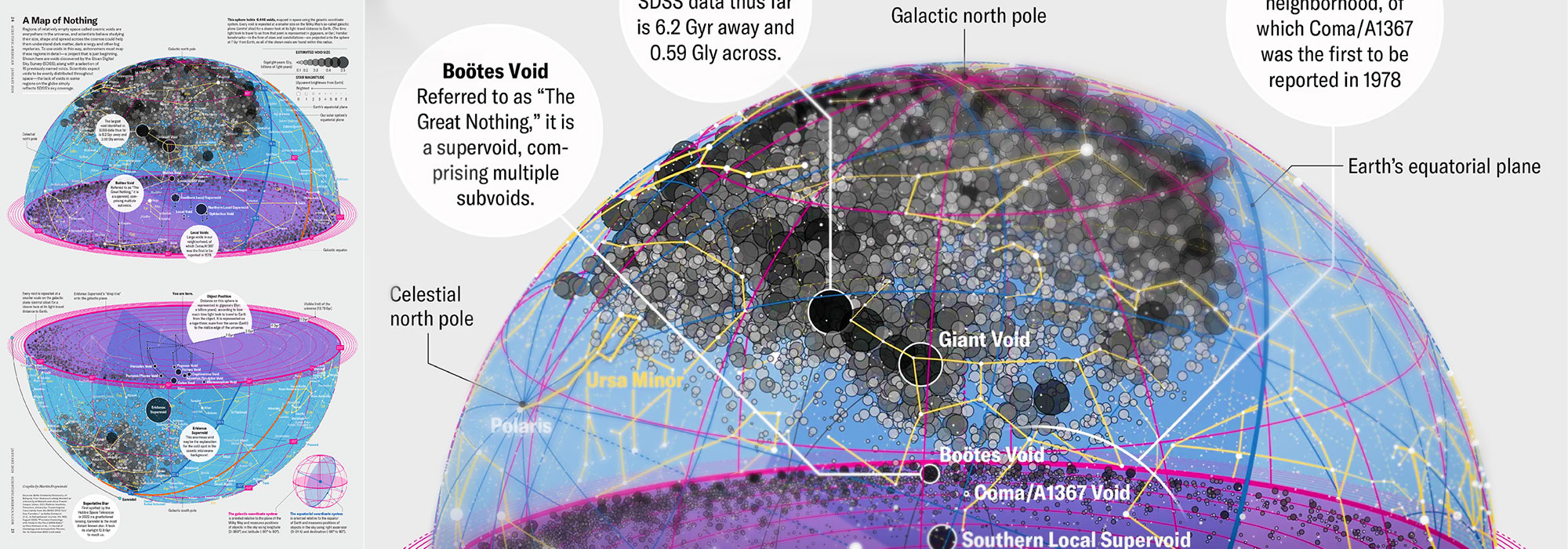

Show density maps and outliers.

Establishing context is helpful when emergent patterns in the data provide a useful perspective on the message. When data sets are large, it is difficult to maintain detail in the context layer because the density of points can visually overwhelm the area of interest. In this case, consider showing only the outliers in the data set.
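The text does not say how outliers should be identified. As one possible approach, the sketch below uses Tukey's interquartile-range rule (an assumption, with the conventional factor of 1.5) to separate points worth drawing individually from the bulk that can be summarized as a density layer.

```python
from statistics import quantiles


def split_outliers(values, k=1.5):
    """Separate values into (context, outliers) using the IQR rule."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    context = [v for v in values if low <= v <= high]
    outliers = [v for v in values if v < low or v > high]
    return context, outliers


coverage = [10, 11, 12, 11, 10, 12, 11, 10, 50]
context, outliers = split_outliers(coverage)
print("draw as density:", context)
print("draw individually:", outliers)
```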

Consider whether showing the full data set is useful.

The reader’s attention can be focused by increasing the salience of interesting patterns. Other complex data sets, such as networks, are shown more effectively when context is carefully edited or even removed.

USE EFFECTIVE VISUAL ENCODINGS TO ORGANIZE INFORMATION.

Match the visual encoding to the hypothesis. Use encodings specific and sensitive to important patterns. Dense annotations should be independent of the core data in distinct visual layers.

Use the simplest encoding.

Choose concise encodings over elaborate ones.

Help the reader judge accurately.

Accuracy and speed in detecting differences in visual forms depend on how information is presented. We judge relative lengths more accurately than areas, particularly when elements are aligned and adjacent. Our judgment of area is poor because we use length as a proxy, which causes us to systematically underestimate area.
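The underestimate follows from simple geometry. When a value is encoded as the area of a circle, the radius grows only with the square root of the value, so a reader who uses length as a proxy sees a smaller change than the data contain. The function below is an illustrative sketch, not part of any plotting library.

```python
import math


def radius_for_area_encoding(value, unit_radius=1.0):
    """Radius of a circle whose area is proportional to value."""
    return unit_radius * math.sqrt(value)


for value in (1, 2, 4):
    r = radius_for_area_encoding(value)
    print(f"value x{value}: radius x{r:.2f}, area x{r * r:.2f}")
```

Doubling the value increases the radius by a factor of only about 1.41, and a fourfold change is needed before the radius doubles.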

Use encodings that are robust and comparable.

In addition to being transparent and predictable, visualizations must be robust with respect to the data. Changes in the data set should be reflected by proportionate changes in the visualization. Be wary of force-directed network layouts, which have low spatial autocorrelation. In general, these are neither sensitive nor specific to patterns of interest.

Crop scale to reveal fine structure in data.

Biological data sets are typically high-resolution (changes at base pair level can be meaningful), sparse (distances between changes are orders of magnitude greater than the affected areas) and connect distant regions by adjacency relationships (gene fusions and other rearrangements). It is difficult to take these properties into account on a fixed linear scale, the kind used by traditional genome browsers. To mitigate this, crop and order axis segments arbitrarily and apply a scale adjustment to a segment or portion thereof.

Use perceptual palettes.

Selecting perceptually favorable colors is difficult because most software does not support the required color spaces. Brewer palettes exist for the full range of colors to help us make useful choices. Qualitative palettes have no perceived order of importance. Sequential palettes are suitable for heat maps because they have a natural order and the perceived difference between adjacent colors is constant. Twin hue diverging palettes are useful for two-sided quantitative encodings, such as immunofluorescence and copy number.

Never use hue to encode magnitude.

Hue does not communicate relative change in values because we perceive hue categorically (blue, green, yellow, etc). Changes within one category have less perceptual impact than transitions between categories. For example, variations across the green/yellow boundary are perceived to be larger than variations across the same sized hue interval in other parts of the spectrum.

USE EFFECTIVE DESIGN PRINCIPLES TO EMPHASIZE AND COMMUNICATE PATTERNS.

Well-designed figures illustrate complex concepts and patterns that may be difficult to express concisely in words. Figures that are clear, concise and attractive are effective – they form a strong connection with the reader and communicate with immediacy. These qualities can be achieved with methods of graphic design, which are based on theories of how we perceive, interpret and organize visual information.

Reduce unnecessary variation.

The reader does not know what is important in a figure and will assume that any spatial or color variation is meaningful. The figure’s variation should come solely from data or act to organize information.

Encapsulate details.

Including details not relevant to the core message of the figure can create confusion. Encapsulation should be done to the same level of detail and to the simplest visual form. Duplication in labels should be avoided.

Use consistent alignment. Center on theme.

Establish equivalence using consistent alignment. Awkward callouts can be avoided if elements are logically placed.

Respect natural hierarchies.

When the data set embodies a natural hierarchy, use an encoding that emphasizes it clearly and memorably. The use of hierarchy in layout (e.g. tabular form) and encoding can significantly improve a muddled figure.

Be aware of the luminance effect.

Color is a useful encoding – the eye can distinguish about 450 levels of gray, 150 hues, and 10-60 levels of saturation, depending on the color – but our ability to perceive differences varies with context. Adjacent tones with different luminance values can interfere with discrimination, an interaction known as the luminance effect.

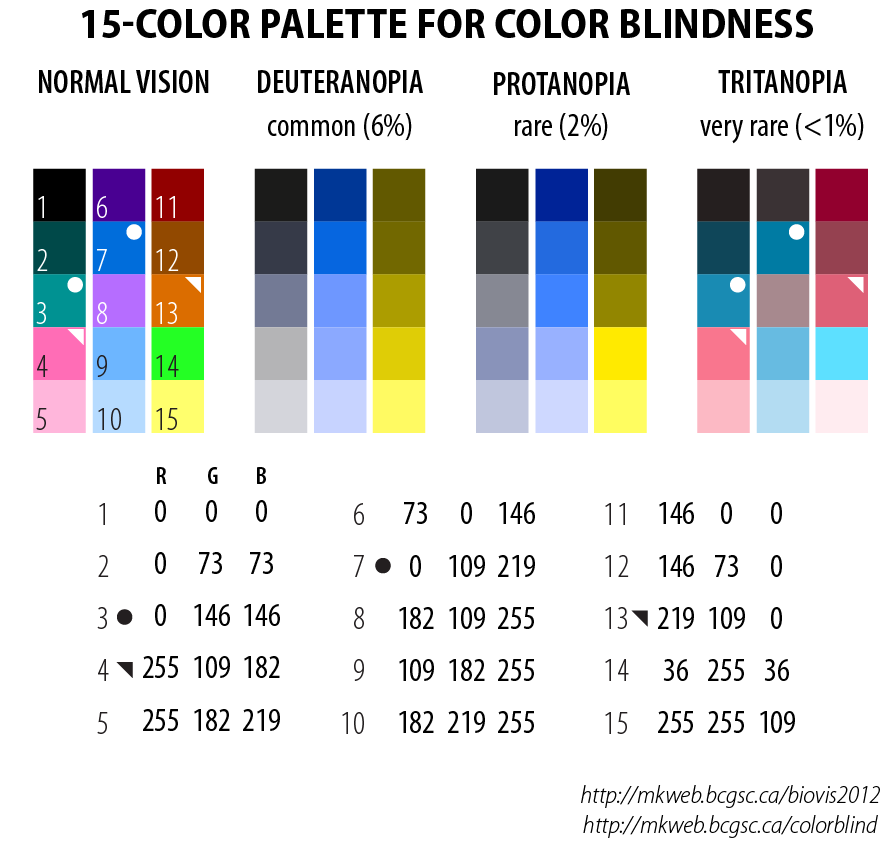

Be aware of color blindness.

In an audience of 8 men and 8 women, chances are 50% that at least one has some degree of color blindness. Use a palette that is color-blind safe. In the palette below, the 15 colors appear as 5-color tone progressions to those with color blindness. Additional encodings can be achieved with symbols or line thickness.
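The 50% figure for an audience of 8 men and 8 women can be checked with a short calculation. The prevalence values used here (about 8% of men and 0.5% of women) are commonly quoted estimates and are assumptions, as is the independence of audience members. Neither is stated in the text.

```python
P_MEN = 0.08     # assumed prevalence of color blindness among men
P_WOMEN = 0.005  # assumed prevalence of color blindness among women


def p_at_least_one(n_men, n_women, p_men=P_MEN, p_women=P_WOMEN):
    """Probability that at least one audience member is color blind."""
    p_none = (1 - p_men) ** n_men * (1 - p_women) ** n_women
    return 1 - p_none


print(f"8 men and 8 women: {p_at_least_one(8, 8):.2f}")
```

With these assumed rates the result is about 0.51, consistent with the 50% quoted for this audience.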

I have designed 15-color palettes for each of the three common types of color blindness.

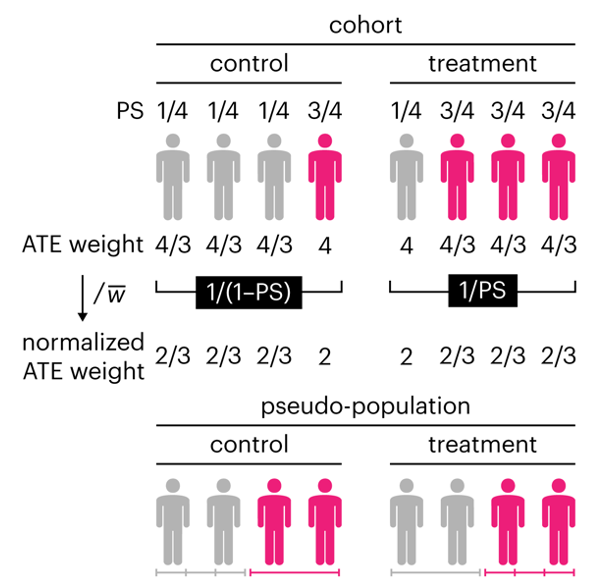

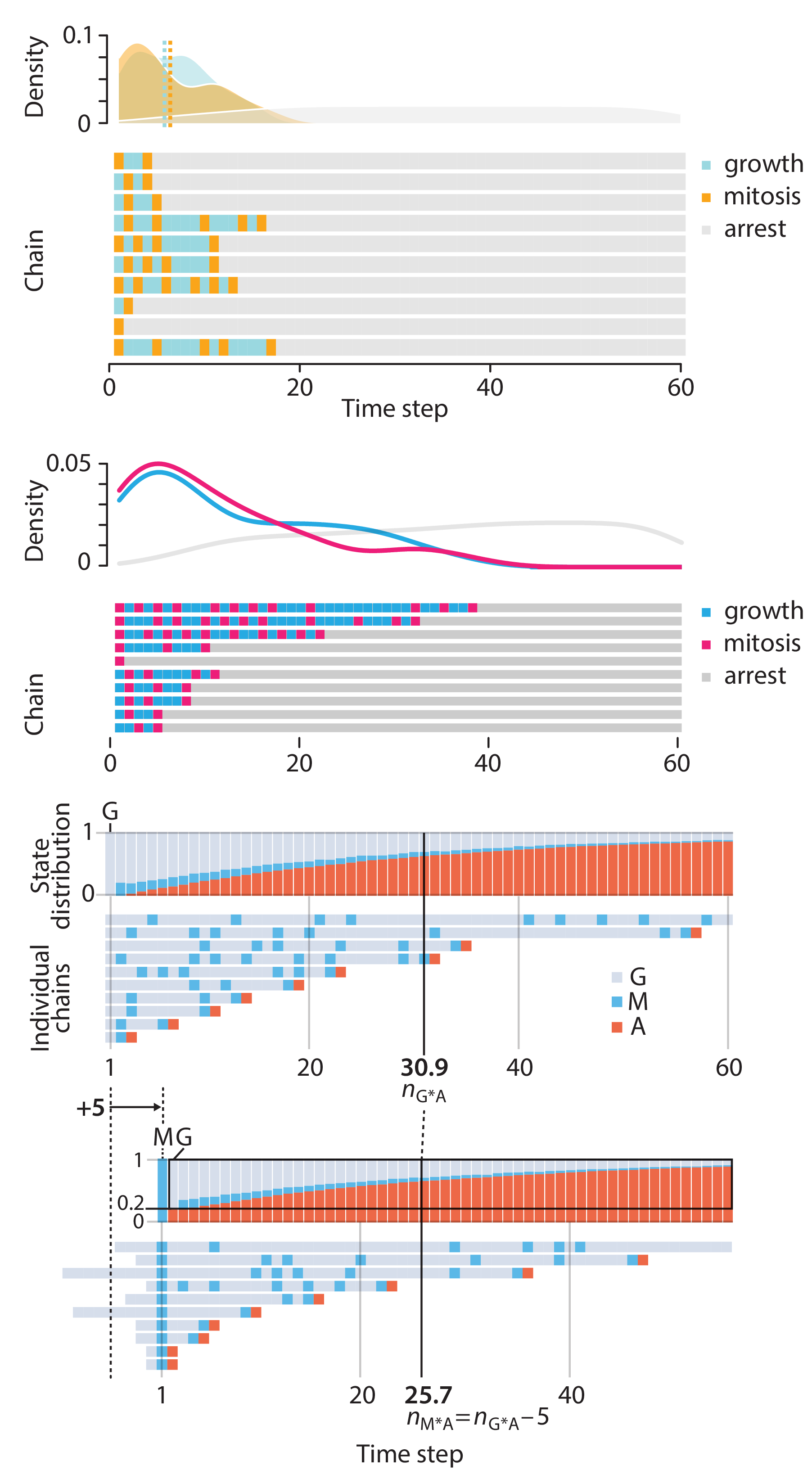

Propensity score weighting

The needs of the many outweigh the needs of the few. —Mr. Spock (Star Trek II)

This month, we explore a related and powerful technique to address bias: propensity score weighting (PSW), which applies weights to each subject instead of matching (or discarding) them.

Kurz, C.F., Krzywinski, M. & Altman, N. (2025) Points of significance: Propensity score weighting. Nat. Methods 22:1–3.

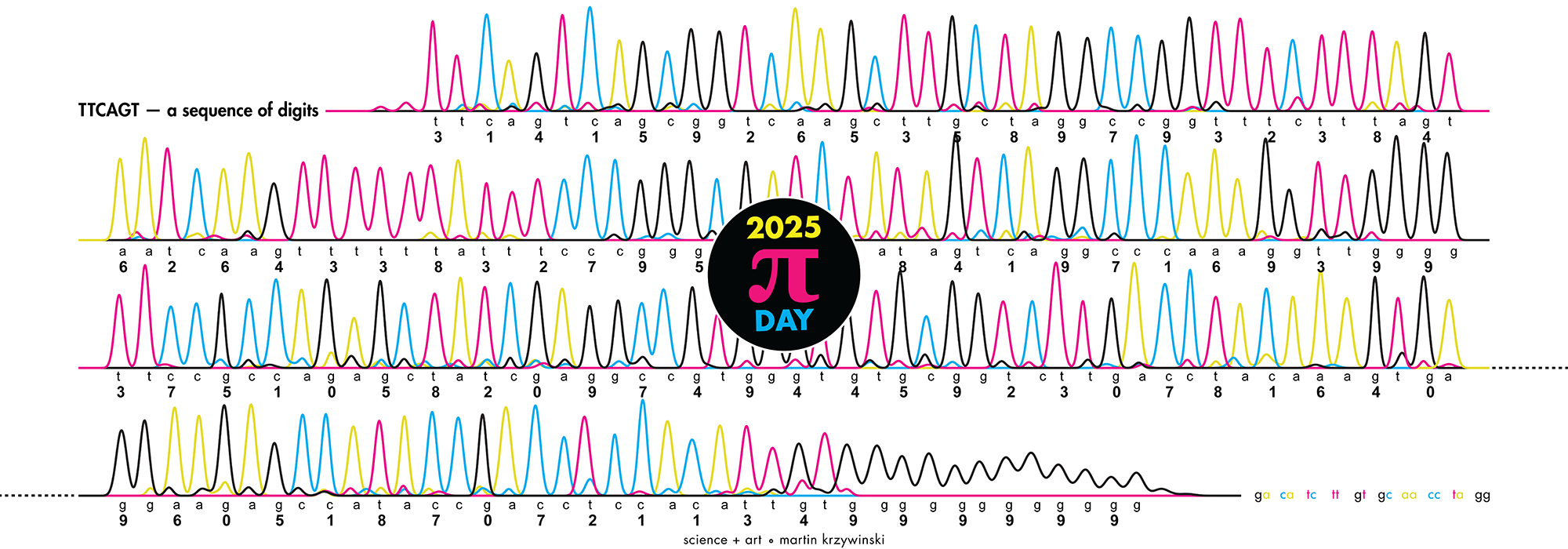

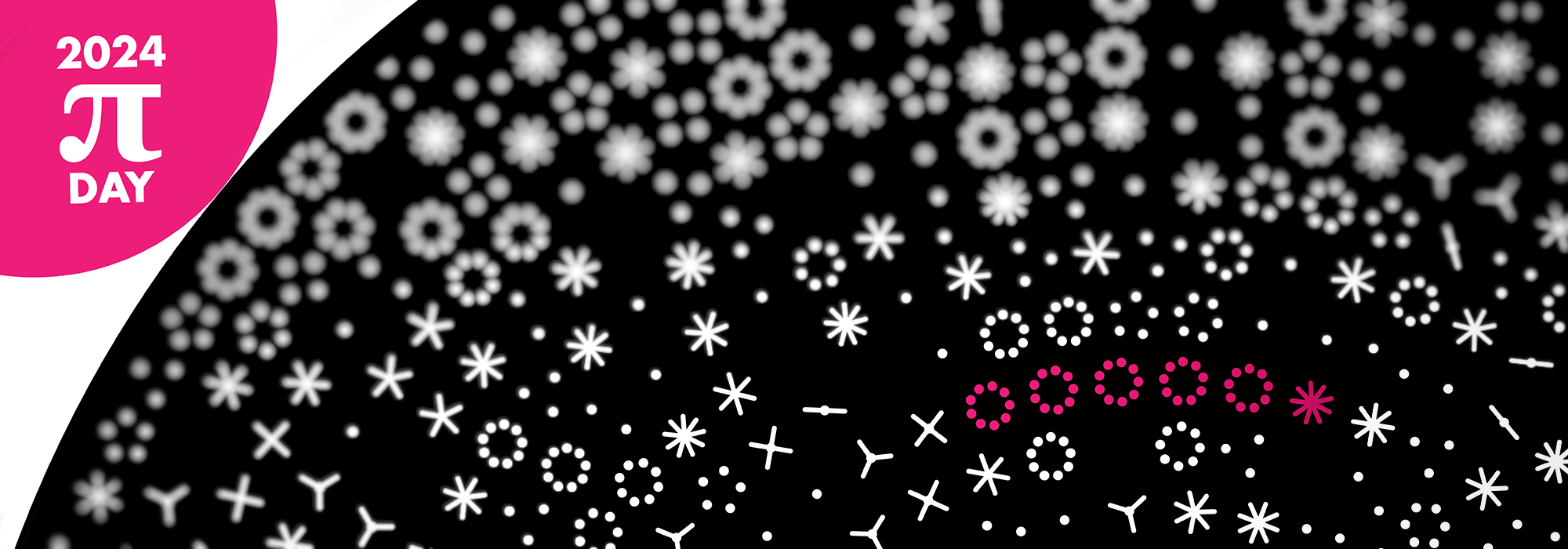

Happy 2025 π Day—

TTCAGT: a sequence of digits

Celebrate π Day (March 14th) and sequence digits like its 1999. Let's call some peaks.

Crafting 10 Years of Statistics Explanations: Points of Significance

I don’t have good luck in the match points. —Rafael Nadal, Spanish tennis player

Points of Significance is an ongoing series of short articles about statistics in Nature Methods that started in 2013. Its aim is to provide clear explanations of essential concepts in statistics for a nonspecialist audience. The articles favor heuristic explanations and make extensive use of simulated examples and graphical explanations, while maintaining mathematical rigor.

Topics range from basic, but often misunderstood, such as uncertainty and P-values, to relatively advanced, but often neglected, such as the error-in-variables problem and the curse of dimensionality. More recent articles have focused on timely topics such as modeling of epidemics, machine learning, and neural networks.

In this article, we discuss the evolution of topics and details behind some of the story arcs, our approach to crafting statistical explanations and narratives, and our use of figures and numerical simulations as props for building understanding.

Altman, N. & Krzywinski, M. (2025) Crafting 10 Years of Statistics Explanations: Points of Significance. Annual Review of Statistics and Its Application 12:69–87.

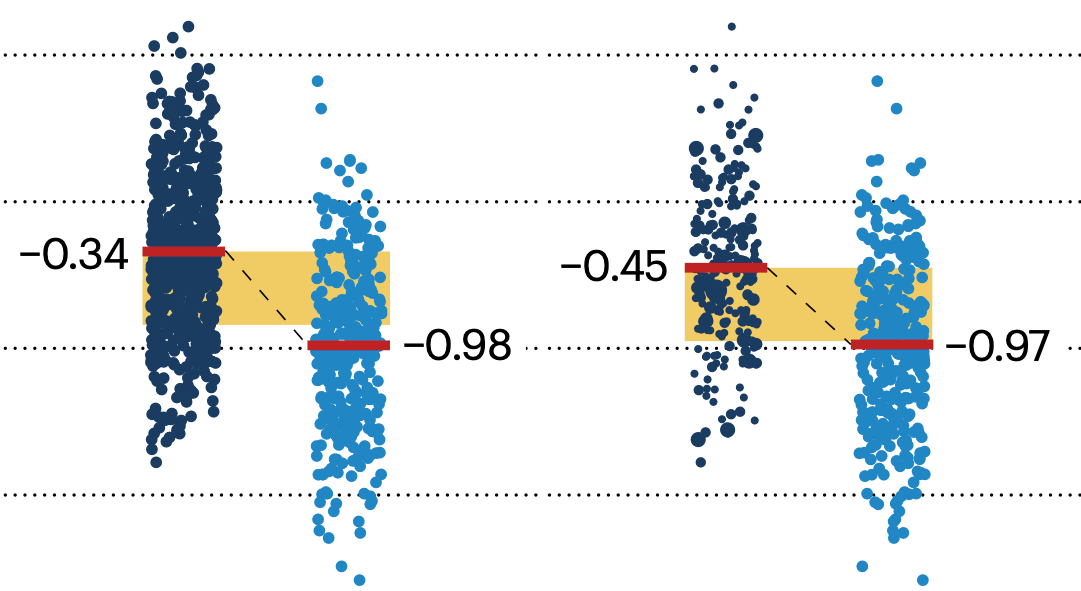

Propensity score matching

I don’t have good luck in the match points. —Rafael Nadal, Spanish tennis player

In many experimental designs, we need to keep in mind the possibility of confounding variables, which may give rise to bias in the estimate of the treatment effect.

If the control and experimental groups aren't matched (or, roughly, similar enough), this bias can arise.

Sometimes this can be dealt with by randomizing, which on average can balance this effect out. When randomization is not possible, propensity score matching is an excellent strategy to match control and experimental groups.

Kurz, C.F., Krzywinski, M. & Altman, N. (2024) Points of significance: Propensity score matching. Nat. Methods 21:1770–1772.

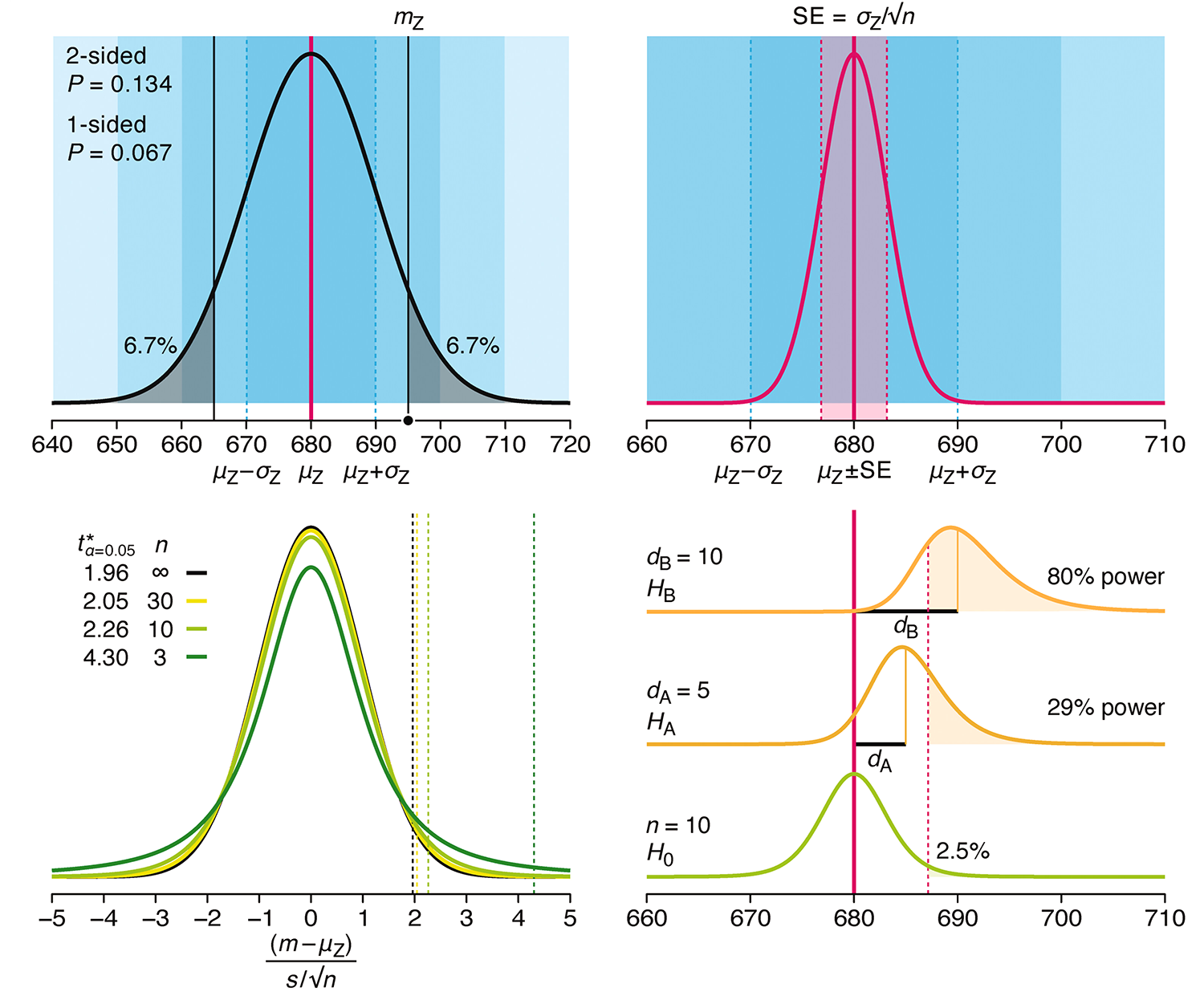

Understanding p-values and significance

P-values combined with estimates of effect size are used to assess the importance of experimental results. However, their interpretation can be invalidated by selection bias when testing multiple hypotheses, fitting multiple models or even informally selecting results that seem interesting after observing the data.

We offer an introduction to principled uses of p-values (targeted at the non-specialist) and identify questionable practices to be avoided.

Altman, N. & Krzywinski, M. (2024) Understanding p-values and significance. Laboratory Animals 58:443–446.